Jon Knight


Posts by Jon Knight

Editing API

With EditDataElement under our belt, it’s time to turn our thoughts to more of the editing/creating side of the system. Gary, Jason and I had a sit-down thinking session this afternoon and came up with four things we need an API for:

- Given a SUT ID (or an SU ID from which we can find a SUT ID), which SUTs can be children? We need this in order to produce a drop-down list of possible SUTs that can be added under an SU in an editor.

- Given a SUT ID, return an XML structure describing the data type groups and data types that can be under it. This is needed so that an editor can work out what should be in the form, what format each field has, etc.

- Create an SU with a given SUT ID (and possibly use the same API for editing an SU with a given SU ID?). This needs to be told the parent SU ID, and has to check both that the requested SUT ID is a valid child and that the user has permission to create children. If everything is OK it generates a new SU of the given type and links it to the parent SU in the structural_unit_link table. It then has to copy the ACLs from the parent SU to the new SU before returning the new SU ID.

- An API for managing ACLs. This needs to allow new ACL lines to be added and existing ones changed and/or deleted. We had a talk about how we envisage ACLs working and it seems to be OK as far as we can see: ACLs on new SUs will by default inherit from the parent SU so that the same people have the same rights (ie if you can view or edit a reading list, you’ll be able to view or edit a book attached to it). Users can remove ACLs for groups of the same rank as, or lower than, their own (so academics can’t remove the Sysadmin or Departmental Admin groups’ rights, for example).
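To make the create-SU step above a bit more concrete, here is a minimal Python sketch of its checks, run against hypothetical in-memory tables rather than the real MySQL schema. The `Store` layout, the rule that an ACL line with `edit` set allows adding children, and the numeric group ranks (higher number = more senior) are all assumptions for illustration; only the overall order (validate the child SUT, check permission, create, link, copy the parent's ACLs) comes from the plan above.

```python
from dataclasses import dataclass, field


class PermissionDenied(Exception):
    pass


@dataclass
class Store:
    """Hypothetical in-memory stand-in for the relevant LUMP tables."""
    sut_children: dict = field(default_factory=dict)  # parent SUT ID -> set of allowed child SUT IDs
    su_type: dict = field(default_factory=dict)       # SU ID -> SUT ID
    links: list = field(default_factory=list)         # (parent SU ID, child SU ID), like structural_unit_link
    acls: dict = field(default_factory=dict)          # SU ID -> list of ACL lines (dicts)
    next_id: int = 1


def child_suts(store, su_id):
    """Which SUT IDs may be created under this SU (for an editor's drop-down)."""
    return sorted(store.sut_children.get(store.su_type[su_id], set()))


def may_add_children(store, user_groups, su_id):
    # Assumption: an ACL line granting 'edit' to one of the user's groups allows this.
    return any(line["group"] in user_groups and line["edit"]
               for line in store.acls.get(su_id, []))


def create_su(store, user_groups, parent_su_id, sut_id):
    if sut_id not in child_suts(store, parent_su_id):
        raise ValueError(f"SUT {sut_id} is not a valid child here")
    if not may_add_children(store, user_groups, parent_su_id):
        raise PermissionDenied("no permission to add children to this SU")
    new_id = store.next_id
    store.next_id += 1
    store.su_type[new_id] = sut_id
    store.links.append((parent_su_id, new_id))
    # The new SU starts with its own copy of the parent's ACL lines.
    store.acls[new_id] = [dict(line) for line in store.acls.get(parent_su_id, [])]
    return new_id


def may_remove_acl(user_rank, group_rank):
    # Assumed numeric ranks, higher = more senior: users may only remove
    # ACLs for groups of their own rank or lower.
    return group_rank <= user_rank
```

Copying the ACL lines, rather than pointing the child at the parent's, means later changes to the child's rights don't leak back up the tree.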

This evening a first cut at the first two of those has been knocked out. This should let Jason play with front end form ideas for the editors, even if he can’t actually create new SUs yet. Creating SUs shouldn’t be a big deal (it’s very similar to EditDataElement) but the ACL management API is going to have to be carefully thought out, especially if we might want to add new capabilities in the future. For example, a “can_create_children” ACL would let you edit an existing SU but not add structure beneath it, and maybe a “can_delete” one as well so that academics can allow research students to tweak typos in their lists but not add or remove items. Another suggestion from Gary was a “can_publish” ACL type so that only specified folk can authorise the publication/unpublication of an SU (and its children).
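If the ACLs do grow extra capabilities like these, one way to keep the checks extensible is to treat each ACL line as a set of flags. The sketch below uses Python's `enum.Flag`; the flag names simply echo the proposed “can_create_children”, “can_delete” and “can_publish” ACL types, and the group names and example ACL are made up.

```python
from enum import Flag, auto


class Perm(Flag):
    VIEW = auto()
    EDIT = auto()
    CREATE_CHILDREN = auto()  # proposed "can_create_children"
    DELETE = auto()           # proposed "can_delete"
    PUBLISH = auto()          # proposed "can_publish"


def allowed(acl_lines, user_groups, needed):
    """acl_lines: list of (group name, Perm flags) pairs attached to one SU."""
    granted = Perm(0)
    for group, perms in acl_lines:
        if group in user_groups:
            granted |= perms
    # True only if every flag in 'needed' has been granted by some group.
    return needed in granted


# Hypothetical example: research students may fix typos but not restructure a list.
LIST_ACL = [
    ("Academic", Perm.VIEW | Perm.EDIT | Perm.CREATE_CHILDREN | Perm.DELETE),
    ("Research Student", Perm.VIEW | Perm.EDIT),
]
```

Adding a capability later is then one more enum member, rather than a change to every permission check.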

Talking of deleting, we also tweaked the structural_unit table today by adding two new attributes: deleted and status. The deleted attribute indicates whether an SU has been, well, deleted. We don’t want to actually do a database delete as academics and librarians have in the past had “ID10T” moments and deleted stuff they shouldn’t, and getting it back from backups is a pain. By having a simple flag we can allow sysadmins to “undelete” easily – maybe with a periodic backend script that really deletes SUs that were flagged as deleted several months ago.

The status attribute allows us to flag the publication status of the SU. By default this will start as “draft” and so we can ensure that student facing front ends don’t see it. When ready it can be changed to “published” which allows it to be viewed by guests normally (subject to ACLs of course). Lastly there is a “suppressed” status that is intended to allow published SUs to be temporarily unpublished – for example if a module is not running for one year but will need to reappear the next. Not entirely sure if the status attribute isn’t just replicating what we can do with ACLs though – I’ll need to chew that one over with the lads.
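The status values could be checked with something as small as the following. This is an illustrative Python sketch only: `SU` and `student_visible` are hypothetical names, and the ACL decision is passed in as a boolean rather than worked out from real ACL lines.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    DRAFT = "draft"
    PUBLISHED = "published"
    SUPPRESSED = "suppressed"


@dataclass
class SU:
    id: int
    status: Status = Status.DRAFT  # new SUs start as drafts
    deleted: bool = False


def student_visible(su, acl_allows_view):
    """Student-facing front ends only show live, published SUs the ACLs permit."""
    return not su.deleted and su.status is Status.PUBLISHED and acl_allows_view
```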

Surviving Database problems

The importation of LORLS v5 code has hit a snag: the object oriented nature of the Perl code created large numbers of connections and would eventually, after processing a largish number of reading lists, blow up because the MySQL database would not accept any more connections. A way round this is to replace the Perl DBI connect() method with a similar call to Perl’s DBIx modules, as DBIx supports reconnections.

Some cunning tweaking of the BaseSQL init() method is also required so that we can keep on reusing the same database connection over and over again, as there’s little point generating a new one for each Perl module in use. BaseSQL now uses a cunning Perl hack based on the behaviour of unnamed embedded subroutines (ie closures) and variable scoping/referencing. This allows a connection to be set up if one doesn’t already exist, but also allows the database handle for the connection to be shared amongst all instances of the Perl modules that inherit from BaseSQL in a process. This means that the LUMP side of things only uses at most one MySQL database connection, and will reconnect if the database goes away (it’ll try 500 times before it gives up, which covers the database being restarted during log rotations, for example, which is what originally highlighted this problem).
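The BaseSQL trick is Perl-specific, but the same idea (a connection held in a closure's enclosing scope and shared by every caller) can be sketched in Python. This is a stand-in rather than the BaseSQL code: it uses `sqlite3` instead of MySQL and DBIx, `make_connection_provider` is a hypothetical name, and the delay between the 500 retries is an assumed value.

```python
import sqlite3
import time


def make_connection_provider(connect, max_attempts=500, delay=1.0):
    """Return a function that hands out one shared DB connection.

    The handle lives in this enclosing scope, so every caller of the returned
    function shares it. 'connect' is any zero-argument connection factory.
    """
    handle = None

    def get_handle():
        nonlocal handle
        if handle is not None:
            try:
                handle.execute("SELECT 1")  # cheap liveness check
                return handle
            except sqlite3.Error:
                handle = None  # connection went away; fall through and reconnect
        for attempt in range(max_attempts):
            try:
                handle = connect()
                return handle
            except sqlite3.Error:
                if attempt + 1 < max_attempts:
                    time.sleep(delay)  # e.g. wait out a database restart
        raise RuntimeError(f"could not connect after {max_attempts} attempts")

    return get_handle
```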

However all is not rosy in the garden of SQL: the two old LORLSv5 modules that are needed to read the old reading lists can also generate large numbers of connections. I’m experimenting with closing the handles they create as soon as I’ve issued a new() method call and then telling them to use a hand-crafted DBIx connection that is already set up. It seems to work, but I keep finding more bits where it sets up new connections unexpectedly – not helped by the recursive nature of the build_structures LUMP import script. Aaggghhhh! 🙂

Creating Indexes

Whilst writing a little dev script to nullify Moodle IDs for Jason, I realised that things could be a bit slow sometimes searching for SUs when the system had a large number of reading lists in it. Once again I’d made the schoolboy error of forgetting to create indexes in the DB schema for the foreign keys in the various tables. To that end the schema now has indexes on all ID fields in all tables, plus a couple of obvious ones we might want to search on. We’ll probably have to add more in the future, especially on large tables (structural_unit, data_element, access_control_list, user, usergroup for example).
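As a small illustration of why the foreign key indexes matter, the Python sketch below builds a cut-down, hypothetical version of two of the tables in SQLite, indexes the ID columns, and asks SQLite's `EXPLAIN QUERY PLAN` whether a lookup can use an index. The column names are guesses at the real schema, and MySQL's own `EXPLAIN` output looks different.

```python
import sqlite3


def make_schema():
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE structural_unit (
            id INTEGER PRIMARY KEY,
            structural_unit_type_id INTEGER
        );
        CREATE TABLE data_element (
            id INTEGER PRIMARY KEY,
            structural_unit_id INTEGER REFERENCES structural_unit(id),
            data_type_id INTEGER,
            value TEXT
        );
        -- Index the foreign key (ID) columns that lookups filter and join on.
        CREATE INDEX idx_su_sut ON structural_unit(structural_unit_type_id);
        CREATE INDEX idx_de_su ON data_element(structural_unit_id);
        CREATE INDEX idx_de_dt ON data_element(data_type_id);
    """)
    return conn


def uses_index(conn, sql, params=()):
    """Report whether SQLite's query plan for 'sql' uses any index."""
    plan = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return any("INDEX" in row[-1] for row in plan)
```

Looking up all the data elements of one SU can then use `idx_de_su` instead of scanning the whole table, while a search on the unindexed `value` column still falls back to a full scan.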

Also been trying to track down uninitialised variables in the build_structures LORLSv4 import script – it works at the moment but looks messy and I’d rather have properly error-checked code.

The EditDataElement API and Moodle IDs

Today we added the first web API call that could actually change things in the LUMP database (as opposed to just reading from it). The driver for this came from integration with Moodle: we’d already got a LUMP reading list to display from within Moodle, but we needed to be able to link a Moodle ID to a reading list SU. The resulting EditDataElement API call allows some flexibility in how the data element in question is specified. It could be by the data element’s ID, in which case the value is simply updated. However the client code might not know the data element ID, or might need a new one, so it can also create/edit data elements by specifying the required new value, the ID of the SU that it is within and the data type name of the data element.

One subtle finesse required is that some DTs are repeatable but others are not. For repeatable DTs the SU ID and DT name can simply be used to create a new DE. However for non-repeatable DTs, a flag can be provided to indicate whether to replace any existing value. If a DE of the required DT already exists in this SU and this flag is not set to ‘Y’, a permission denied error is returned.
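The decision logic in EditDataElement can be sketched as follows. This is a Python illustration with hypothetical names (`edit_data_element`, the in-memory `elements` dictionary and the `repeatable` lookup), not the actual CGI script. It follows the rules described above: update by DE ID if one is given, otherwise use the SU ID and DT name, honouring the replace flag for non-repeatable DTs.

```python
from dataclasses import dataclass


@dataclass
class DataElement:
    id: int
    su_id: int
    dt_name: str
    value: str


class PermissionDenied(Exception):
    pass


def edit_data_element(elements, repeatable, value, de_id=None,
                      su_id=None, dt_name=None, replace="N"):
    """elements: DE ID -> DataElement; repeatable: DT name -> bool (hypothetical)."""
    if de_id is not None:
        # Known DE: simply update its value.
        elements[de_id].value = value
        return de_id
    if su_id is None or dt_name is None:
        raise ValueError("need either a DE ID or an SU ID plus DT name")
    if not repeatable[dt_name]:
        existing = [de for de in elements.values()
                    if de.su_id == su_id and de.dt_name == dt_name]
        if existing:
            if replace != "Y":
                raise PermissionDenied(f"{dt_name} already set on SU {su_id}")
            existing[0].value = value
            return existing[0].id
    # Repeatable DT, or a non-repeatable one not yet present: create a new DE.
    new_id = max(elements, default=0) + 1
    elements[new_id] = DataElement(new_id, su_id, dt_name, value)
    return new_id
```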

Tweaking output when rendering

Had to slightly change the output XML when using the GetStructuralUnit CGI script API. Originally, if asked for HTML (or whatever format), the code would just generate the output for each child to the required depth level and concatenate it. However this meant that higher levels couldn’t make formatting decisions based on details of lower levels – for example reading list SUs need to know when to turn list tags on/off in HTML, depending on whether the children are notes or book/journal/article/etc SUs. The tweak was to always have the child return a structure:

$child->{su}->{render} = formatted output
$child->{su}->{id} = child SU's ID

The child SU's ID field lets the parent's code make more intelligent rendering decisions.
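To show how a parent might use these child structures, here is a hypothetical Python sketch of a reading list deciding where to open and close `<ul>` tags. It assumes a lookup from SU ID to SU type name (`type_of`), standing in for whatever the real code does with the ID.

```python
def render_list(children, type_of):
    """Join child renderings, wrapping runs of non-note items in <ul> ... </ul>.

    children: list of {"su": {"render": ..., "id": ...}} structures.
    type_of:  hypothetical lookup from SU ID to SU type name.
    """
    out = []
    in_list = False
    for child in children:
        su = child["su"]
        is_note = type_of(su["id"]) == "note"
        if not is_note and not in_list:
            out.append("<ul>")   # first bibliographic item after a note (or at the start)
            in_list = True
        elif is_note and in_list:
            out.append("</ul>")  # a note interrupts the list
            in_list = False
        out.append(su["render"] if is_note else f"<li>{su['render']}</li>")
    if in_list:
        out.append("</ul>")
    return "".join(out)
```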

Deciding how to import LORLS materials into LUMP

Had to produce the rules to attempt to convert LORLS’ rather lax material descriptions into SUs of the appropriate type (book, book chapter, journal, journal article, electronic resource or note). This decision is currently made using a variety of hints. For example book chapters are distinguished from journal articles by the lack of an issue number and if the partauthor field isn’t filled in, or if the control number looks like an ISSN rather than an ISBN. It probably isn’t perfect but hopefully it will get 90% of the items right. And as long as most of the rest look OK when rendered, most folk won’t notice anyway!
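Here is a rough Python sketch of the kind of hint-based test involved, for items that are part of a larger work. Only the issue number and control number hints are used, and the order in which they are applied is an assumption. The ISSN and ISBN patterns check shape only, not check digits, and the `issue` and `control` field names are hypothetical.

```python
import re

ISSN_RE = re.compile(r"\d{4}-?\d{3}[\dX]", re.IGNORECASE)
ISBN_RE = re.compile(r"\d{9}[\dX]|\d{13}", re.IGNORECASE)


def looks_like_issn(control):
    return bool(ISSN_RE.fullmatch(control.strip()))


def looks_like_isbn(control):
    digits = control.replace("-", "").replace(" ", "")
    return bool(ISBN_RE.fullmatch(digits))


def classify_part(record):
    """Guess whether a part-of-a-larger-work item is a chapter or an article.

    record: dict of old LORLS fields; 'issue' and 'control' are assumed keys.
    """
    control = record.get("control", "")
    if looks_like_issn(control):
        return "journal article"
    if looks_like_isbn(control):
        return "book chapter"
    if record.get("issue"):
        return "journal article"
    return "book chapter"
```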

Acting as other users

We realised that we need to allow members of the Admin group to be able to act as other users. For example Moodle won’t know the user’s AD login information but we still want LUMP to allow the Moodle logged in user to create, edit, delete and view LUMP objects as themselves (rather than just as a default Moodle user). Thus we need to allow admin users to log in with their own credentials (using one of the supported authentication mechanisms) and then switch to act as another user. In the web API this is achieved by passing an “act_as” CGI parameter filled in with the username that the script should appear to be running as. Currently only members of the Sysadmin group will have this power (so the Moodle block will need to be included as “trusted” code).
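A minimal Python sketch of the act_as check, assuming the script already knows who actually authenticated and can look up that user's groups (`groups_of` and `effective_user` are hypothetical names). Only members of Sysadmin may switch identity; anyone else asking to gets an error.

```python
from urllib.parse import parse_qs


class PermissionDenied(Exception):
    pass


def effective_user(authenticated_user, query_string, groups_of):
    """Work out which user the request should run as."""
    params = parse_qs(query_string)
    act_as = params.get("act_as", [None])[0]
    if act_as is None or act_as == authenticated_user:
        return authenticated_user
    if "Sysadmin" not in groups_of(authenticated_user):
        raise PermissionDenied(f"{authenticated_user} may not act as {act_as}")
    return act_as
```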

Why formatting Perl is held in the database

The current ER model includes tables that hold formatting information in order to allow the layout of reading lists in different formats (HTML, BibTeX, Refer, etc). This formatting information is currently planned to be snippets of Perl code, allowing some serious flexibility in formatting the output.

However the question is, “why is this code in the database?”. Here at Loughborough we could just as easily have it stored in the filesystem along with all the other Perl code for the back end. The reason for having the Perl fragments held in the database is that it will potentially make life easier at other institutions, where there is a separation between the folk who run servers and install the software, and the folk who actually manage the library side of the database. In some cases getting software updates loaded on machines can be tricky and time consuming, so having the formatting code held in the database will allow the library administrators to tweak it without having to jump through hoops.
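The plan is for the stored snippets to be Perl code. The Python sketch below deliberately swaps that for plain format strings held in a hypothetical `format_template` table, to show the operational point rather than the exact mechanism: changing a row in the database changes the output, with no software update on the server.

```python
import sqlite3


def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("""CREATE TABLE format_template (
        sut TEXT, format TEXT, template TEXT, PRIMARY KEY (sut, format))""")
    conn.executemany("INSERT INTO format_template VALUES (?, ?, ?)", [
        ("book", "html", "<i>{title}</i>, {author}. {publisher}."),
        ("book", "refer", "%A {author}\n%T {title}\n%I {publisher}"),
    ])
    return conn


def render(conn, sut, fmt, fields):
    """Fetch the template for this SU type and output format, then fill it in."""
    row = conn.execute(
        "SELECT template FROM format_template WHERE sut = ? AND format = ?",
        (sut, fmt)).fetchone()
    if row is None:
        raise KeyError(f"no {fmt} template for {sut}")
    return row[0].format(**fields)
```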

REST versus SOAP

We want LUMP to have a well separated client-server architecture, so that we can deploy several different types of front end and also so that third party developers can implement their own front (and back!) ends independently. We’ve pretty much decided on using XML to return the results (as most client programming environments have some sort of XML handling these days, and if the worst comes to the worst there are network XSLT transformation services that can turn the XML into renderable HTML). However we needed to decide how to send requests from the client to the server in the first place.

The initial plan was to have clients lovingly hand-craft XML documents for the request and then send these as a single parameter to a CGI script. This XML document would contain all the authentication, version and other metadata for the LUMP protocol, as well as the method being requested and any parameters required. It would work OK, but meant that the clients needed to generate an XML document, even for a relatively simple request.

Next we considered the Simple Object Access Protocol (SOAP). SOAP basically does the XML packaging of parameters and results in a standardised manner that is supposed to allow different programming languages on different platforms to remotely access objects and methods as though they were part of the local application. That’s the theory at least. SOAP works better when coupled with the Web Services Description Language (WSDL), but that’s also where things start to come unravelled.

Firstly WSDL appears to have real difficulties in handling dynamic data structures. Most of the examples of WSDL on the web pass a string or two into a routine and then get out a single string, floating point number or integer. But we’d like to return far more complex data structures such as nested hashes of hashes, arrays of hashes, etc. We could describe those in terms of an XML DTD for our hand-crafted responses but not easily in WSDL for use with SOAP. WSDL also imposes an extra transfer overhead on clients and/or requires that the client cache the WSDL file (which is a pain during development, when the WSDL would normally be in constant flux and thus uncachable).

There were also some practical problems with SOAP. The main development of the LUMP server is likely to be in Perl and, whilst there is a SOAP Perl module available, it’s nowhere near as well developed as many of the other Perl modules available. Secondly we found that sometimes we could get SOAP to work with, say, Perl, but then not work with PHP (PHP being the other major target language, this time for the Moodle client block). Fixing access for the PHP client could then cause problems for the Perl client. Why things sometimes worked and sometimes didn’t wasn’t particularly clear, so this didn’t show SOAP to be particularly platform independent. And debugging the SOAP transactions was also very hard – even with debugging turned on in the SOAP libraries the interactions can be very complicated, and you end up having to sit with the SOAP specification trying to work out which bits of the libraries that were supposed to make life easy for you were actually making it much harder!

So we opted for a third choice, and one we were already quite familiar with: REpresentational State Transfer (REST). APIs that use REST (so called “RESTful” APIs) make use of the existing web infrastructure such as HTTP, SSL, web server authentication technologies and CGI scripts. A RESTful API uses a base URI pointing at some sort of program (such as a CGI script or mod_perl instance) combined with URI query parameters to specify the operation required, user authentication information, request details, etc. Many programming environments have built-in support for accessing URIs and so these RESTful APIs can reuse existing technologies without needing new, complex and potentially buggy libraries. Generating the URIs is relatively simple and straightforward in most languages.

The results of a REST transaction can be anything you like, so there was no reason why they couldn’t be the sort of XML result documents that we looked at originally. This gave the results some platform neutrality, whilst still allowing us to return what were effectively dynamic data structures. It also means that you can still easily do funky things with XSLT if you want to. Clients can process returned XML fairly easily these days (as evidenced by the AJAX mash-ups out there) but they wouldn’t need to go through the overhead of generating a new XML document for the outgoing request itself (unless it was required for a complex parameter perhaps – that’s flexibility for you!).
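To illustrate the client side, here is a Python sketch that turns an XML result into nested dictionaries using the standard library's `xml.etree.ElementTree`, the kind of dynamic nested structure that was awkward to describe in WSDL. The element and attribute names in `SAMPLE` (`lump`, `su`, `de`, `type`, `id`) are invented for the example; the real LUMP response format may differ.

```python
import xml.etree.ElementTree as ET

# Hypothetical response: a reading list SU containing one book SU.
SAMPLE = """<lump version="1">
  <su id="42" type="reading list">
    <de type="title">Networks 101</de>
    <su id="43" type="book">
      <de type="title">Computer Networking</de>
      <de type="author">Andrew S. Tanenbaum</de>
    </su>
  </su>
</lump>"""


def su_to_dict(el):
    """Recursively convert an <su> element into nested Python structures."""
    data = {}
    for de in el.findall("de"):
        data.setdefault(de.get("type"), []).append(de.text or "")
    return {
        "id": int(el.get("id")),
        "type": el.get("type"),
        "data": data,
        "children": [su_to_dict(child) for child in el.findall("su")],
    }


def parse_response(xml_text):
    root = ET.fromstring(xml_text)
    return [su_to_dict(su) for su in root.findall("su")]
```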

RESTful APIs also have a big conceptual win over a SOAP based API: the interface gets real URLs for each request. This means that not only can you retrieve object details using a suitably crafted URI, but you can also create, update and delete them (assuming you have the rights to, obviously). Having such URIs available means that the other parts of the W3C’s web standards development dovetail in nicely with RESTful APIs: things like RDF and XPointer only really become useful if they can reference resources using URIs.

Having chosen (for the moment!) REST as the API mechanism, there appeared to be one decision still outstanding: where to draw the line between the “base” URI and the parameters. One option is to have a single base URI for all operations and then a parameter (amongst others) that describes the required operation. For example a FindSuid call might look something like:

http://example.com/cgi-bin/LUMP?operation=findsuid&what=Module&aspect=Module%20Code&value=08LBA100

The other option is to have different base URIs for each of the “methods” that the API has. For example the base URI for the above operation might be:

http://example.com/cgi-bin/LUMP/FindSuid

and then we’d add parameters to the end of that, giving a URI of:

http://example.com/cgi-bin/LUMP/FindSuid?what=Module&aspect=Module%20Code&value=08LBA100
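Both URI styles are easy to generate on the client. This Python sketch reproduces the two FindSuid examples above with `urllib.parse.urlencode`; passing `quote_via=quote` makes spaces come out as `%20` rather than `+`. The helper names are hypothetical.

```python
from urllib.parse import quote, urlencode

BASE = "http://example.com/cgi-bin/LUMP"


def single_base_uri(operation, params):
    # One base URI; the operation travels as just another parameter.
    return BASE + "?" + urlencode({"operation": operation.lower(), **params}, quote_via=quote)


def per_method_uri(method, params):
    # One base URI per method; only the method's own parameters are appended.
    return f"{BASE}/{method}?" + urlencode(params, quote_via=quote)


params = {"what": "Module", "aspect": "Module Code", "value": "08LBA100"}
```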

There’s not really much in it as far as we can see at the moment. On the one hand having a single base URI might mean that the client’s configuration is very, very slightly simpler. On the flip side having separate URIs might translate to having lots of small, simple CGI scripts, as opposed to a single monolithic script. The small scripts might or might not be easier to maintain, depending on how much code reuse and modularisation we can come up with. Also if we use something like mod_perl with the scripts to speed things up, the smaller units will hog less memory in the web server, take less time to get running and can be updated with less impact on a running service.

Either of these would appear to be RESTful as they both result in workable URIs. Luckily we can have our cake and eat it in this case. If we opt for individual URIs for each method then we can take advantage of the back end benefits, but also then use existing web technologies to make them appear as a single monolithic interface with a single base URI and an “operation” parameter if we wish to. This could either be through a wrapper CGI script or one of the web server URL rewriting technologies (though Apache may have issues with rewriting parameters).
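A wrapper of the first kind could be as simple as a dispatch table keyed on the “operation” parameter. In this hypothetical Python sketch, `find_suid` stands in for the separate FindSuid script and just echoes the parameters it receives.

```python
from urllib.parse import parse_qs


def find_suid(params):
    # Stand-in for the separate FindSuid script.
    return f"FindSuid({params['what'][0]}, {params['aspect'][0]}, {params['value'][0]})"


HANDLERS = {"findsuid": find_suid}


def dispatch(query_string):
    """Route a single-base-URI request to the per-method handler."""
    params = parse_qs(query_string)
    operation = params.pop("operation", [""])[0].lower()
    handler = HANDLERS.get(operation)
    if handler is None:
        raise LookupError(f"unknown operation: {operation!r}")
    return handler(params)
```

Adding a method then means adding one script and one table entry, while clients that prefer a single base URI see no difference.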

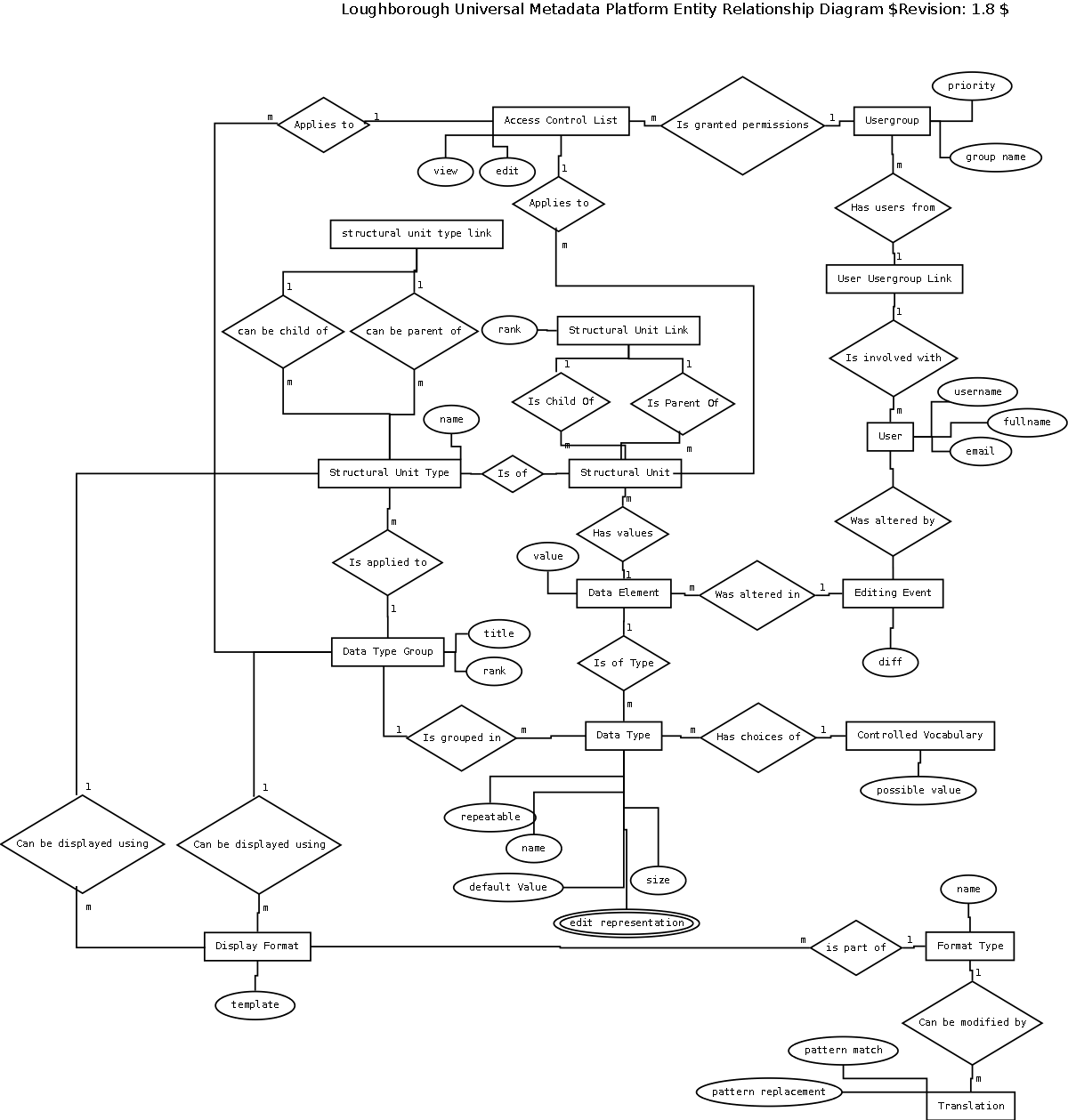

Data Structure Design

With LUMP what we’d like to achieve is a far more flexible database design than the previous versions of the LORLS reading list system. Traditionally we’ve modelled the database structure and the resulting relations on a fairly restricted model of book-like objects, and also had to work round limitations in the way reading lists were used. For example at Loughborough we were initially told that a module would have a single reading list. This proved to not always be true – sometimes a module would be taught by more than one lecturer and so we had to introduce the “bodge” of sub-lists linked to the main one. As other institutions used LORLS we also found that our way of linking reading lists to modules to courses to departments did not always match up with other organisations’ structures.

So we thought it was time for a rethink. We wondered if it was possible to come up with a generic data container that could be a reading list item, a reading list itself, a module, a course, a department, a faculty or indeed anything you like. We called these containers “structural units” and decided that they could contain metadata and/or other structural units. The more we thought about structural units, the more we realised that a generic concept such as this could allow great flexibility in a reading list system, and possibly also form the basis for other systems.

However it was also clear that we needed more than just the structural units themselves. For one thing it was felt to be advantageous for all structural units to be grouped by type, such as “reading list”, “module”, “department”, “book”, “video”, “3d holographic projection”, etc. The typing system would allow a set of custom metadata elements to be defined that would be available to all structural units of the given type. For example a “department” structural unit might have metadata of a department name, a URL pointing to a departmental website and another URL pointing to a departmental logo. A “book” on the other hand would have the normal bibliographic and holding information metadata that we have used in LORLS for some time.

Each piece of metadata itself has a type, and a value. The value is the actual piece of metadata (“Andrew S. Tanenbaum”, or “Computer Networking”, or “Prentice-Hall”, etc for a book for example). The data type on the other hand tells us information about whether this piece of metadata is repeatable or not (you might only allow one record of holding information but allow any number of authors for example), where it is ranked in the output, and how it is to be represented for editing (string, integer, date, etc). The data type can also be linked to a controlled vocabulary if required to limit the choices available for associated data values.

Structural unit types could also be linked to one another as parents and children, so that we could enforce certain hierarchical or mesh layouts on structural units of given types. For instance a structural unit type of “department” is a child of a type called “faculty”, so only structural units of type “faculty” can be a parent of a “department” typed structural unit.
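The relationships described so far can be sketched as a few Python dataclasses. This is an illustrative in-memory model only, not the LUMP schema: class and field names are assumptions, and controlled vocabularies and output ranking are left out. It does enforce the two rules discussed above: non-repeatable data types hold at most one value, and an SU can only sit under an SU whose type is an allowed parent type.

```python
from dataclasses import dataclass, field


@dataclass
class DataType:
    name: str
    repeatable: bool
    rank: int                # position in rendered output
    edit_as: str = "string"  # e.g. string, integer, date


@dataclass
class SUType:
    name: str
    data_types: dict = field(default_factory=dict)   # DT name -> DataType
    parent_types: set = field(default_factory=set)   # names of SUTs allowed as parents


@dataclass
class StructuralUnit:
    sut: SUType
    metadata: dict = field(default_factory=dict)     # DT name -> list of values
    children: list = field(default_factory=list)

    def add_value(self, dt_name, value):
        dt = self.sut.data_types[dt_name]
        values = self.metadata.setdefault(dt_name, [])
        if values and not dt.repeatable:
            raise ValueError(f"{dt_name} is not repeatable")
        values.append(value)

    def add_child(self, child):
        if self.sut.name not in child.sut.parent_types:
            raise ValueError(f"a {child.sut.name} cannot be placed under a {self.sut.name}")
        self.children.append(child)
```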

The data structure also needs to encompass information about the ownership of structural units, and the permissions users have to access and/or alter them. Users could be part of one or more usergroups, and the groups have access controls applied to them for access both to structural units themselves and also editing sections (the name of which was hotly debated for several weeks but which is currently “Data Type Groups”!). The editing sections allow us to group data types that are “similar” together, thereby allowing such things as tabbed editing for a single structural unit, with each tab holding a different sort of metadata (holdings, bibliographic, etc).

We wanted to allow structural units to be rendered in a variety of forms (HTML, BibTeX, RefWorks, etc) and had to decide whether this was a client or server side task. We decided on doing it on the server side as it meant that a single set of code at the server could then produce output for a larger number of simpler clients. It also meant that new or changed output formats only required changes to be made once at the server end, rather than in all the clients. For flexibility we could still return structural unit information to clients in “raw” XML so that they could process it themselves, or to allow them to provide editing features.

The current database ER diagram is: