Reading Lists: Friend or Foe?

The title above refers to a half-day WESLINK event on reading lists, which I was lucky enough to be invited to on Wednesday. The event was hosted at Keele (big shout out to my SatNav for finding the place). It started with a buffet lunch, followed by presentations from three institutions on how they currently manage their reading/resource lists:

- Spreadsheets at Keele University

- Talis List at the University of Birmingham

- LORLS at Loughborough University (by yours truly).

The day finished with a group discussion. One of the main things I picked up from this was the difference in approach as to who edits the reading lists. Some sites were strongly in favour of the library being responsible for all editing of online reading lists. At Loughborough we’ve always been keen for academics to edit the lists themselves; after all, they are the intellectual creators of the lists.

It also seems very inefficient for the library to re-enter information into a system when the academics are most likely already doing this (even if they are just using Word). Ideally, if the information can be entered just once, by the academic into the online system, this should free up time for all concerned: library staff no longer act as data entry clerks and academics no longer have to check others’ interpretation of their work.

However, in order for this to happen the system needs to be as easy as possible for the academic to use. This is why, as part of LORLS, we’ve been developing BibGrab.

Access Control Lists

We’ve been head scratching about access control lists, which have proved more complicated than originally anticipated. The trouble comes from working out how to do inheritance (so that we don’t need thousands of similar ACLs differing only in their SUID) and also how to set the initial ACLs for a SU that has been newly created.

Current thinking is as follows:

The existing access_control_list table will be left as is, but will be used in a slightly different way. To find out if a user has rights to something, we’ll first search through the table for all rows containing a user group that the user is in, keeping only those from the group with the maximum priority. The guest usergroup has a priority of 0, general registered users 1, module support staff 170, module tutors 180, module library assistants 190, module librarians 200 and sysadmins 255.

If no rows match, game over: the user doesn’t have any sort of access. If rows are returned, we then look at the SUID field, and potentially the DTG_ID field if we’ve had one specified. Rows with no SUID or DTG_ID have the lowest precedence, then ones with just a SUID provided, with ones that have both SUID and DTG_ID at the top. At this point we can look at the view and edit fields to see what folk are allowed to do.
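As a rough illustration of the lookup just described, here is a sketch that works on an in-memory array of ACL rows rather than the real access_control_list table. The field names (usergroupId, priority, suId, dtgId, view, edit) and the function names are assumptions made for the example, not the LUMP schema or API. It follows the order in the text literally (highest priority group first, then specificity), and it also ranks a row that has only a DTG_ID between the wildcard row and the SUID-only row, which is a guess on our part.

```javascript
// Hypothetical in-memory ACL lookup; all field and function names are assumptions.
function specificity(row) {
    // none = 0, DTG only = 1, SU only = 2, SU and DTG = 3
    return (row.suId !== null ? 2 : 0) + (row.dtgId !== null ? 1 : 0);
}

function resolveAccess(aclRows, userGroupIds, suId, dtgId) {
    // Step 1: rows belonging to any group the user is in.
    var mine = aclRows.filter(function (r) {
        return userGroupIds.indexOf(r.usergroupId) !== -1;
    });
    if (mine.length === 0) { return null; } // game over, no access
    var top = Math.max.apply(null, mine.map(function (r) { return r.priority; }));

    // Step 2: keep only the highest priority rows that apply to this SU/DTG.
    // A null suId or dtgId in a row acts as a wildcard.
    var applicable = mine.filter(function (r) {
        if (r.priority !== top) { return false; }
        if (r.suId !== null && r.suId !== suId) { return false; }
        if (r.dtgId !== null && r.dtgId !== dtgId) { return false; }
        return true;
    });
    if (applicable.length === 0) { return null; }

    // Step 3: the most specific row decides view/edit.
    applicable.sort(function (a, b) { return specificity(b) - specificity(a); });
    return { view: applicable[0].view, edit: applicable[0].edit };
}
```

Pass null for dtgId when no data type group has been specified; a null result means the user has no access at all.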

We might also need to allow folk to edit ACLs. We’ll let them edit ACLs of any group with a lower priority than the one that they are in, provided the ACL mentions a SUID and/or DTG_ID that they have edit rights to. The only exception is Sysadmins – we can edit our own ACLs and can also edit ACLs that have a NULL (ie wildcard) SUID or DTG_ID. We will also disallow the creation of new ACLs that have the same <usergroup_id, su_id, dtg_id> triple as an existing one, so that we don’t end up with two rules with different edit/view options at the end of the access validation algorithm.
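The duplicate triple rule is simple enough to sketch. The function below is only an illustration over an in-memory array; the field names and the function name isDuplicateAcl are made up for the example.

```javascript
// Hypothetical check for the <usergroup_id, su_id, dtg_id> uniqueness rule.
// null stands for a wildcard (NULL) SUID or DTG_ID.
function isDuplicateAcl(existingRows, candidate) {
    return existingRows.some(function (row) {
        return row.usergroupId === candidate.usergroupId &&
               row.suId === candidate.suId &&
               row.dtgId === candidate.dtgId;
    });
}
```

One reason to do this check in code: in many SQL databases a plain UNIQUE index treats NULLs as distinct from each other, so the index on its own would probably not stop two wildcard rows being created for the same user group.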

When a new SU is created, we’ll need to add some rights to it. These initial rights will have user groups inherited from the parent SU, but this is where things get tricky: as we’ve discovered we can’t just copy the ACLs, because child SUs will in general be of a different SUT than their parents.

To get round this we’ve proposed two new tables. The first of these is “acl_defaults”. This links user group priorities with SUT_IDs, DTG_IDs and default “view” and “edit” settings. The second table is less important and more for UI “gloss” when creating/editing default ACLs – it is merely a list of priorities (as its ID field) and a name – so that we know that (for example) priority 170 is assigned to user groups for Support Staff.

The process when a new SU is created is as follows: for each usergroup that has an ACL in the parent SU, take that usergroup’s priority and look up the row(s) in the acl_defaults table that match both that priority and the SUT_ID of the new SU. These rows are then used to create new rows in the access_control_list table with the specific SUID for the new SU filled in (and, if specified in acl_defaults, the value for DTG_ID as well).
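To make that process concrete, here is a small sketch using plain arrays in place of the access_control_list and acl_defaults tables. The field names, the shape of the newSu object and the function name buildInitialAcls are all assumptions for illustration; the real thing would be done in SQL and Perl on the server.

```javascript
// Hypothetical sketch: build the initial ACL rows for a newly created SU.
// parentAcls:  ACL rows of the parent SU, each with usergroupId and priority.
// aclDefaults: rows with priority, sutId, dtgId (or null), view and edit.
// newSu:       { id: <new SUID>, sutId: <its structural unit type> }.
function buildInitialAcls(parentAcls, aclDefaults, newSu) {
    var seenGroups = {};
    var newRows = [];
    parentAcls.forEach(function (acl) {
        // Handle each usergroup once, however many ACLs it has on the parent.
        if (seenGroups[acl.usergroupId]) { return; }
        seenGroups[acl.usergroupId] = true;

        aclDefaults.forEach(function (def) {
            if (def.priority === acl.priority && def.sutId === newSu.sutId) {
                newRows.push({
                    usergroupId: acl.usergroupId,
                    priority: acl.priority,
                    suId: newSu.id,
                    dtgId: def.dtgId, // stays null if the default names no DTG
                    view: def.view,
                    edit: def.edit
                });
            }
        });
    });
    return newRows;
}
```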

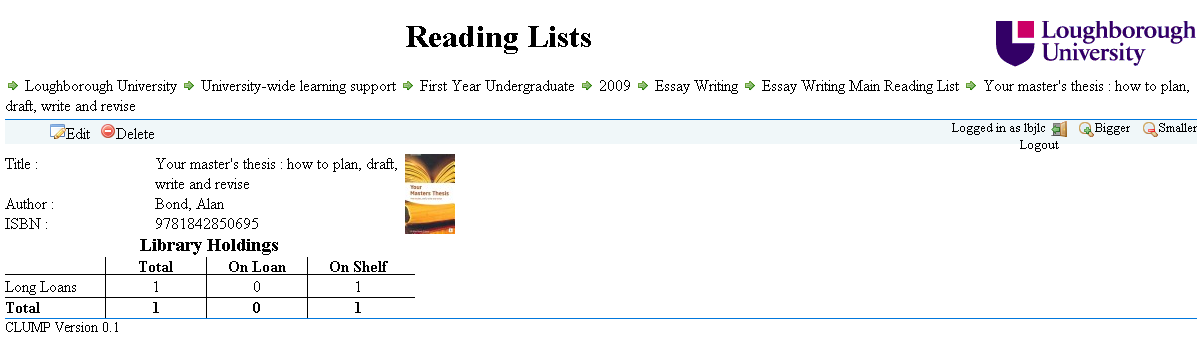

Showing Current Availability Of Items

I have been working on getting CLUMP to pull information out of our LMS (Aleph) so that users can easily see which books from reading lists are currently available in the Library. The result of the work is shown in the following screen shot.

The JavaScript in CLUMP has to make some AJAX requests to Aleph’s X-Server to pull out the information. This required another proxy script on the same host as CLUMP to get around JavaScript’s security model (the same-origin policy). One advantage of using a proxy is that you can put the username and password for your X-Server account in there and not give it to every user, not that they should be able to access any more information with it than with the default X-Server account. Always best to be careful though.
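The credential handling part of such a proxy might look something like the sketch below. This is not our actual proxy script; it is a JavaScript illustration of the idea, using the standard URL and URLSearchParams classes. The function name buildUpstreamUrl and the config layout are invented for the example, and the real parameter names for the account details need to come from your X-Server documentation. The whitelist of forwarded parameters is an extra precaution that the text above doesn’t mention.

```javascript
// Hypothetical sketch: turn a browser request's query string into the URL
// that the proxy will actually fetch from the LMS.
// config.baseUrl       - address of the LMS service (assumed)
// config.allowedParams - names the browser is allowed to pass through
// config.credentials   - { paramName: value } pairs added server side
function buildUpstreamUrl(config, clientQuery) {
    var incoming = new URLSearchParams(clientQuery);
    var upstream = new URL(config.baseUrl);

    // Forward only the whitelisted parameters.
    config.allowedParams.forEach(function (name) {
        if (incoming.has(name)) {
            upstream.searchParams.set(name, incoming.get(name));
        }
    });

    // Add the account details here so the browser never sees them.
    // Anything the client sent under the same names is overwritten.
    Object.keys(config.credentials).forEach(function (name) {
        upstream.searchParams.set(name, config.credentials[name]);
    });

    return upstream.toString();
}
```

The proxy would then fetch that URL itself and hand the response back to the browser unchanged.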

As we are trying to make sure that LORLS isn’t tied specifically to any one LMS, the JavaScript used to pull out the current stock status is in a file on its own. This file can be replaced with one that contains code for getting the same information from another LMS.
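One way to picture the swappable file is as an object with an agreed shape that the rest of the client calls without knowing which LMS is behind it. The sketch below is an assumption about how that could be arranged, not the actual CLUMP code; the names exampleLmsAdapter, getStockStatus and describeAvailability are invented.

```javascript
// Hypothetical adapter: each LMS-specific file would define an object like this.
var exampleLmsAdapter = {
    name: 'example',
    // Looks up one item and calls back with (error, { available, total }).
    getStockStatus: function (itemId, callback) {
        // A real adapter would make an AJAX request via the proxy here.
        callback(null, { available: 0, total: 0 });
    }
};

// LMS-independent code: it only relies on the adapter's shape.
function describeAvailability(adapter, itemId, callback) {
    adapter.getStockStatus(itemId, function (err, status) {
        if (err) {
            callback('Availability unknown');
            return;
        }
        callback(status.available + ' of ' + status.total + ' copies available');
    });
}
```

Supporting another LMS then means supplying a different object with the same getStockStatus function, leaving the display code alone.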

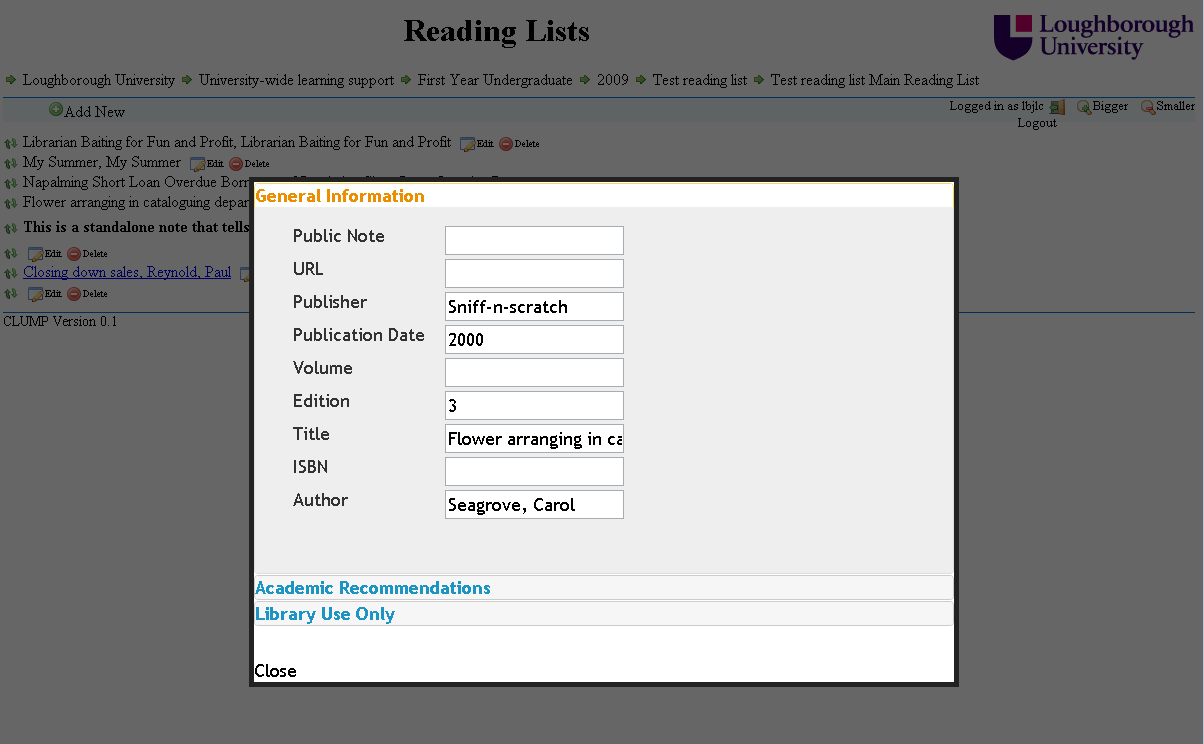

Editing Via CLUMP

CLUMP has been progressing nicely this past month and we have now got it displaying items ready for editing. This first required the ability to log users in, as the guest user hasn’t been given permissions to edit anything. Once logged in, the user is presented with the options to edit/delete items that they have permissions to edit.

Another new feature is the ability to reorder lists using drag and drop. The reorder APIs aren’t implemented in LUMP yet, but the code is all in place in CLUMP, so once they are it should be quite easy to hook the two together.
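For the curious, the bookkeeping behind a drag and drop reorder can be sketched as below. This is not the CLUMP code: moveItem and changedRanks are invented names, and the idea of sending only the changed ranks to the server is an assumption about what a reorder API might want.

```javascript
// Hypothetical helper: return a copy of the list with one item moved.
function moveItem(items, fromIndex, toIndex) {
    var copy = items.slice();
    if (fromIndex < 0 || fromIndex >= copy.length ||
        toIndex < 0 || toIndex >= copy.length) {
        return copy; // ignore drops outside the list
    }
    var moved = copy.splice(fromIndex, 1)[0];
    copy.splice(toIndex, 0, moved);
    return copy;
}

// Hypothetical helper: list the items whose position changed, with new ranks.
function changedRanks(before, after) {
    var changes = [];
    after.forEach(function (id, rank) {
        if (before[rank] !== id) {
            changes.push({ id: id, rank: rank });
        }
    });
    return changes;
}
```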

My First Real Client

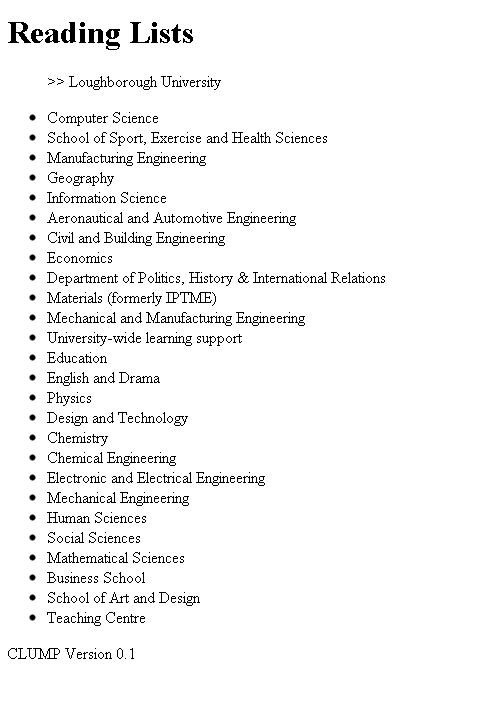

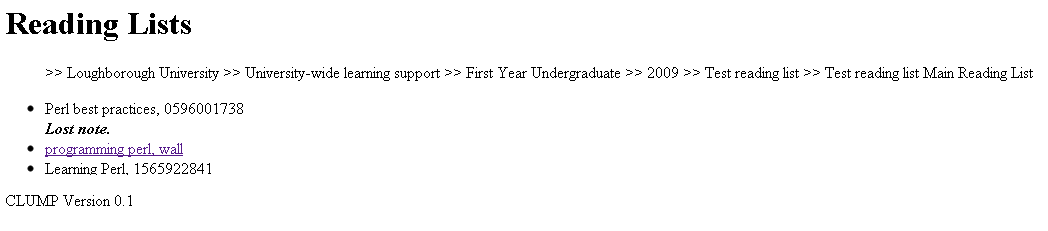

Well today I have got the first version of CLUMP (Client for LUMP) at a stage where I felt it was actually usable. Currently it only displays entries visible to the guest user. All the data is pulled into CLUMP using AJAX.

Here is what a sample reading list currently looks like.

Here is a screen shot of an individual item entry in a reading list.

As you can see it is functional and not very graphical, though book covers from Google Books are displayed at the item level where available. I am sure that over time this will become much more aesthetically pleasing and start to look more like 2010 than 1995.

Client Performance

In the last month we’ve been getting to grips with client side coding. This has thrown up a few XML API calls that we decided we wanted, or that needed tweaking (such as checking authentications without returning anything from the database, listing all the structural unit types available and allowing substring searches in FindSUID). It has also given us pause for thought on the performance of the system.

The old LORLS database was pretty lean and mean: the front end code knows about the structure of the data in the database and there are relatively few tables to handle. LUMP is more complex and thus more searches have to be done. Also, by having an XML API we’re that bit more removed and there’s more middleware code to run. We could just say “hang it” and ignore the XML API for a CGI based client, which might speed things up. However we’ve been trying to “eat our own dogfood” and use the APIs we’re making available for others.

Some of the speed hacks for the imports won’t work with the clients – for example the CGI scripts that implement the XML API are short lived so the caching hacks wouldn’t work, even if the clients all did tend to ask for the same things (which will in fact be the case for things like the Institution, Faculty, Department, etc information). One avenue that we could pursue to help with this is mod_perl in Apache, so that the CGI scripts are turned into much longer lived mod_perl scripts that could then benefit from caching.

We’ve currently ended up with a sort of three pronged approach to client code:

- Jimll is writing a CGI based LUMP client that makes minimal use of JavaScript (mostly for the Google Book Search fancy pants bit really),

- Jason has a basic PHP Moodle client to demonstrate embeddability,

- Jason is also writing an AJAXy client side tool.

All of these use the XML API. I guess we should also have a non-XML API client as a benchmark as well – the CGI based LUMP client could do that relatively easily in fact. Something to think about if performance really does turn out to be a dog.

We’ve also been considering the usefulness of the Display Format stuff held in the database. The CGI based LUMP client and the PHP Moodle client both use this. However the AJAX client retrieves raw XML documents from the server and then renders them itself. This might be a bit faster, but it does mean that the client becomes tied to the structure of data in the database (ie add a new Structural Unit Type and you might need to dick about with your client code(s) as well).

How Best To Debug JavaScript

While starting to look at creating an AJAX client for LUMP I have come up against the old problem of how best to debug the code. Depending on the browser being used, debugging can be very easy or very difficult. Firefox, Google Chrome, etc. all give access to JavaScript consoles either natively or via a plug-in like Firebug. The problem comes with Internet Explorer (IE), which is very weak on the debugging side of things.

There is Firebug Lite, a cut down version of Firebug that you can add into the web page HTML. The problem with this is that it then also appears in your other browsers and sometimes seems to cause problems with them. I don’t want to have code in my client that says “if you have this browser then do this, and if you have that browser do that”, as it makes it difficult to maintain.

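The solution that I am using is the following bit of code at the start:

```javascript
// If the browser has no console object (e.g. IE), create a minimal stand-in.
if (typeof console === 'undefined') {
    window.console = {
        // Fall back to alert() for the two calls used most often.
        log: function (x) { alert(x); },
        warn: function (x) { alert(x); }
    };
}
```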

Quite simply, it looks to see if there is a console object available and, if not, creates a simple one which supports log and warn, the two debugging statements that I use most. If there isn’t a console to log to, all log and warn messages will appear as alerts to the user. This can be annoying in IE, but it is usually only IE specific problems that I am trying to debug in IE.

Speeding up the imports

A while since the last update and that’s mostly because we’ve been banging our heads against a speed issue for the import of the old LORLSv5 reading lists into LUMP.

The first cut of the importer seemed to work but had barfed due to lack of disc space on my workstation before completing the run. For dev/testing the rest of the API that was fine though, as we had enough data to play with. It was only when we installed a new virtual hosting server and I created myself a dedicated test virtual server with oodles of RAM and disc space that we discovered that the import would work… but it would take around two weeks to do four years’ worth of data. Ah, not good. Especially as the virtual host is supposed to be relatively big and fast (OK, it’s a virtual server so we can’t count spindles directly as the filesystem is stuffed inside another file on the RAID array on the host, but it should still be plenty fast enough for LUMP to run, otherwise folk with older server hardware are going to be stuffed).

We’ve tried a number of different options to help sort this out. These have included:

- Search caching in Perl

  - Tweaking the BaseSQL module to allow a Perl hash based cache to be turned on and off (and the status of caching checked). This is then used by some of the higher layer modules that encapsulate a few of the tables (StructuralUnit, DataElement, DataType, DataTypeGroup) to see if a search matches a previous search and, if caching is turned on, return the results immediately from the Perl hash without hitting the database. Any update to the table in question invalidates the cache. Reading the cached copy is much faster than accessing the database and so this can be a big win, especially on tables where there are relatively infrequent updates. Unfortunately we do quite a bit of updating on the StructuralUnit and DataElement tables.

- A reload() method

  - Quite a lot of the time we create new Perl objects on a database table to do (for example) a search and then later have to do another new() on the same object to instantiate it with an existing row from the table (based on the id field). Every new() method reinterrogates the database to find out the fields for the table concerned and then recreates the Perl object from scratch. However the fields are unlikely to change from call to call (certainly during an import) so this is just wasted time. A reload() method has been added so that you can instantiate the object from a known row in the database via the id field without having the whole Perl object regenerated or the database queried for the fields available. This results in a slight but noticeable saving.

- Adding noatime and nodiratime mount options

  - Normally on a Linux ext3 filesystem (which is what we’re running), the access time of a file or directory is updated each time it is accessed. Of course that means that every SQL SELECT is effectively also a write on the filesystem. The noatime and nodiratime directives to mount (slipped into /etc/fstab) turn this behaviour off. You don’t even have to reboot for it to come into effect – the mount -o remount / command is your friend! This should remove another disk related bottleneck during the import.

- Tweaking the ACL CopyRights() method

  - Every time a row is inserted into the structural_unit table for a new SU, several rows get stuck into the data_element and access_control_list tables. Looking at the latter, during the import many of these rows are created as a result of the CopyRights() method on the AccessControlList Perl object. This method allows several fancy options such as overwriting existing ACLs and cascading ACLs from a parent to all its children. Neither of these directly applies in the case of building a new SU and copying the access rights from its direct parent, yet we still had to do a load of SQL to support them. Therefore a new parameter called “new” was added to the method to indicate that the ACLs were being copied for a new SU, which allowed some short cutting. One part of this short cutting was to use a single INSERT INTO...SELECT FROM... SQL construct. This should be fast because it is just copying internally in the database engine (using a temporary table, as the source and target tables are the same in our case) and doesn’t need to have results sent to/from the Perl script. This appears to be quite a big win – performance with this and the previous two tweaks now hits 1000+ SUs and associated data being created every 10 minutes or so.
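The search caching in the first item of the list is the easiest of these to show in miniature. The real code lives in the Perl BaseSQL module; the sketch below only mirrors the idea in JavaScript (to match the other code on this blog), and the SearchCache name, its methods and the runQuery callback are all invented for the illustration.

```javascript
// Hypothetical sketch of a per-table search cache that can be switched on and off.
function SearchCache() {
    this.enabled = false;
    this.store = {}; // table name -> { serialised criteria -> results }
}

SearchCache.prototype.setEnabled = function (on) {
    this.enabled = !!on;
    if (!this.enabled) { this.store = {}; } // drop stale data when turned off
};

SearchCache.prototype.isEnabled = function () {
    return this.enabled;
};

// runQuery is whatever actually hits the database; it is only called on a miss.
SearchCache.prototype.search = function (table, criteria, runQuery) {
    if (!this.enabled) { return runQuery(); }
    // Note: JSON.stringify is sensitive to property order in criteria.
    var key = JSON.stringify(criteria);
    var tableCache = this.store[table] || (this.store[table] = {});
    if (!Object.prototype.hasOwnProperty.call(tableCache, key)) {
        tableCache[key] = runQuery();
    }
    return tableCache[key];
};

// Call this after any INSERT, UPDATE or DELETE on the table.
SearchCache.prototype.invalidate = function (table) {
    delete this.store[table];
};
```

Throwing away the whole table’s cache on any update is crude but safe, and it shows why tables that are updated a lot, as ours are during the import, see less benefit.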

Whilst these tweaks look to be making bulk importing of data from LORLSv5 more manageable, it remains to be seen whether they make the performance of interactive editing and access acceptable. Hopefully now I can get back to making a non-Moodle front end and we’ll see!

Second meeting with Talis

When I met with Mark and Ian from Talis back in January they’d suggested hosting a follow-up meeting later in the year. So yesterday when I went to visit them I was expecting it to be somewhat of a “blast from the past” what with me being an ex Talis customer. But everything was different: new offices, lots of new staff and lots of new ideas.

Ian played host and introduced me to Chris, a lead developer for Talis Aspire who went on to give me a demonstration of the system which I must admit is very impressive. I wasn’t able to reciprocate with an online demo of LORLS as we haven’t yet knocked any holes in our institutional firewall to allow external access to our development server. However, I was able to show Chris and Ian some screenshots.

One thing I noted at our first meeting was the similarities between our two systems. This became even more evident after I showed Chris a simplified E-R model of our data design, as he went on to say that apart from the entities relating to access control it was basically the same as theirs. Hopefully this means that “great minds think alike” and we’re both on the right track.

After the meeting I met up briefly with Richard Wallis (one of the few faces I recognise from the old days) who went on to explain about his Juice Project. This is in effect a piece of middleware that can sit between your website and various external resources. The benefit is that instead of everyone writing their own method to access a resource, you can use someone else’s code that already does it. This sounds like a great idea and one I think we should consider using for LORLS.