When to implement?

LORLS v6 (aka LUMP + CLUMP) is now almost at a stage where we can consider going live with it here at Loughborough. Unfortunately we’re too late to launch at the start of the Summer vacation as we need time to fully advertise and train staff on the new system. That means we’ll probably launch the system at the start of the new academic year (October), Christmas or in time for the second semester (February 2011). We’re currently consulting with academic departments and library staff on when they’d prefer and are getting a strong steer that Christmas would be least disruptive for all concerned.

In the meantime we’ll obviously continue to develop and test the system. Alongside this we’re looking to create a sandbox so that staff can play on (and learn about) the system before the official launch – whenever that will be.

Speeding things up (or at least trying to)

Jason has been doing a load of work in the last few months on getting the CLUMP AJAX front end working and it’s looking rather nice. However, Gary and Jason had decided that on longer reading lists the performance wasn’t good enough. A 635 item list took over 3 minutes to completely render when Jason pulled the Reading List SU and then did separate XML API calls to retrieve each individual item (the advantage of this over just using a GetStructuralUnit API call with multiple levels is that the users could be presented with some of the data asynchronously as it arrived, rather than having to wait for the back end to process the whole lot).

So the question was: could it be made faster? One option was trying to create a single “mega” SQL select to get the data, but that could almost immediately be discounted as we pull different bits of data from different tables for different things in GetStructuralUnit (i.e. the child SU info and SU/SUT basic info couldn’t easily be munged in with the data element/data type/data type group stuff). So we did two separate selects to get the basic data, ignoring the ACL stuff. These were both subsecond responses on the MySQL server.

Now obviously turning the SQL results into XML has a bit of an overhead, as does the client-server comms, but nowhere near enough to cause this slowdown. This pointed the finger firmly in the direction of the ACLs. Every time we pull an SU (or its children) we need to do a load of ACL checks to make sure that the user requesting the data is allowed to see/edit the SU and/or the data type group. When we added ACLs back into the new “fast” XML API the 635 item list took just under two minutes to render. So we’d shaved a third off the time by crafting the SQL rather than using the Perl objects behind the XML API, but it was still a bit slow.

Gary then came up with a bright idea: why not allow FastGetStructuralUnit (the new whizzy version of the GetStructuralUnit XML API CGI script) to accept more than one SU ID at once? That way Jason’s CLUMP AJAX front end could request the outer reading list SU quickly, and then fill in the items but do them in blocks of several at once. We implemented this and had a play around with different numbers of items in the blocks. Five seemed quite good: this was fast enough to fill in the first screenful to keep the user occupied, and managed to get the whole 635 item list rendered in the browser in just over a minute, cutting roughly two thirds off the original time.
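The block-at-a-time idea can be sketched roughly as follows. This is a minimal illustration rather than the actual CLUMP code: `chunk`, `loadItemsInBlocks`, and the `fetchBlock` and `renderItems` callbacks are hypothetical names, and how FastGetStructuralUnit is really called is left to the caller.

```javascript
// Split an array of SU IDs into consecutive blocks of at most `size` IDs.
function chunk(ids, size) {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError('size must be a positive integer');
  }
  const blocks = [];
  for (let i = 0; i < ids.length; i += size) {
    blocks.push(ids.slice(i, i + size));
  }
  return blocks;
}

// Request items one block at a time, rendering each block as it arrives.
// fetchBlock(ids) is a hypothetical function that returns a Promise of the
// item data for several SU IDs in one call; renderItems(items) is a
// hypothetical callback that adds them to the page.
async function loadItemsInBlocks(itemIds, blockSize, fetchBlock, renderItems) {
  for (const block of chunk(itemIds, blockSize)) {
    const items = await fetchBlock(block);
    renderItems(items);
  }
}
```

With a block size of five, a 635 item list needs 127 item requests instead of 635, and the first screenful still appears after the first block or two.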

Jason can now also try out more advanced ideas in the future, such as dynamically altering the number of items requested in the blocks based on response time and whether the user is scrolling down to them or not. With lists under 100 items we’re getting a sub-10 second rendering time, so that’s hopefully going to be fast enough for the majority of users… and may even encourage some academics with long and unwieldy reading lists to split them up into smaller sub lists.
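One way the response-time idea might look is sketched below. Nothing like this exists in CLUMP yet; `nextBlockSize` and its thresholds (`targetMs`, `minSize`, `maxSize`) are made-up illustrative values, not measurements.

```javascript
// Pick the next block size from how long the previous block took.
// targetMs, minSize and maxSize are illustrative defaults, not tuned values.
function nextBlockSize(currentSize, elapsedMs, options = {}) {
  const { targetMs = 1000, minSize = 1, maxSize = 50 } = options;
  let size = currentSize;
  if (elapsedMs > targetMs * 1.5) {
    size = Math.floor(currentSize / 2); // much too slow: halve the block
  } else if (elapsedMs < targetMs * 0.5) {
    size = currentSize + 1; // comfortably fast: grow gently
  }
  // Keep the result inside the allowed range.
  return Math.min(maxSize, Math.max(minSize, size));
}
```

Halving on slow responses and growing by one on fast ones keeps the size from swinging wildly between requests.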

BibGrab

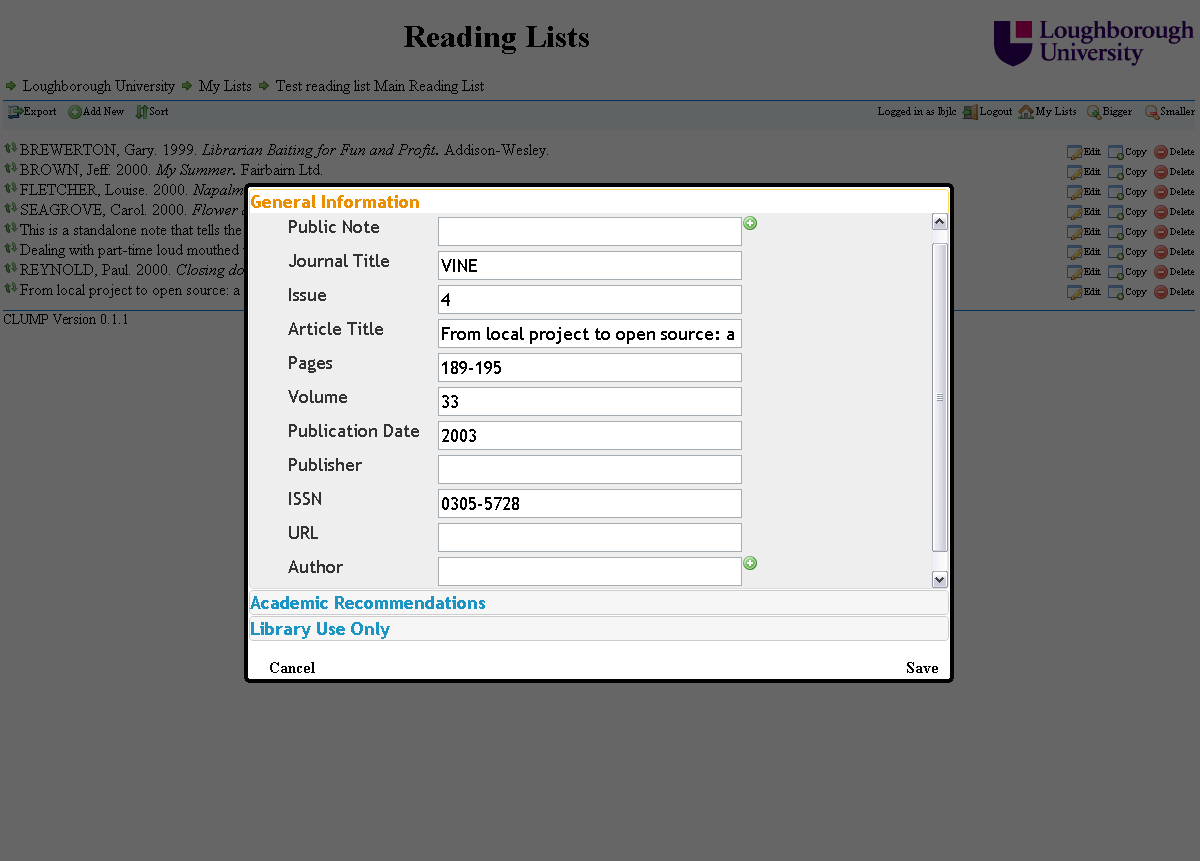

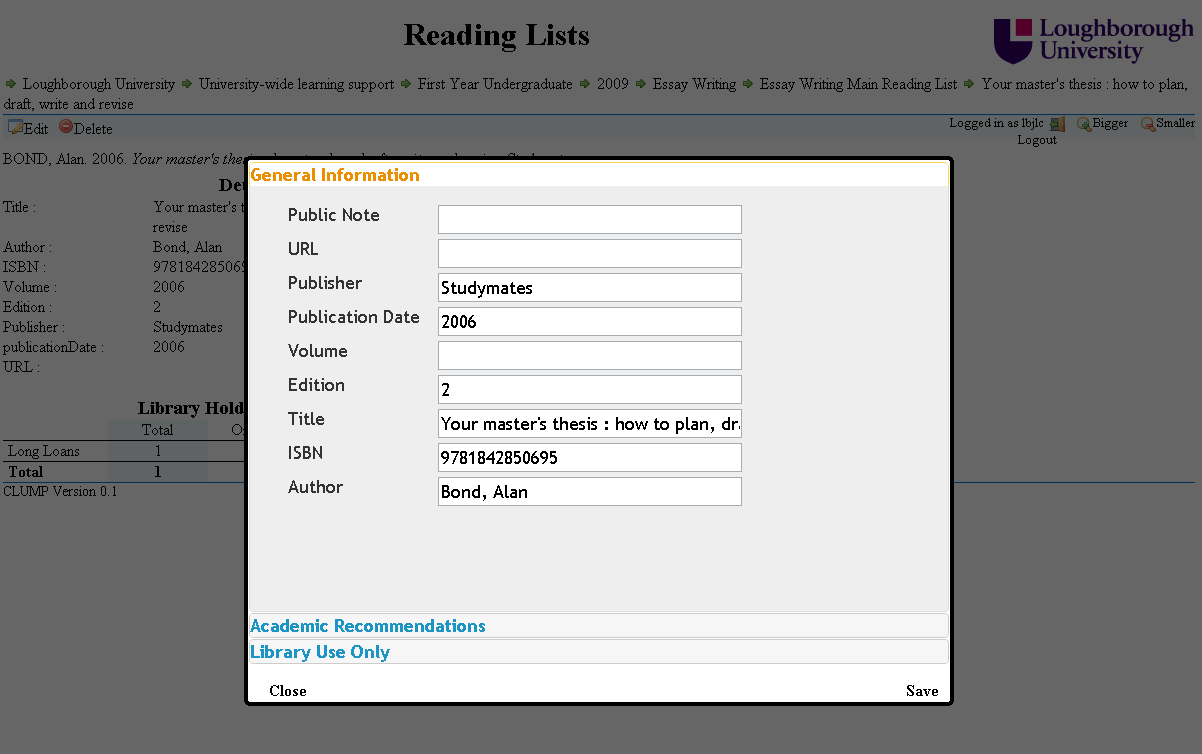

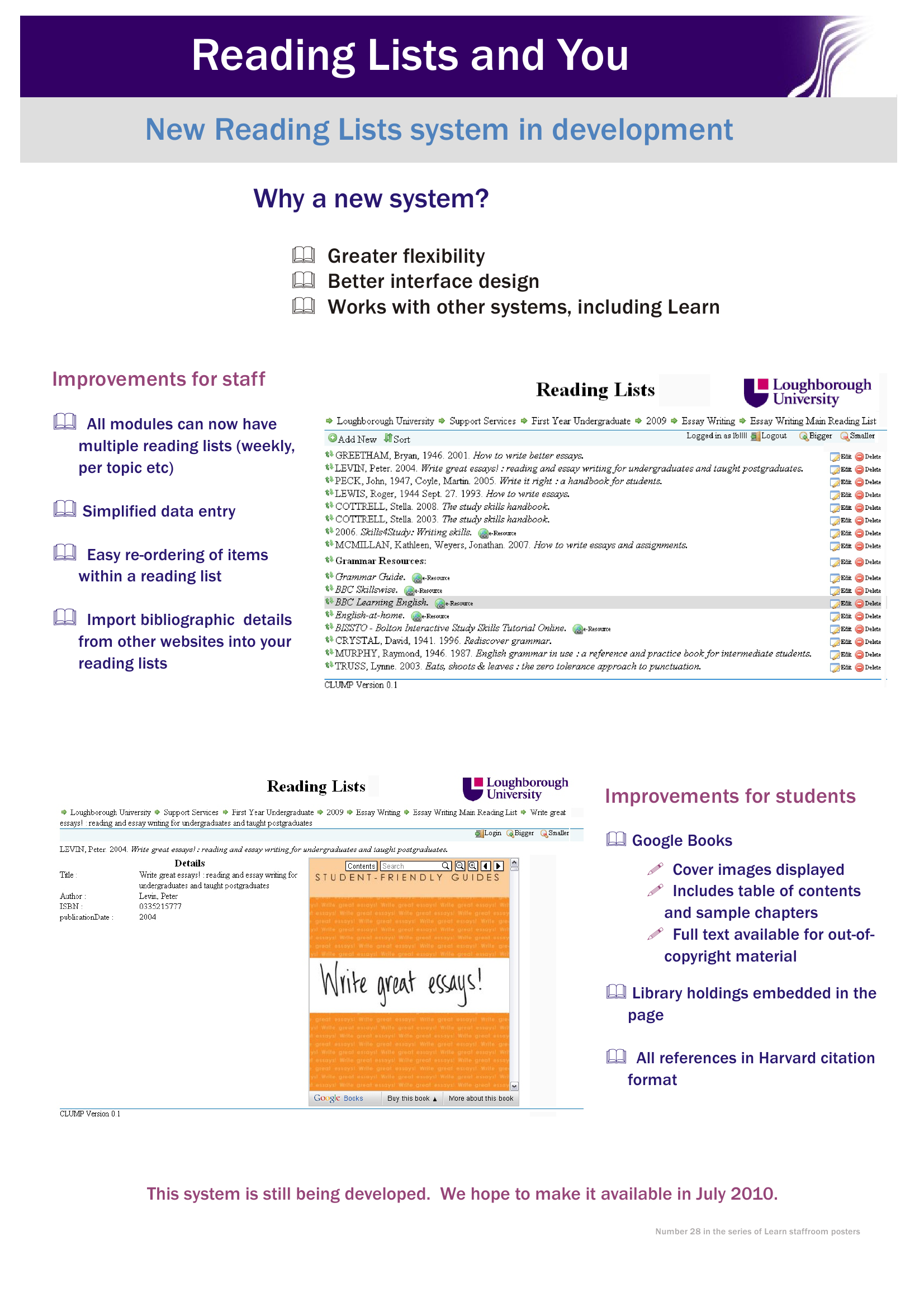

For a long time we have been told that staff want an easy way to add an item to a reading list. To make item entry easier the data entry forms for LORLS v6 are specific to the type of item being added. This should help avoid confusion when people are asked for irrelevant metadata (e.g. asking for an ISBN for a web page).

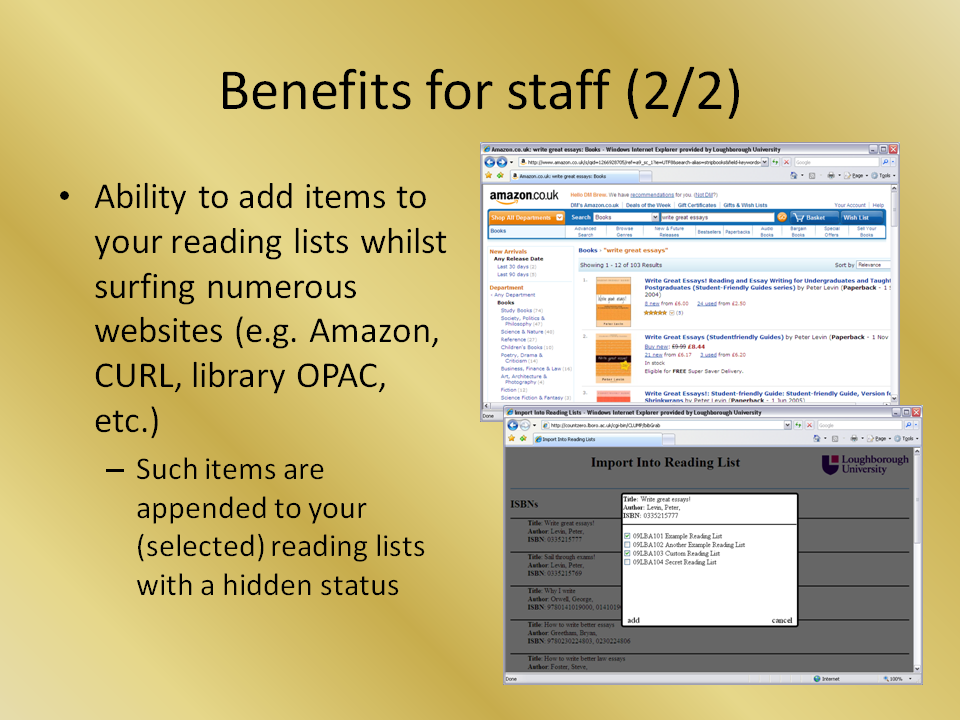

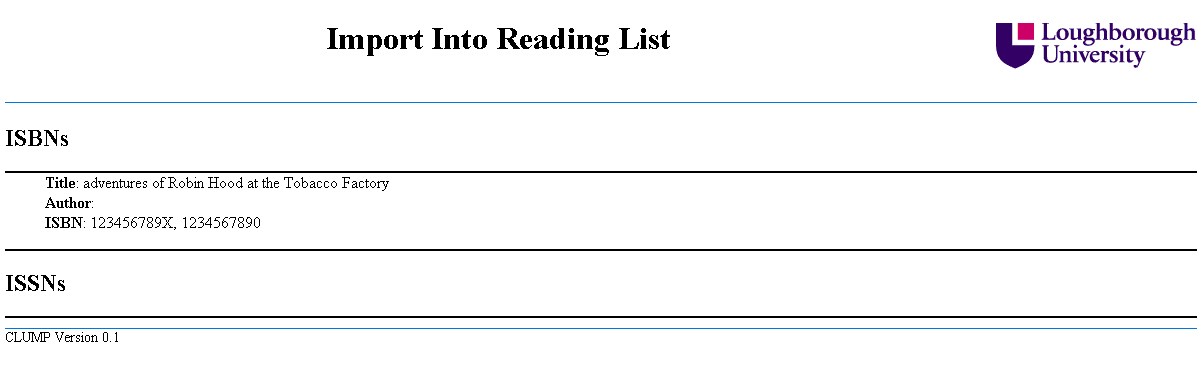

Recently I have been working on BibGrab, our tool to allow staff to add items to their reading list from any web page that has an ISBN or ISSN on it. BibGrab consists of two parts. The first part is a piece of JavaScript that is added as a bookmark to their browser; when they select that bookmark in future, the JavaScript is run with access to the current page. The second part is a CGI script that sits alongside CLUMP, processes the information and presents the options to the users.

The bookmark JavaScript code first decides what the user wants it to work with. If the user has selected some text on the page then it works with that; otherwise it will use the whole page. This helps if there are a lot of ISBNs/ISSNs on the page and the user is only interested in one of them.

It then prepends to that the current page’s URL and title, which lets BibGrab offer the option of adding the web page to a reading list as well as any ISBNs/ISSNs found. This information is then used to populate a form that it appends to the current page. The form’s target is set to ‘_blank’ to open a new window and the action of the form is set to the CGI script. Finally the JavaScript submits the form.
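The bookmark’s steps could be sketched along these lines. This is not the real BibGrab code: the function names, the `content` field name, the `cgiUrl` parameter, the use of POST and the newline-separated layout of URL, title and text are all assumptions made for the illustration.

```javascript
// Choose the text to scan: the user's selection if there is one,
// otherwise the text of the whole page.
function chooseText(selectedText, pageText) {
  return selectedText && selectedText.trim() !== '' ? selectedText : pageText;
}

// Prepend the page URL and title so the server side can offer the page itself
// as an item. The newline-separated layout is an assumption of this sketch.
function buildPayload(url, title, selectedText, pageText) {
  return [url, title, chooseText(selectedText, pageText)].join('\n');
}

// Browser-only part: append a hidden form to the page and submit it so the
// result opens in a new window. cgiUrl and the field name are hypothetical.
function submitToBibGrab(doc, win, cgiUrl) {
  const form = doc.createElement('form');
  form.method = 'post';
  form.action = cgiUrl;
  form.target = '_blank';
  form.style.display = 'none';

  const field = doc.createElement('textarea');
  field.name = 'content';
  field.value = buildPayload(
    win.location.href,
    doc.title,
    String(win.getSelection()),
    doc.body.innerText
  );

  form.appendChild(field);
  doc.body.appendChild(form);
  form.submit();
}
```

In a bookmark this would be wrapped in a `javascript:` URL and called as `submitToBibGrab(document, window, ...)` with the address of the CGI script.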

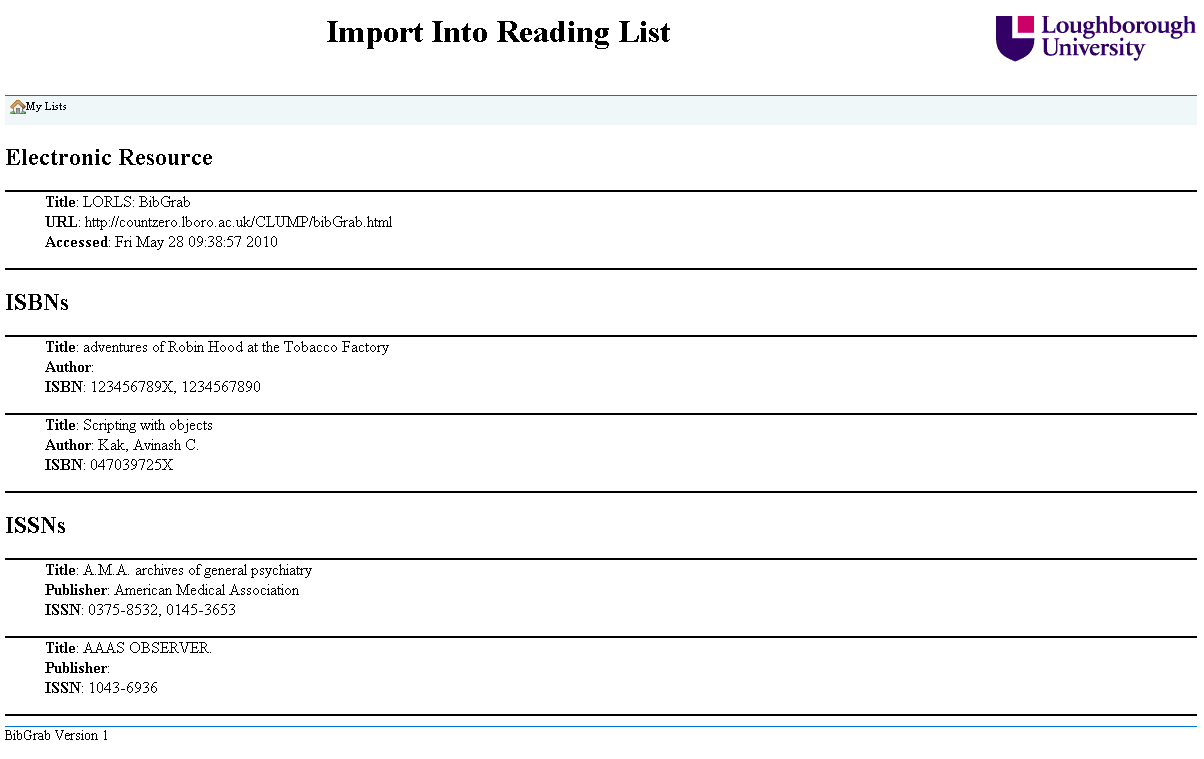

The CGI script takes the input from the form and then searches out the web page details the JavaScript added and any possible ISBNs and ISSNs. The ISBNs and ISSNs then have their check digits validated and any that fail are rejected. The remaining details are then used to put together a web page that uses JavaScript to look up the details for each ISBN and ISSN and display these to the user. The web page requires the user to be logged in. As it uses CLUMP’s JavaScript functions for a lot of the work, it can see whether they have already logged into CLUMP that session and, if they haven’t, ask them to log in.
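For reference, the check digit tests work as sketched below. The real validation happens in the CGI script, so this JavaScript version only illustrates the standard ISBN-10, ISBN-13 and ISSN rules; the function names are invented for the example.

```javascript
// Strip spaces and hyphens and upper-case any trailing "x".
function normalise(s) {
  return s.replace(/[\s-]/g, '').toUpperCase();
}

// Weighted mod 11 check shared by ISBN-10 and ISSN: weights count down from
// the length of the number to 1, and "X" (value 10) may only appear last.
function mod11Valid(chars) {
  let sum = 0;
  for (let i = 0; i < chars.length; i++) {
    const c = chars[i];
    let value;
    if (c === 'X' && i === chars.length - 1) {
      value = 10;
    } else if (c >= '0' && c <= '9') {
      value = Number(c);
    } else {
      return false;
    }
    sum += value * (chars.length - i);
  }
  return sum % 11 === 0;
}

function isValidIsbn10(s) {
  const c = normalise(s);
  return c.length === 10 && mod11Valid(c);
}

function isValidIssn(s) {
  const c = normalise(s);
  return c.length === 8 && mod11Valid(c);
}

// ISBN-13: digits weighted 1,3,1,3,... must sum to a multiple of 10.
function isValidIsbn13(s) {
  const c = normalise(s);
  if (!/^\d{13}$/.test(c)) return false;
  let sum = 0;
  for (let i = 0; i < 13; i++) {
    sum += Number(c[i]) * (i % 2 === 0 ? 1 : 3);
  }
  return sum % 10 === 0;
}
```

A check like this throws away most random digit strings that merely look like an ISBN or ISSN, though roughly one in ten or eleven will still pass by chance.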

Once logged in they can see all the items that BibGrab found.

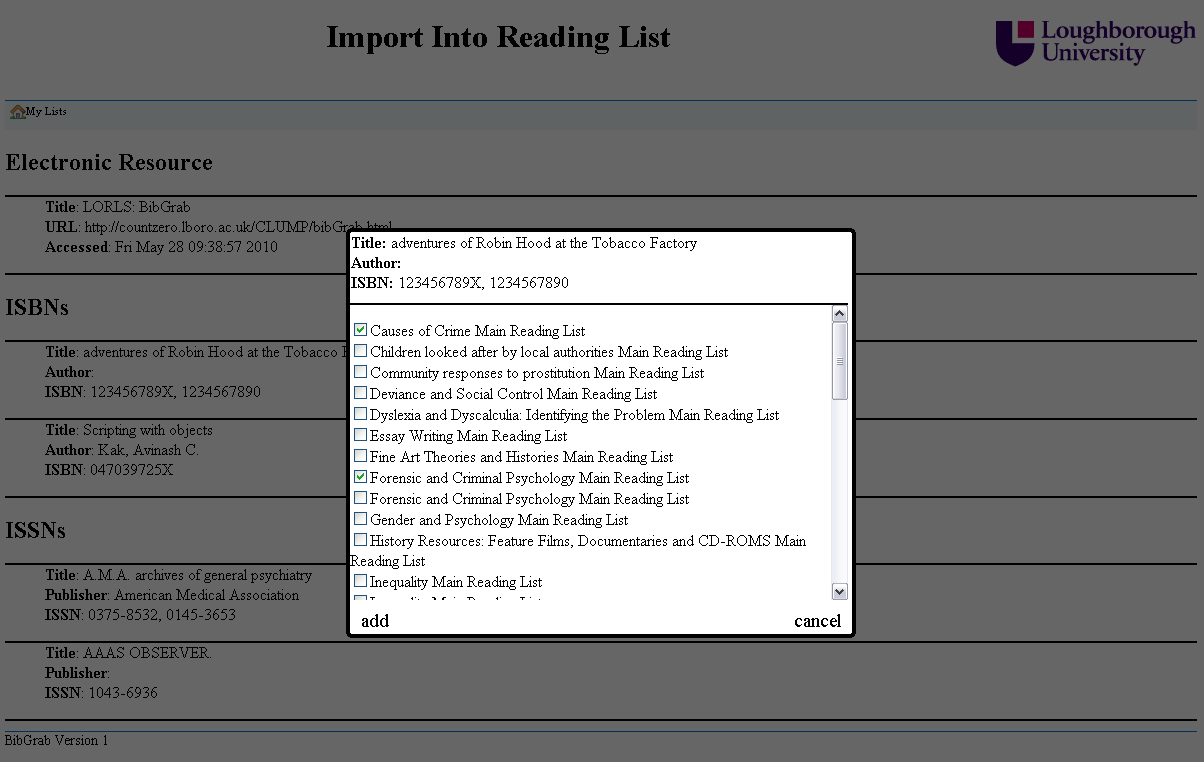

When they select an item they are presented with all the details for that item, and if it is a journal they are also presented with some boxes for adding in details to specify a particular article, issue or volume. They are also presented with a list of their reading lists, of which they can select as many as they like; when they click add, the item is added to all their selected reading lists. The item is added to the end of each reading list in draft mode. This makes it easier for people to add items to their reading lists when they find them without worrying how it will affect their list’s layout.

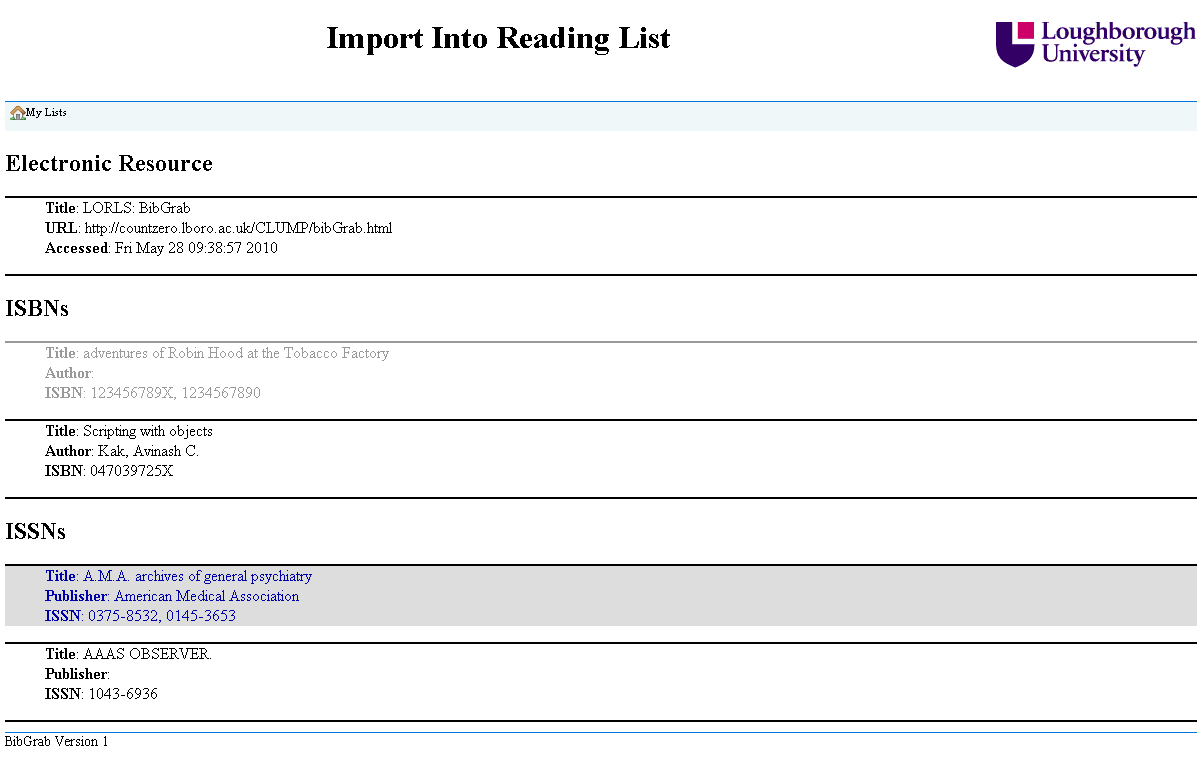

After the item has been added to their chosen reading lists it is grayed out as a visual indication that it has already been used. They can still select it again and add it to even more reading lists if they want or they can select another item to add to their reading lists.

Presentation to Users Committee

Demoing to library staff

Today we held a demo of LORLS v6/CLUMP for any and all interested library staff. Previously we’d only demoed it to a small focus group of library staff and those few academics that came to the e-Learning showcase. Reactions to the system seemed pretty positive although it did highlight that we still have a long way to go as the staff gave us a long list of “must have” additional features. These include:

- Alert library staff to any changes made to reading lists

- Logo for the Service (other than just the words “Online Reading Lists”)

- Can the data be cleaned up? For example remove dates from authors

- Include classification/shelfmark on full record

- Change colour of links when you hover over them

- Need to think about terminology for hide/unhide option

- Useful to have number against items on long lists – these are often used when liaising with academics

- Have an alternative to drag and drop re-ranking for large lists

- Draft items on lists should be greyed out

- Option to publish all draft items on a list at once

Another Debugging Tip

As we reach the stage where we will be demoing LORLS v6 more often I figured it was time to make my debugging code easy to switch off. This resulted in two new JavaScript functions, debug and debugWarn. Both are wrappers that first check the global variable DEBUG and, if it is set, call the relevant method from the console object (either log or warn).

Now to switch off debug messages we simply set DEBUG to 0, and to switch them back on we set it to 1.

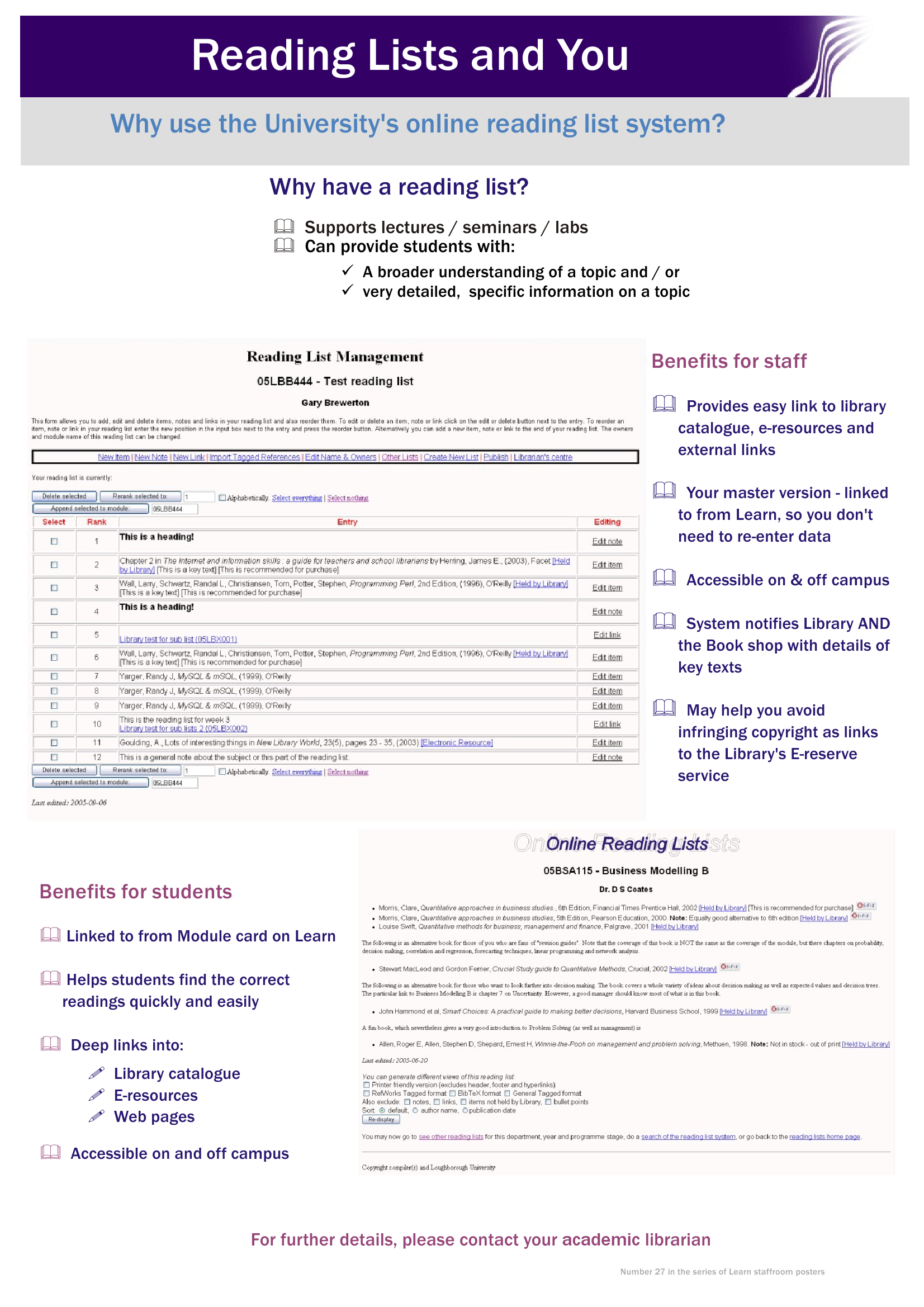
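In text form, wrappers of this kind look roughly like the following. This is a sketch based on the description above; passing the arguments straight through to the console is an assumption about the details.

```javascript
// Global switch: 1 shows debug messages, 0 hides them.
let DEBUG = 1;

// Log a message only when debugging is switched on.
function debug(...args) {
  if (DEBUG) {
    console.log(...args);
  }
}

// As debug(), but uses console.warn so the message stands out.
function debugWarn(...args) {
  if (DEBUG) {
    console.warn(...args);
  }
}
```

Calling `debug('loaded list', listId)` then costs nothing visible in a demo once DEBUG is set to 0.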

e-Learning Showcase

Spent a large part (11am-3pm) of today at an e-Learning showcase on campus. One of our Academic Librarians and I were there to demonstrate the new version of the reading list system to academics and support staff. Unfortunately the space provided for the poster session was far from ideal which meant that we got to meet very few people and more importantly was some distance away from the mince pies and mulled wine.

However, whilst the quantity was low the quality of visitors was high. Of particular interest to the academics was the drag-and-drop reordering of lists and the ability to import bibliographic data from random websites. The latter is Jason’s new BibGrab tool.

Another positive from the event was the plate of mince pies I got for holding a couple of doors open for the catering staff when they were clearing up at the end. I must remember to share these with the rest of the team…

BibGrab Proof Of Concept

One tool on our list of things required for LORLS v6 is BibGrab for which we have just finished the proof of concept. It finds ISBNs and ISSNs from web pages and looks up their metadata on COPAC.

The main piece of functionality missing from the proof of concept is the ability to actually add an item to a reading list. As well as adding this functionality we are also aiming to make it pick up details about the web page it was initiated on so that staff can add web pages to their reading lists.

While the source currently used to look up metadata is hard-coded, the release version should have a number of sources it can use and an order of preference for them. This way the metadata will match that of the local LMS if it has a matching record, and BibGrab will only try other sources if it doesn’t.
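The order-of-preference idea might look something like this. It is only a sketch of the planned behaviour: `lookupMetadata` and the shape of a source object (`name` plus a `lookup` function returning a record or `null`) are hypothetical.

```javascript
// Try each metadata source in order of preference and return the first hit.
// Each source is a hypothetical object of the form { name, lookup(id) },
// where lookup returns a record object, or null when it has no match.
function lookupMetadata(identifier, sources) {
  for (const source of sources) {
    const record = source.lookup(identifier);
    if (record) {
      return { source: source.name, record };
    }
  }
  return null; // no source knew this ISBN/ISSN
}
```

Listing the local LMS first and COPAC second would give the behaviour described: COPAC is only consulted when the LMS has no matching record.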

More ACL fun

After fiddling with ACLs for the last few weeks we had to reload the access_control_list table with the new style ACLs. Last week I’d written a “fixacls” Perl script to do this and left it running. It was still running a week later when I came in this morning, and was only about half way through the >88K SUs we’ve got. A quick back of the envelope calculation showed that with the new ACL format we’d be looking at north of 2 million ACLs for this many SUs.

So, time to find a way of speeding it up. I decided to try something I’d seen phpBB do: write all the imports for a table into a single SQL INSERT statement. This should then be able to read all data in and generate the index entries all in one go, which should be much faster than doing it row by row in individual SQL statements.
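To show the shape of the statement, here is a small sketch of building a multi-row INSERT. The real fixacls script is Perl and the actual columns of access_control_list aren’t shown here, so `buildBulkInsert` and the column names in the usage note are purely illustrative.

```javascript
// Build one multi-row INSERT statement from an array of rows.
// Only integer values are accepted, which sidesteps quoting and escaping;
// anything else would need proper SQL escaping before being written out.
function buildBulkInsert(table, columns, rows) {
  const identifier = /^[A-Za-z_][A-Za-z0-9_]*$/;
  for (const name of [table, ...columns]) {
    if (!identifier.test(name)) {
      throw new Error('bad identifier: ' + name);
    }
  }
  if (rows.length === 0) {
    throw new Error('no rows to insert');
  }
  const tuples = rows.map((row) => {
    if (row.length !== columns.length) {
      throw new Error('wrong number of values in row');
    }
    for (const value of row) {
      if (!Number.isInteger(value)) {
        throw new Error('only integer values are supported in this sketch');
      }
    }
    return '(' + row.join(',') + ')';
  });
  return 'INSERT INTO ' + table + ' (' + columns.join(',') + ') VALUES\n' +
    tuples.join(',\n') + ';';
}
```

For example, `buildBulkInsert('access_control_list', ['su_id', 'usergroup_id'], [[1, 2], [3, 4]])` produces a single statement with two value tuples, where `su_id` and `usergroup_id` are made-up column names.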

The ACL generation script took just over 6 hours (I didn’t time it exactly unfortunately, but it was ticking along at about 300000 ACLs per hour, with just over 2 million ACLs in total for the 88882 SUs we’ve got).

Reading this into the database was actually quite quick:

mysql> source /home/cojpk/LUMP/trunk/ImportScripts/acl.sql

Query OK, 2266017 rows affected (51.98 sec)

Records: 2266017 Duplicates: 0 Warnings: 0

Well, it was once I’d upped max_allowed_packet in /etc/my.cnf to 100MB from 1MB! Looking promising now. A similar technique might be useful for a revamp of the LORLS import script; certainly the ACL fixup can now be run as part of that to cut down on the time required. I still need to tweak the fixacls script to deal with LORLS reading lists that were marked as unpublished, but that should be a quick and easy hack.