Jason Cooper

Posts by Jason Cooper

Extending the Structural Unit Types available

One additional Structural Unit Type (SUT) that we have been asked for is Audio Visual (AV) material, e.g. CDs, DVDs, film, etc. While we’ve manually added an AV SUT to our local instance, we didn’t have an easy way to extend this to other instances of LORLS.

So to tackle this we have put together a quick Perl script that can be run from the command line which adds in the new AV SUT. If your LORLS install doesn’t have an AV Material SUT and you would like to add it then here are the instructions to do so:

- Back up your LORLS install (Don’t forget the database as this will be altered)

- Download the latest extendSUTs script (e.g. wget "https://blog.lboro.ac.uk/lorls/wp-content/uploads/sites/3/2014/11/extendSUTs")

- Make the script executable (e.g. chmod +x extendSUTs)

- Run the script (e.g. ./extendSUTs --database=<database> --user=<database user>)

- When prompted enter the database user’s password

- If the script fails because the Term::ReadKey Perl module is missing, install it and try the script again (on RedHat/CentOS you should just need to run "sudo yum install perl-TermReadKey")

- Once the script has run, open a new browser session and try adding a new AV Material entry to a test list.

Enhancing the look and feel of tables

Recently we have looked at improving some of the tables in CLUMP. At first we thought that it would involve quite a bit of work, but then we came across the DataTables jQuery plugin. After a couple of days of coding we’ve used it to enhance a number of tables on our development version of CLUMP. Key features that attracted us to it are:

Licencing

DataTables is made available under an MIT Licence, so it is very developer friendly.

Easy to apply to existing tables

One of the many data sources that DataTables supports is the DOM, so you can use it to quickly enhance an existing table.

Pagination

Sometimes a long table can be unwieldy. With the pagination options in DataTables you can specify how many entries to show by default and how the next/previous page options should be presented to the user. Of course, you can also disable pagination to display the data in one full table.

Sortable columns

One of the most useful features DataTables has is its ability to allow the user to order the table by any column by simply clicking on that column’s header, an action that has become second nature to a lot of users. It’s also possible to provide custom sorting functions for columns if the standard sorting options don’t work for the data they contain.

Instant search

As users type their search terms into the search box DataTables hides table rows that don’t meet the current search criteria.

Extensible

There are a number of extensions available for DataTables that enhance its features, from allowing users to reorder the columns by dragging their headers about, to adding the option for users to copy the table to the clipboard or export it as a CSV, XLS or even a PDF.

Very Extensible

If you need some bespoke functionality then you can use its plug-in architecture to create your own plug-in to provide it.

CLUMP Improvements

It has been a while since I posted any updates on improvements to CLUMP (the default interface to LORLS), and as we have just started testing the latest beta version it seemed a good time to make a catch-up post. So other than the Word export option, what other features have been introduced?

Advanced sort logic

With the addition of sub-headings in version 7 of LORLS it soon became obvious that we needed to improve the logic of our list sorting routines, as they were no longer intuitive. Historically our list sorting routines have treated the list as one long list (as without sub-headings there was no consistent way to denote a subsection of a list other than using a sub-list).

The new sorting logic is to break the list into sections and then sort within each section. In addition to keeping the ordering of the subsections, any note entries at the top of a section are considered to be sticky and as such won’t be sorted.

Finally, if there are any note entries within the items to be sorted, the user is warned that these will be sorted with the rest of the subsection’s entries.
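The section-by-section sort described above can be sketched roughly as follows. This is an illustrative sketch in Python rather than CLUMP's own JavaScript, and the entry structure (dictionaries with hypothetical `kind` and `title` fields) and the function name are assumptions made for the example. The warning about notes that sit among the sorted items is left out.

```python
from itertools import takewhile


def sort_list(entries, key=lambda e: e["title"].lower()):
    """Sort entries within each section, keeping the section order intact.

    Each entry is assumed to be a dict with a "kind" of "heading",
    "note" or "item" and a "title" (hypothetical field names).
    """
    # Split the list into sections; a sub-heading starts a new section.
    sections = [[]]  # the first section holds anything before the first sub-heading
    for entry in entries:
        if entry["kind"] == "heading":
            sections.append([entry])
        else:
            sections[-1].append(entry)

    result = []
    for section in sections:
        head = [e for e in section[:1] if e["kind"] == "heading"]
        body = section[len(head):]
        # Notes at the top of a section are sticky and stay where they are.
        sticky = list(takewhile(lambda e: e["kind"] == "note", body))
        rest = body[len(sticky):]
        # Everything else, including any later notes, is sorted together.
        result += head + sticky + sorted(rest, key=key)
    return result
```

A note that appears below the first item of a section is not sticky, so it is sorted along with the items, which is the case the user is warned about.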

Article suggestions

Another area that we have been investigating is suggestions to list owners for items they might want to consider for their lists. The first stage of this is the inclusion of a new question on the dashboard, “Are there any suggested items for this list?”. When the user clicks on this question they are shown a list of suggested articles based upon ExLibris’s bX recommender service.

To generate the article suggestions, the articles currently on the list are taken and bX is queried for recommendations for each of them. All of the returned suggestions are then sorted so that the more common recommendations are suggested first.
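As a rough illustration of that ordering step, the sketch below counts how often each suggestion comes back and lists the most frequent first. It is written in Python for brevity; `recommend` is a hypothetical stand-in for whatever performs the bX lookup, not a real bX client call.

```python
from collections import Counter


def rank_suggestions(list_articles, recommend):
    """Order suggestions so those recommended for the most articles come first.

    recommend is a hypothetical callable that takes one article and
    returns the suggestions the recommender gives for it.
    """
    counts = Counter()
    for article in list_articles:
        # Count each suggestion at most once per article on the list.
        # sorted() keeps the tie order the same from run to run.
        for suggestion in sorted(set(recommend(article))):
            counts[suggestion] += 1
    # most_common() returns (suggestion, count) pairs, highest count first.
    return [suggestion for suggestion, _ in counts.most_common()]
```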

Default base for themes

CLUMP now has a set of basic styles and configurations that are used as the default options. These defaults are then overridden by the theme in use. This change was required to make the task of maintaining custom themes easier. Previously, missing entries in a customised theme had to be identified and updated by hand before the theme could be used. Now those custom themes keep working, because any missing entries fall back to the system default.
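The fallback behaviour can be pictured as a lookup that tries the theme first and the defaults second. The sketch below is in Python rather than CLUMP's JavaScript, and the setting names and values are invented purely for illustration.

```python
from collections import ChainMap

# Hypothetical system defaults; the real CLUMP settings will differ.
DEFAULTS = {
    "heading_colour": "#333333",
    "font": "sans-serif",
    "logo": "default.png",
}


def effective_theme(theme, defaults=DEFAULTS):
    """Return a view where theme entries win and gaps fall back to the defaults."""
    return ChainMap(theme, defaults)
```

A custom theme that only sets `heading_colour` would then still yield a `font` and a `logo`, taken from the defaults.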

The back button

One annoyance with CLUMP has been that, because it is AJAX based, the back button would take users to the page they were on before they started looking at the system rather than the Department, Module or List they were looking at previously. This annoyance has finally been removed by using the hash-ref part of the URL to identify the structural unit currently being viewed.

Every time the user views a new structural unit the hash-ref is updated with its ID. Instead of reloading the page when the user or browser changes the hash-ref (e.g. through clicking the back button) a JavaScript event is triggered. The handler attached to this event parses the hash-ref and extracts the structural unit ID which is used to display the relevant structural unit.

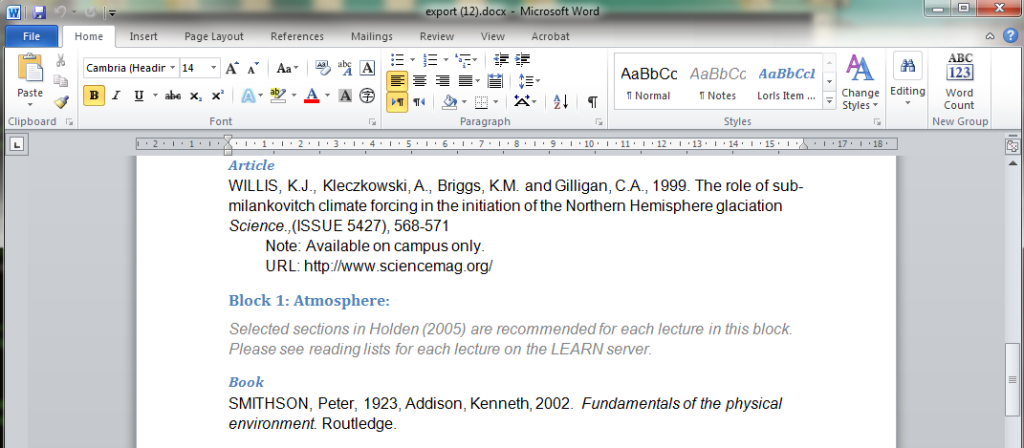

Exporting to a Word document

A new feature we have been working on in our development version of CLUMP is the option for a list editor to export their reading list as a Word document (specifically in the docx format). This will be particularly beneficial for academics who want to extract a copy of their list in a suitable format for inclusion in a course/module handbook. A key requirement we had when developing it was that it should be easy to alter the styles used for headings, citations, notes, etc.

As previously mentioned by Jon, the docx format is actually a zip file containing a group of XML files. The text content of a document is stored within the “w:body” element in the document.xml file. The style details are stored in another of the XML files. Styles and content being stored in separate files allows us to create a template.docx file in Word, in which we define our styles. The export script then takes this template and populates it with the actual content.

When generating an export the script takes a copy of the template file, treats it as a zip file and extracts the document.xml file. Then it replaces the contents of the w:body element in that extracted file with our own XML before overwriting the old document.xml with our new one. Finally we pass this docx file from the server’s memory to the user. All of this is done in memory, which avoids the overheads associated with generating and handling temporary files.
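The steps above can be sketched in a few lines. This is a minimal Python illustration of the technique, not the export script itself; the function name is hypothetical, and it assumes the usual docx layout in which document.xml lives at `word/document.xml` inside the archive.

```python
import io
import re
import zipfile


def fill_template(template_bytes, body_xml):
    """Return a new .docx (as bytes) with the w:body contents replaced.

    Everything happens in memory: no temporary files are written.
    """
    out = io.BytesIO()
    with zipfile.ZipFile(io.BytesIO(template_bytes)) as src, \
            zipfile.ZipFile(out, "w", zipfile.ZIP_DEFLATED) as dst:
        for info in src.infolist():
            data = src.read(info.filename)
            if info.filename == "word/document.xml":
                text = data.decode("utf-8")
                # Swap whatever is inside <w:body>...</w:body> for our XML.
                # A function is used as the replacement so that backslashes
                # in body_xml are not treated as regex escapes.
                text = re.sub(
                    r"(<w:body[^>]*>).*(</w:body>)",
                    lambda m: m.group(1) + body_xml + m.group(2),
                    text,
                    flags=re.DOTALL,
                )
                data = text.encode("utf-8")
            # Styles and every other part are copied across unchanged.
            dst.writestr(info, data)
    return out.getvalue()
```

Because the whole body is replaced, anything the template keeps inside w:body (such as section properties) would need to be included in the generated XML if it is to survive.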

To adjust the formatting of the styles in the template it can simply be loaded into Word, where the desired changes to the styles can be made. After it has been saved it can be uploaded to the server to replace its existing template.

Meeting the Reading List Challenge 2013 announced

The annual Meeting the Reading List Challenge event will be taking place at Loughborough again. The announcement about the free event can be seen below.

Meeting the Reading List Challenge

Keith Green Building, Loughborough University

Thursday 4th April 2013, 10:30am – 3:30pm

This free showcase event will highlight experiences from a number of institutions in their use and development of resource/reading list management systems. There will be five presentations (further details to follow) throughout the day. In addition there will be a buffet lunch provided during which there will be a suppliers’ exhibition.

This is a free event. If you would like to attend please email Gary Brewerton (g.p.brewerton@lboro.ac.uk) to reserve a place stating your name, institution and any specific dietary requirements.

More details of this year’s event and the results of previous years’ events can be seen on the Meeting the Reading List Challenge website.

Trouble installing? Disable SELinux

We are currently working on a new distribution of LORLS (That’s right version 7 is coming soon) and to test the installer’s ability to update an existing installation we needed a fresh v6 install to test on. So I dropped into a fresh virtual machine we have dedicated specifically to this kind of activity, downloaded the version 6 installer and ran through the installation only to find that, while it had created the database and loaded the initial test data just fine, it hadn’t installed any of the system files.

So for the next 3 hours I was scouring Apache’s logs, checking the usual culprits for this sort of issue and debugging the code. One of the first things I did was check the SELinux configuration and it was set to permissive, which means that it doesn’t actually block anything, it just warns the user. This led me to discount SELinux as the cause of the problem.

After 3 hours of debugging I finally reached the stage of having a test script that would work when run by a user but not when run by Apache. The moment that I had this output I realised that while SELinux may be configured to be permissive, it will only pick up this change when the machine is restarted. So I manually tried disabling SELinux (as root, use the command 'echo 0 > /selinux/enforce') and then tried the installer again.

Needless to say the installer worked fine after this, so if you are installing LORLS and find that it doesn’t install the files check that SELinux is disabled.

A new reading list system added to the list of other systems

Today PTFS Europe announced their new reading list management system – Rebus:list. So we have added it to our list of other systems (alongside Telstar, Talis Aspire, etc.)

If anyone knows of any reading list management systems not included in our list of other systems, then please let us know and we will add them to the list.

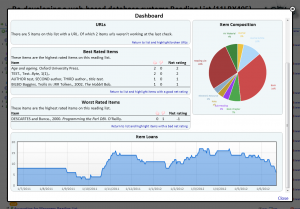

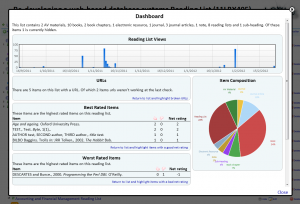

Academic dashboard in beta

One area of discussion at last year’s Meeting the Reading List Challenge workshop was around the sort of statistics that academics would like about their reading lists. Since then we have put the code in to log views of reading lists and other bits of information.

Two months ago, having collected 10 months of statistics, we decided to put together a dashboard for owners of reading lists. The current beta version of the dashboard contains the following information:

- Summary – a quick summary of the list and the type of content on it

- Views of the reading list – how many people have viewed the list

- URLs – details of the number of items on the list with a URL, and details of any URLs that appear to be broken (with an option to highlight those items with broken URLs on the reading list)

- Best rated items – the top user-rated items on the list (again with an option to highlight those items on the reading list)

- Worst rated items – the lowest user rated items on the list (yet again with an option to highlight those items on the reading list)

- Item Composition – a graphical representation of the types of items on the reading list

- Item Loans – a graph showing the number of items from the reading list loaned out by the Library over the past year.

Big changes under the hood and a couple of minor ones above

A few weeks ago we pushed out an update to our APIs on our live instance of LORLS and this morning we switched over to a new version of our front end (CLUMP). The changes introduced in this new version are the following:

- Collapsible sub-headings

- Improved performance

- Better support for diacritics

Collapsible sub-headings

Following a suggestion from an academic we have added a new feature for sub-headings. Clicking on a sub-heading will now collapse all the entries beneath it. To expand the section out again the user simply needs to click on the sub-heading again. This will be beneficial to both academics maintaining large lists and students trying to navigate them.

Improved performance

In our ever-present quest to improve performance, both actual and perceived, we decided to see if using JSON instead of XML would help. After a bit of experimentation we discovered that using JSON and JSONP would both reduce the quantity of JavaScript code in CLUMP’s routines and significantly improve the performance.

Adjusting Jon’s APIs in the back end (LUMP) to return in either XML, JSON or JSONP format was quite easy once the initial code had been inserted in the LUMP module’s respond routine. Then it was simply a matter of adding 4 lines of code to each API script.
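To give a feel for what a single respond routine serving all three formats involves, here is a small sketch. LUMP itself is written in Perl; this Python version, including the function name, the `<response>` wrapper element and the flat dictionary input, is an assumption made for illustration only.

```python
import json
import re
from xml.sax.saxutils import escape


def respond(data, fmt="xml", callback=None):
    """Serialise a flat dict as XML, JSON or JSONP.

    Returns a (content type, body) pair.
    """
    if fmt == "json":
        return "application/json", json.dumps(data)
    if fmt == "jsonp":
        # JSONP wraps the JSON in a call to a function named by the client,
        # so only accept names that look like plain JavaScript identifiers.
        if not re.fullmatch(r"[A-Za-z_$][\w$.]*", callback or ""):
            raise ValueError("invalid JSONP callback name")
        return "application/javascript", f"{callback}({json.dumps(data)});"
    fields = "".join(f"<{k}>{escape(str(v))}</{k}>" for k, v in data.items())
    return "application/xml", f"<response>{fields}</response>"
```

With the format decision made in one place, each API script only has to pass its result and the requested format through.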

Switching CLUMP to using JSONP was a lot more time consuming. Firstly every call had to have all its XML parsing code removed, and then the rest of the code in the routine needed to be altered to use the JavaScript object received from the API. This resulted in JavaScript that is nicer to write and read, and in smaller functions.

Secondly a number of start up calls had been synchronous, so the JavaScript wouldn’t continue executing until the response from the server had been received and processed. JSONP calls don’t have a synchronous option. The solution in the end was to use a callback from the function that processes the JSONP response from the server, and with a clever bit of coding this actually enabled us to make a number of calls in parallel and continue only after all of them had completed. Previously the calls were made one after the other, each having to wait for the preceding call to have been completed before it could start. While this only saved about half a second on the start up of CLUMP, it made a big difference to the user’s perception of the system’s performance.
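The "continue only after all of them had completed" idea boils down to keeping track of which responses are still outstanding. The sketch below shows that bookkeeping in Python; CLUMP does this in JavaScript, and the class and method names here are hypothetical.

```python
class WhenAll:
    """Run on_done once every named call has reported its response."""

    def __init__(self, names, on_done):
        self.pending = set(names)
        self.results = {}
        self.on_done = on_done

    def callback(self, name):
        """Return the callback to hand to the call identified by name."""
        def handle(response):
            if name not in self.pending:
                return  # ignore duplicate or unexpected responses
            self.results[name] = response
            self.pending.discard(name)
            if not self.pending:
                # The last response has arrived, so start up can continue.
                self.on_done(self.results)
        return handle
```

Each start up call is given its own callback from `callback(name)`. The calls can then be issued together, and whichever response arrives last triggers `on_done`.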

Better support for diacritics

This was actually another beneficial side-effect of switching to JSONP over XML for most of our API calls. In Internet Explorer it was discovered that some UTF-8 diacritic characters in the data would break its XML parser, but because JSONP doesn’t use XML these UTF-8 characters are passed through and displayed fine by the browser. Of course we do sometimes find some legacy entries in a reading list, created many years ago in a previous version of LORLS, which are in the Latin-1 character set rather than UTF-8, but even these don’t break the JavaScript engine (though they don’t necessarily display the character that they should).
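The legacy Latin-1 problem is easy to reproduce: the same accented character is stored as different bytes in the two encodings, so Latin-1 bytes read as UTF-8 come out wrong. The Python sketch below shows this, together with one possible fallback. The fallback is only an illustration and not something LORLS is stated to do.

```python
def decode_entry(raw: bytes) -> str:
    """Decode as UTF-8, falling back to Latin-1 for legacy entries."""
    try:
        return raw.decode("utf-8")
    except UnicodeDecodeError:
        # Bytes that are not valid UTF-8 are assumed to be Latin-1.
        return raw.decode("latin-1")


utf8_bytes = "é".encode("utf-8")      # two bytes: 0xC3 0xA9
latin1_bytes = "é".encode("latin-1")  # one byte: 0xE9

# Reading the Latin-1 byte as UTF-8 does not crash when errors are
# replaced, but it yields the replacement character instead of "é".
garbled = latin1_bytes.decode("utf-8", errors="replace")
```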