Jason Cooper


Posts by Jason Cooper

Improving performance

As we seem to have reached a stable period for our codebase, I decided it was time to start digging into LUMP’s code to see where we could make some performance gains.

I took the standard approach to performance work: installing a profiler (NYTProf) on LUMP’s development server and configuring Apache to use it for Perl CGI scripts. After a short period of profiling, three places where the performance could be improved were identified.

The first improvement was the removal of a system call (using backticks) to the whoami program to identify a user’s login. Normally this wouldn’t make much difference, but the call was in a subroutine that would be called 30 or 40 times from the FastGetStructuralUnit script. Having to spawn a new process to run whoami each time pushed this line to the top of the profiler’s output. A quick change from `whoami` to getlogin() and the line disappeared from the profiler’s list of time-consuming lines.

The second improvement was tucked away in the BaseSQL module. The MySQL call to get the ID of the last inserted item was regularly appearing in the profiler’s output as a slow call. Upon digging into the code I saw the line

$sql = "select LAST_INSERT_ID() from ".$self->{_table};

This is a very instinctive line to write when you want the ID of the last item inserted into a specific table (almost always inserted by the previous SQL call). The problem is that LAST_INSERT_ID() returns the ID of the last row inserted in the current session, not in the specified table. Calling it the way we were was a big waste of time. Here is an example to demonstrate why.

mysql> select count(LAST_INSERT_ID()) from data_element;

1933973

Called the way we were calling it, MySQL would pass back a row, consisting of the LAST_INSERT_ID() value, for every row in the table we specified. The solution was simply to drop the from clause, so that only a single row comes back:

mysql> select count(LAST_INSERT_ID());

1

This change actually knocked a second off the running time of some of our slower APIs and also reduced the load on the MySQL server.
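To see why the from clause matters, here is a small JavaScript sketch that mimics the behaviour with an in-memory array. It is not database code, and the names (`selectExprFrom`, `selectExpr`, the value 42 and the 1000-row table) are made up for illustration: an expression selected from a table is evaluated once per row, while the same expression without a table produces exactly one row.

```javascript
// Hypothetical stand-in for a MySQL session; illustration only.
const lastInsertId = 42; // assumed value LAST_INSERT_ID() would return

// "SELECT <expr> FROM table": the expression is evaluated once per row.
function selectExprFrom(table, expr) {
  return table.map(() => expr());
}

// "SELECT <expr>" with no FROM clause: exactly one row comes back.
function selectExpr(expr) {
  return [expr()];
}

const dataElement = new Array(1000).fill({}); // pretend table with 1000 rows

const slow = selectExprFrom(dataElement, () => lastInsertId);
const fast = selectExpr(() => lastInsertId);

console.log(slow.length); // 1000
console.log(fast.length); // 1
```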

The third factor that stood out as slowing down most API calls was the module being used to build the XML response, XML::Mini. XML::Mini is a very powerful module for processing very complex XML documents. We didn’t need much of its potential, as our XML structure was deliberately kept simple. An hour later we had produced XMLify, which takes the same hash reference we passed to XML::Mini and produces compatible output. By using our own XMLify subroutine we managed to reduce the run time of our large FastGetStructuralUnit calls by over 1 second.
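The real XMLify is a Perl subroutine and its exact output format isn’t shown here, so the following is only a rough JavaScript sketch of the general idea: walk a nested structure and emit an element per key. The conventions used (arrays repeat the element, scalars become escaped text) are assumptions for the example, not a description of LUMP’s XML.

```javascript
// Escape the characters that are special in XML text content.
function escapeXml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Turn a nested object into XML: each key becomes an element, arrays repeat
// the element once per entry, and anything else becomes escaped text.
function xmlify(name, value) {
  if (Array.isArray(value)) {
    return value.map((item) => xmlify(name, item)).join("");
  }
  if (value !== null && typeof value === "object") {
    const children = Object.keys(value)
      .map((key) => xmlify(key, value[key]))
      .join("");
    return `<${name}>${children}</${name}>`;
  }
  const text = value === null || value === undefined ? "" : value;
  return `<${name}>${escapeXml(text)}</${name}>`;
}

console.log(xmlify("unit", { id: 7, title: "Fish & Chips", child: [1, 2] }));
// <unit><id>7</id><title>Fish &amp; Chips</title><child>1</child><child>2</child></unit>
```

A serializer this small only works because the structure is kept simple: no attributes, namespaces or mixed content to worry about.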

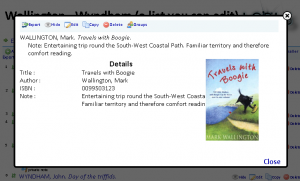

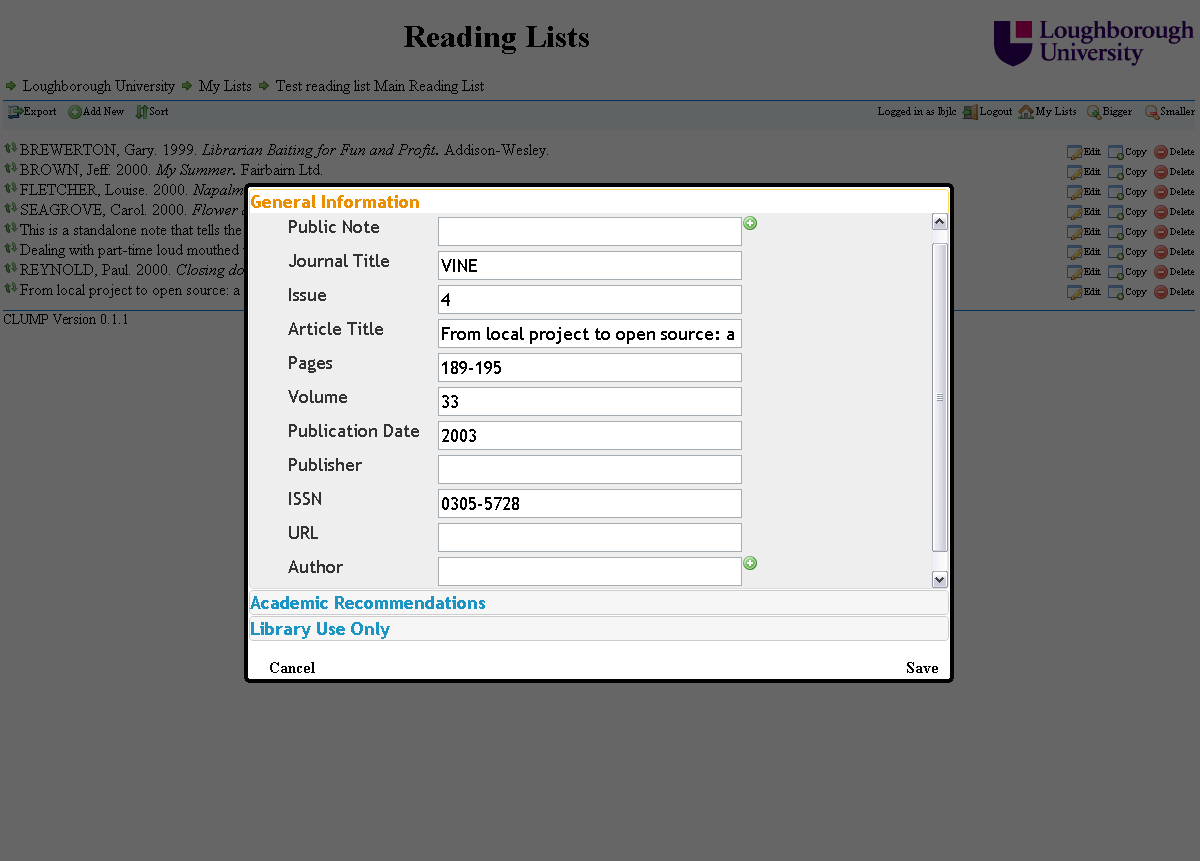

Improving usability via popups

An area of CLUMP that we felt needed some work was the length of time it could take for a list to reload after a user had gone into an item. Having discussed it for a while, we decided to try using a popup for leaf nodes rather than actually moving into them.

Identifying whether something is a leaf node is quite easy: we just need to see if its structural unit type can have any children. If it can, then it isn’t a leaf node and we treat it as normal. If it can’t have any children, then it is a leaf node and, rather than putting in a link to move into the item, we put in a link that displays it in a popup box.
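The leaf check can be sketched in a few lines of JavaScript. The type names and the `allowedChildTypes` table below are hypothetical; in practice the information about which child types a structural unit type allows would come from LUMP.

```javascript
// Hypothetical map from structural unit type to the child types it may contain.
const allowedChildTypes = {
  Module: ["ReadingList"],
  ReadingList: ["Book", "Journal"],
  Book: [],
  Journal: [],
};

// A unit is a leaf when its type cannot have any children.
function isLeaf(unitType) {
  const children = allowedChildTypes[unitType] || [];
  return children.length === 0;
}

// Leaf nodes open in a popup; everything else is navigated into as normal.
function linkActionFor(unit) {
  return isLeaf(unit.type) ? "popup" : "navigate";
}

console.log(linkActionFor({ type: "Book" })); // popup
console.log(linkActionFor({ type: "ReadingList" })); // navigate
```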

The popup boxes have made a great improvement to the usability of CLUMP for both students and staff. When viewing large lists there is no longer any need for users to wait for a reading list to reload just because they decided to look at an item’s details.

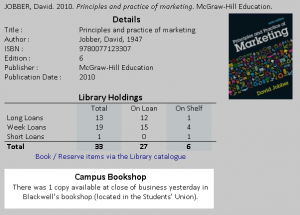

Integration with the campus bookshop

New bulk functions and flags

Since we went live over a month ago, quite a few new features have been added and old features tweaked. The two biggest new features are bulk functions and flags.

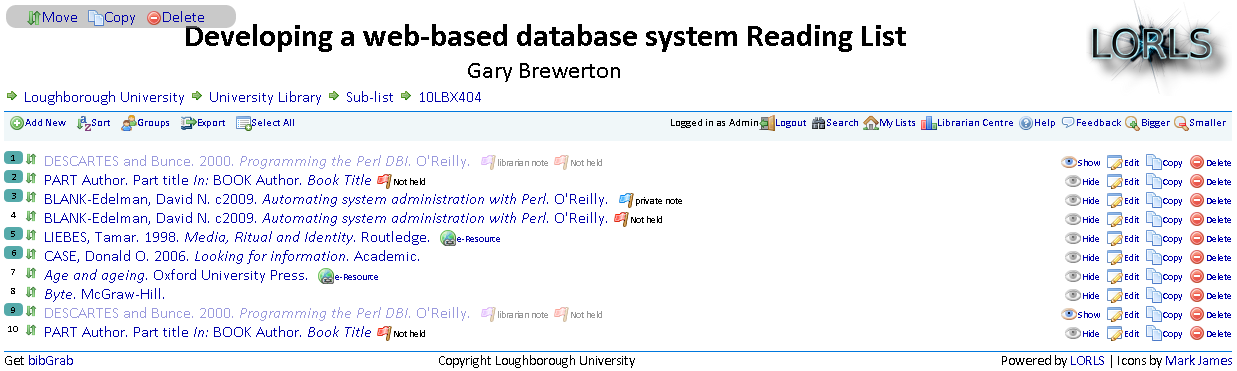

Bulk Functions

Bulk functions help editors of large lists who want to move/reorder/copy/delete multiple items.

To select items the user simply clicks on each item’s rank number, which is then highlighted to show which items are selected. When any items are selected the bulk functions menu appears at the top left. There are currently three bulk functions:

- Move
  - Moves the selected items to a point specified in the list. The items being moved can also be sorted at the same time.
- Copy
  - Copies the selected items to the end of the specified reading lists.
- Delete
  - Deletes the selected items.

Flags

Another new feature is the inclusion of flags for certain situations.

- Private Note
  - If an item has one or more private notes attached to it and the user has permission to access them, then this flag is shown. If the user hovers the cursor over the flag, they get to see the private notes without having to edit the item.
- Librarian Note
  - If an item has one or more librarian notes attached to it and the user has permission to access them, then this flag is shown. If the user hovers the cursor over the flag, they get to see the librarian notes without having to edit the item.
- Not Held
  - This flag is a little more complicated than the previous ones. It is shown if the user is able to see the library-only data for the item, the item is a book or journal, it is marked as not being held by the library, it doesn’t have a URL data element and it is not marked as “Will not purchase”. Or, to put it another way, it highlights to librarians the items on a list that they may want to investigate buying.
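The conditions for the Not Held flag read naturally as a single boolean expression. Here is a minimal sketch; the property names (`canSeeLibraryOnlyData`, `type`, `held`, `url`, `willNotPurchase`) are invented for the example and are not CLUMP’s actual data model.

```javascript
// Returns true when the "Not Held" flag should be shown for this item.
// All property names here are hypothetical.
function showNotHeldFlag(item, user) {
  return Boolean(
    user.canSeeLibraryOnlyData &&
      (item.type === "Book" || item.type === "Journal") &&
      item.held === false &&
      !item.url &&
      !item.willNotPurchase
  );
}

const librarian = { canSeeLibraryOnlyData: true };
const student = { canSeeLibraryOnlyData: false };
const missingBook = { type: "Book", held: false };

console.log(showNotHeldFlag(missingBook, librarian)); // true
console.log(showNotHeldFlag(missingBook, student)); // false
```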

AJAX performance boosts

Just recently I have been looking at tweaks that I can make to improve the performance of CLUMP. Here are the ones that I have found make a difference.

Set up Apache to use gzip to compress things before passing them to the browser. It doesn’t make much difference on the smaller XML results being returned, but on the large chunks of XML it reduces the size quite a lot.

Here is an extract of the Apache configuration file that we use to compress text, HTML, JavaScript, CSS and XML files before sending them.

# compress text, html, javascript, css, xml:

AddOutputFilterByType DEFLATE text/plain

AddOutputFilterByType DEFLATE text/html

AddOutputFilterByType DEFLATE text/xml

AddOutputFilterByType DEFLATE text/css

AddOutputFilterByType DEFLATE application/xml

AddOutputFilterByType DEFLATE application/xhtml+xml

AddOutputFilterByType DEFLATE application/rss+xml

AddOutputFilterByType DEFLATE application/javascript

AddOutputFilterByType DEFLATE application/x-javascript

Another thing to watch for is outstanding AJAX requests. If you have a lot of requests queued up and the user clicks on something that makes them irrelevant, the browser will still process them. Cancelling them frees up the browser to get straight on with the new AJAX requests.

This can be very important on Internet Explorer 7 and below, which only allow 2 concurrent connections to a server over HTTP/1.1. If the unneeded AJAX requests are left to complete rather than being cancelled, it can take Internet Explorer a while to clear the queue, processing only 2 requests at a time.

The good news is that Internet Explorer 8 increases the number of concurrent connections to 6, assuming that you have at least a broadband connection speed, which brings it back in line with most other browsers.
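One way to make cancelling easy is to keep a register of in-flight requests. The `RequestTracker` class below is a hypothetical helper, not CLUMP’s code; it works with anything that has an `abort()` method, which in the browser includes XMLHttpRequest objects. The stub requests at the end stand in for real ones so the sketch can run anywhere.

```javascript
// Keeps track of in-flight requests so they can all be cancelled at once.
class RequestTracker {
  constructor() {
    this.pending = new Set();
  }

  // Register a request when it is sent.
  add(request) {
    this.pending.add(request);
    return request;
  }

  // Call from the request's completion handler.
  done(request) {
    this.pending.delete(request);
  }

  // Abort everything still outstanding, e.g. when the user clicks elsewhere.
  cancelAll() {
    for (const request of this.pending) {
      request.abort();
    }
    this.pending.clear();
  }
}

// Stub requests that just record when they are aborted.
const aborted = [];
const makeStub = (name) => ({ abort: () => aborted.push(name) });

const tracker = new RequestTracker();
const first = tracker.add(makeStub("first"));
tracker.add(makeStub("second"));
tracker.done(first); // "first" completed normally
tracker.cancelAll(); // only "second" is still outstanding

console.log(aborted); // [ 'second' ]
```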

Searching now added to CLUMP

The last thing I was working on before Christmas was adding searching to CLUMP. CLUMP’s search function uses LUMP’s FindSuid API to find a list of Module structural units which contain the search term in the selected data element (at the minute the data elements supported are Module Title, Module Code and Academic’s name).

There are two reasons that CLUMP searches for modules rather than reading lists. The main reason is that if a module has multiple reading lists, it is better to take the user to the module so they can see all the related reading lists.

The second reason is that all the current data elements that can be searched are related to the module structural units and not the reading list structural units, and it would be a bit convoluted to get a list of module structural units and then look up the reading lists for each one.

‘Copy To’ Added to CLUMP

I have now added the ‘Copy To’ functionality to CLUMP. It presents the owner with a list of their reading lists with checkboxes, and they can select which ones they want to copy the item to. Once they have chosen the lists, they click ‘copy’ and it calls LUMP’s CopySU API to copy the structural unit to each reading list selected.

Because the CopySU API can take a while to run at the minute, I use the asynchronous aspect of JavaScript to make all the CopySU calls without waiting for the previous one to complete. This led to the problem of “how do I wait till all the calls have completed?”. There is no “wait till all my callbacks have run” option in JavaScript, so I ended up having to increment a counter for each call and then have the callback function decrement the counter. If the counter reaches 0 then the callback function runs the code that we need to run after all of the CopySU API calls have completed (in this case, close the popups and reload the current structural unit if it was one of the targets).
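The counter technique can be sketched as follows. `runAll` and `fakeCopySU` are hypothetical names for this example; the real code calls LUMP’s CopySU API, whose callbacks fire asynchronously. The stub here calls back immediately just to keep the example deterministic.

```javascript
// Start task(item, done) for every item without waiting between calls, then
// call onAllDone once every callback has fired.
function runAll(items, task, onAllDone) {
  let outstanding = items.length; // count every call before any can finish
  if (outstanding === 0) {
    onAllDone();
    return;
  }
  items.forEach((item) => {
    task(item, () => {
      outstanding -= 1;
      if (outstanding === 0) {
        onAllDone();
      }
    });
  });
}

// Stand-in for an API call; a real one would call back some time later.
const copied = [];
function fakeCopySU(listId, done) {
  copied.push(listId);
  done();
}

let finishedCount = 0;
runAll(["list-1", "list-2", "list-3"], fakeCopySU, () => {
  finishedCount += 1; // e.g. close the popups and reload the current unit
});

console.log(copied.length, finishedCount); // 3 1
```

Setting the counter to the total number of calls up front, rather than incrementing it as each call is made, guards against the count touching 0 early if a callback happens to fire before the remaining calls have been started.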

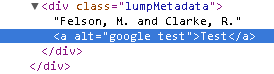

Defining Allowed Inline HTML

Jon and I were chatting the other day about a course he had recently attended. It had covered the common types of attacks against web-based systems and good practice to defend against them. I was relieved that the results of the course could be summed up by my existing knowledge:

Validate your inputs and validate your outputs

Anything coming into the system needs to be validated and anything leaving the system needs to be validated. With LORLS v6 having a back-end system and multiple front-end systems, things are a little more difficult. One front-end system may have a requirement to allow one set of HTML tags while another front-end system needs to not display some of those tags.

This led us to the conclusion that the back-end should make sure that it isn’t vulnerable to SQL injection attacks and the front-ends should make sure they aren’t vulnerable to XSS-style attacks.

This left me looking at CLUMP and trying to figure out what HTML tags should be allowed. After thinking about it for a while I came to the conclusion that this will need to be configurable, as I was bound to miss one that would break an imported reading list. I also realised that it will need to go deeper than tags, down to which attributes each tag will allow (we don’t really want to support the onclick type of attribute).

The final solution we decided on is based around a configurable white-list. This lets us state which tags are accepted and which are dropped. For those accepted tags we can also define what attributes are allowed and provide a regular expression to validate each attribute’s value. If there is no regular expression to validate the attribute then the attribute will be allowed but without any value, e.g. the noshade attribute of the hr tag.

Getting the tag part working was easy enough. The problem came when trying to figure out what the attributes for each tag in the metadata were. After initially thinking about regular expressions and splitting strings on spaces and other characters, I realised that it would be a lot easier and saner to write a routine to process the tag’s attributes one character at a time, building up attributes and their values. I could then handle those attributes that have strings as values (e.g. alt, title, etc.).

As a test I altered an item’s author to contain

<a onclick="alert('xss');" href="javascript:alert('xss');" alt = "google test">Test</a>

The a tag is currently allowed and the href and alt attributes are also allowed. The alt validation pattern is set to only allow alphanumeric and white-space characters, while the href validation pattern requires the value to start with http:// or https://. This is how CLUMP generates the a tag for the test entry.

The onclick attribute isn’t valid and so has been dropped. The href attribute didn’t start with http:// or https:// so has also been dropped. The alt attribute, on the other hand, matches the validation pattern and so has been included.
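To make the approach concrete, here is a rough sketch of a white-list filter with a character-at-a-time attribute parser. It is not CLUMP’s actual code: the `allowedTags` structure, the function names and the exact patterns are assumptions based on the description above.

```javascript
// Hypothetical white-list: tag -> attribute -> validation pattern.
// A null pattern means the attribute is allowed but carries no value.
const allowedTags = {
  a: { href: /^https?:\/\//, alt: /^[A-Za-z0-9\s]*$/ },
  hr: { noshade: null },
};

const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);
const isSpace = (ch) => /\s/.test(ch);

// Walk the attribute text one character at a time, collecting name/value
// pairs. Values may be bare words or quoted with ' or ".
function parseAttributes(text) {
  const attrs = [];
  let i = 0;
  while (i < text.length) {
    while (i < text.length && isSpace(text[i])) i++;
    let name = "";
    while (i < text.length && !isSpace(text[i]) && text[i] !== "=") {
      name += text[i++];
    }
    if (name === "") break; // nothing left, or malformed input
    while (i < text.length && isSpace(text[i])) i++;
    let value = null; // stays null for value-less attributes
    if (text[i] === "=") {
      i++;
      while (i < text.length && isSpace(text[i])) i++;
      value = "";
      const quote = text[i] === '"' || text[i] === "'" ? text[i++] : null;
      while (i < text.length && (quote ? text[i] !== quote : !isSpace(text[i]))) {
        value += text[i++];
      }
      if (quote) i++; // skip the closing quote
    }
    attrs.push({ name: name.toLowerCase(), value });
  }
  return attrs;
}

function escapeAttr(value) {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Rebuild an opening tag, keeping only white-listed attributes whose values
// match their pattern. Returns "" when the tag itself is not allowed.
function filterOpeningTag(tagName, attributeText) {
  const tag = tagName.toLowerCase();
  if (!has(allowedTags, tag)) return "";
  const rules = allowedTags[tag];
  const kept = [];
  for (const { name, value } of parseAttributes(attributeText)) {
    if (!has(rules, name)) continue;
    const pattern = rules[name];
    if (pattern === null) {
      kept.push(name);
    } else if (value !== null && pattern.test(value)) {
      kept.push(`${name}="${escapeAttr(value)}"`);
    }
  }
  return `<${tag}${kept.map((attr) => " " + attr).join("")}>`;
}

const testAttrs = ` onclick="alert('xss');" href="javascript:alert('xss');" alt = "google test"`;
console.log(filterOpeningTag("a", testAttrs)); // <a alt="google test">
```

Run against the test entry, the sketch drops onclick (not on the list) and href (fails its pattern) and keeps alt, which agrees with the behaviour described above.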

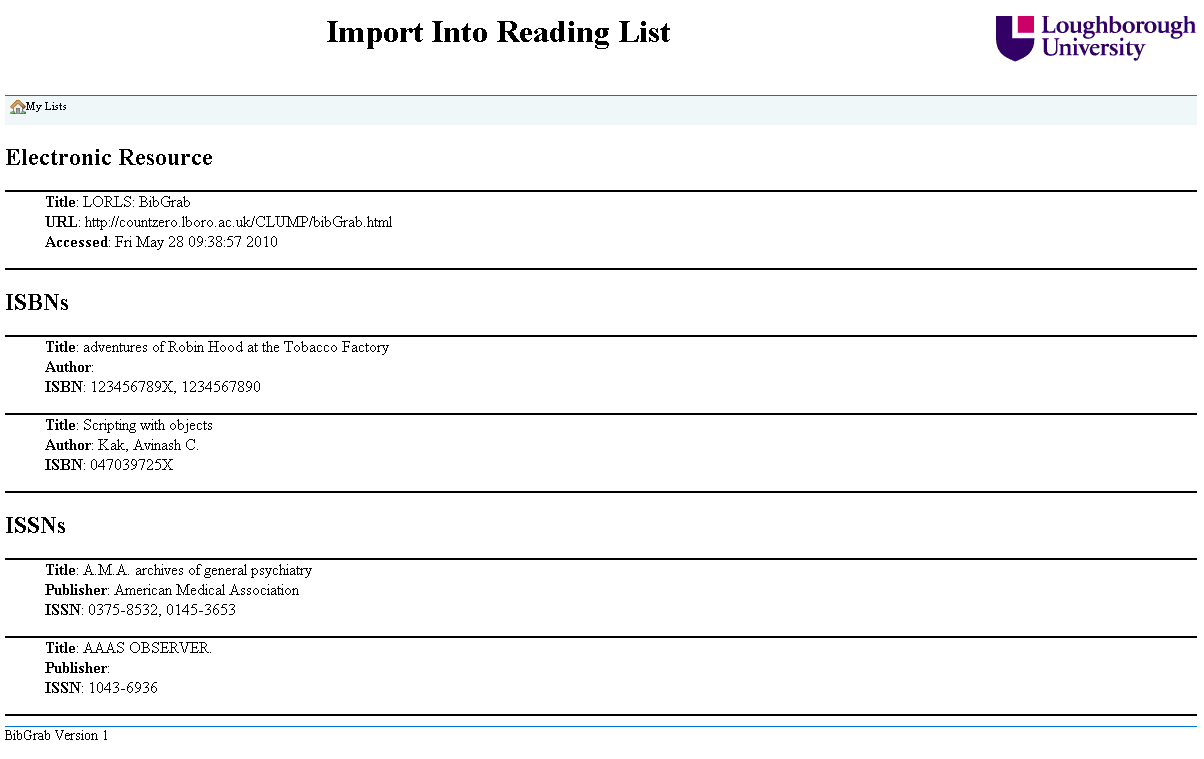

BibGrab

For a long time we have been told that staff want an easy way to add an item to a reading list. To make item entry easier the data entry forms for LORLS v6 are specific to the type of item being added. This should help avoid confusion when people are asked for irrelevant metadata (e.g. asking for an ISBN for a web page).

Recently I have been working on BibGrab, our tool to allow staff to add items to their reading list from any web page that has an ISBN or ISSN on it. BibGrab consists of two parts. The first part is a piece of JavaScript that is added as a bookmark to their browser; when they select that bookmark in future, the JavaScript is run with access to the current page. The second part is a CGI script that sits alongside CLUMP, processes the information and presents the options to the users.

The bookmark JavaScript code first decides what the user wants it to work with. If the user has selected some text on the page then it works with that; otherwise it uses the whole page. This helps if there are a lot of ISBNs/ISSNs on the page and the user is only interested in one of them.

It then prepends the current page’s URL and title to that text, which lets BibGrab offer the option of adding the web page to a reading list as well as any ISBNs/ISSNs found. This information is then used to populate a form that it appends to the current page. The form’s target is set to ‘_blank’ to open a new window and the action of the form is set to the CGI script. Finally the JavaScript submits the form.
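The choice between selection and whole page can be sketched as a small pure function. The `buildPayload` name, the shape of the `page` object and the newline-separated layout are all assumptions for illustration; in a browser the fields would typically be filled from `window.getSelection().toString()`, `document.body.innerText`, `location.href` and `document.title`.

```javascript
// Decide what text to send: the user's selection if there is one, otherwise
// the whole page, with the page URL and title prepended.
// The newline-separated layout is an assumption, not BibGrab's real format.
function buildPayload(page) {
  const selected = (page.selection || "").trim();
  const body = selected !== "" ? selected : page.bodyText;
  return [page.url, page.title, body].join("\n");
}

const examplePage = {
  url: "http://example.org/books",
  title: "Some books",
  bodyText: "ISBN 0306406152 and ISBN 9780306406157",
  selection: "",
};

console.log(buildPayload(examplePage));
console.log(buildPayload({ ...examplePage, selection: "0306406152" }));
```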

The CGI script takes the input from the form and then searches it for the web page details the JavaScript added and any possible ISBNs and ISSNs. The ISBNs and ISSNs then have their check digits validated and any that fail are rejected. The remaining details are then used to put together a web page that uses JavaScript to look up the details for each ISBN and ISSN and display them to the user. The web page requires the user to be logged in. As it uses CLUMP’s JavaScript functions for a lot of the work, it can see whether they have already logged into CLUMP that session and, if they haven’t, ask them to log in.
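The check digit rules themselves are standard, so they are easy to sketch. BibGrab does this step in its CGI script; the JavaScript below is only an illustration, and the function names are made up for the example.

```javascript
// Strip hyphens and spaces so "0-306-40615-2" and "0306406152" compare equal.
function normalise(code) {
  return code.replace(/[\s-]/g, "").toUpperCase();
}

// ISBN-10: weights 10 down to 1, total must be divisible by 11.
// "X" stands for 10 and is only valid as the final character.
function isValidIsbn10(code) {
  const s = normalise(code);
  if (!/^\d{9}[\dX]$/.test(s)) return false;
  let sum = 0;
  for (let i = 0; i < 10; i++) {
    const digit = s[i] === "X" ? 10 : Number(s[i]);
    sum += digit * (10 - i);
  }
  return sum % 11 === 0;
}

// ISBN-13: weights alternate 1, 3, 1, 3...; total must be divisible by 10.
function isValidIsbn13(code) {
  const s = normalise(code);
  if (!/^\d{13}$/.test(s)) return false;
  let sum = 0;
  for (let i = 0; i < 13; i++) {
    sum += Number(s[i]) * (i % 2 === 0 ? 1 : 3);
  }
  return sum % 10 === 0;
}

// ISSN: weights 8 down to 1, total must be divisible by 11; "X" stands for 10.
function isValidIssn(code) {
  const s = normalise(code);
  if (!/^\d{7}[\dX]$/.test(s)) return false;
  let sum = 0;
  for (let i = 0; i < 8; i++) {
    const digit = s[i] === "X" ? 10 : Number(s[i]);
    sum += digit * (8 - i);
  }
  return sum % 11 === 0;
}

console.log(isValidIsbn10("0-306-40615-2")); // true
console.log(isValidIsbn13("978-0-306-40615-7")); // true
console.log(isValidIssn("0378-5955")); // true
console.log(isValidIsbn10("0-306-40615-3")); // false
```

Validating the check digit is a cheap way to throw out most of the random digit strings that a scan of arbitrary page text will pick up, before any lookups are attempted.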

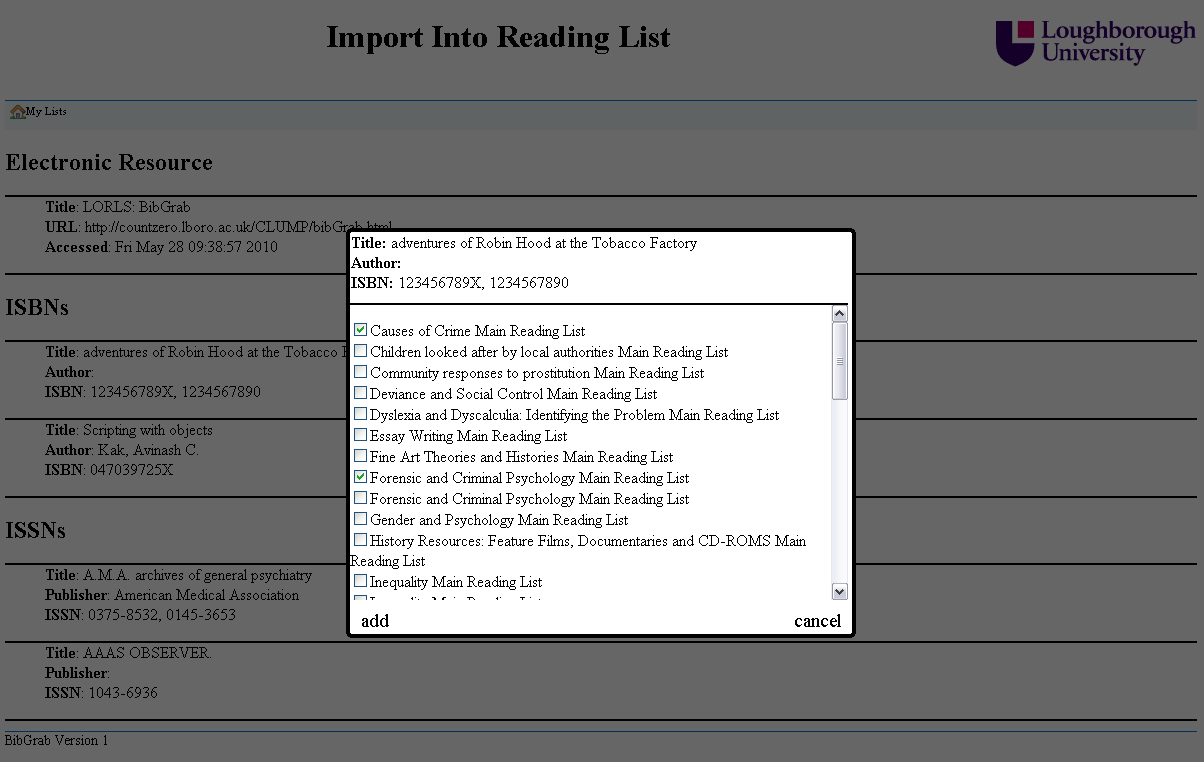

Once logged in they can see all the items that BibGrab found.

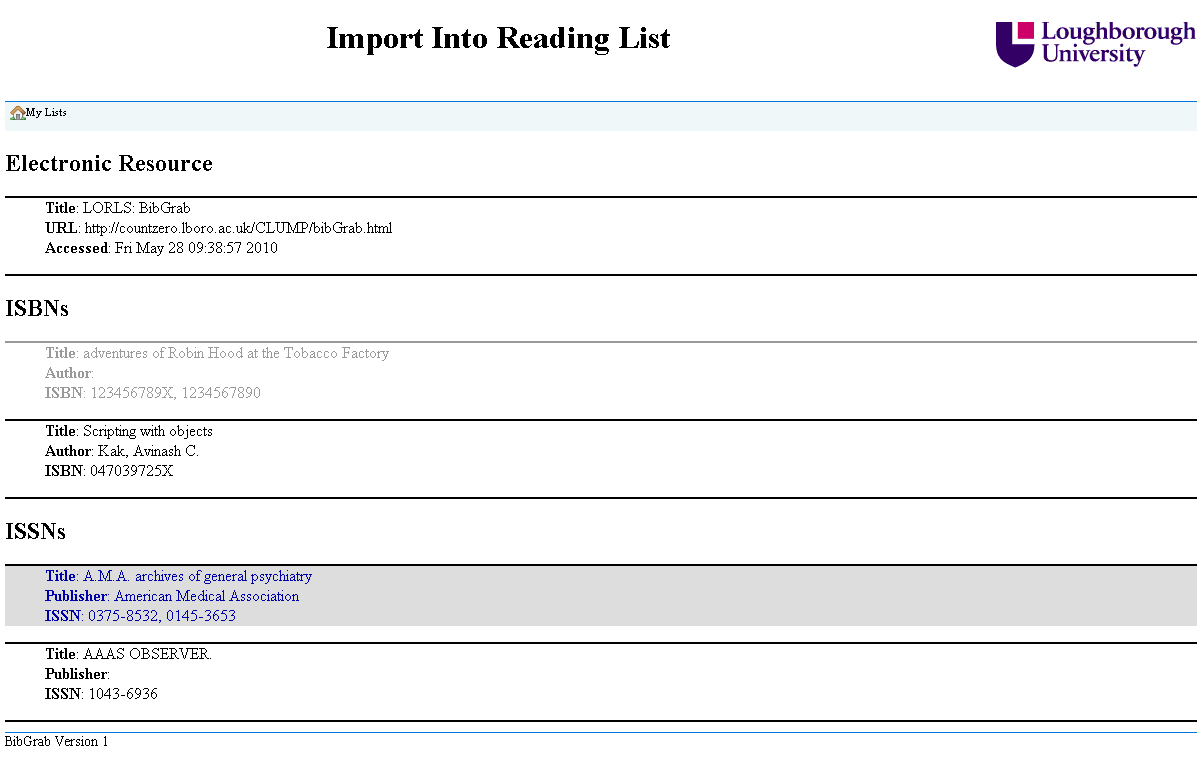

When they select an item they are presented with all the details for that item and, if it is a journal, with some boxes for adding details to identify a specific article, issue or volume. They are also presented with a list of their reading lists, of which they can select as many as they like, and when they click add the item is added to all their selected reading lists. The item is added to the end of each reading list in draft mode. This makes it easier for people to add items to their reading lists when they find them without worrying about how it will affect their list’s layout.

After the item has been added to their chosen reading lists it is grayed out as a visual indication that it has already been used. They can still select it again and add it to even more reading lists if they want or they can select another item to add to their reading lists.

Another Debugging Tip

As we reach the stage where we will be demoing LORLS v6 more often, I figured it was time to make my debugging code easy to switch off. This resulted in two new JavaScript functions, debug and debugWarn. Both are wrappers that first check the global variable DEBUG and, if it is set, call the relevant method of the console object (either log or warn).

Now to switch off debug messages we simply set DEBUG to 0, and to switch them back on we set it to 1.
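A minimal sketch of what such wrappers might look like is below. The names debug, debugWarn and DEBUG come from the description above, but the bodies are a guess rather than the actual LORLS code.

```javascript
// Global switch: set to 0 to silence debugging output, 1 to enable it.
let DEBUG = 1;

// Forward to console.log only when debugging is switched on.
function debug(...args) {
  if (DEBUG) {
    console.log(...args);
  }
}

// Forward to console.warn only when debugging is switched on.
function debugWarn(...args) {
  if (DEBUG) {
    console.warn(...args);
  }
}

debug("loading structural unit", 42); // printed while DEBUG is 1
DEBUG = 0;
debug("this message is suppressed"); // nothing printed
DEBUG = 1;
```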