LORLS

Installer progress…

Writing a web driven installer for LUMP in Perl is proving to be, er, “interesting”. Today has seen some useful progress and it’s probably worth noting some of the tricks used (mostly because they’re rather esoteric and bug hunting might be fun in a few years’ time when I’ve forgotten why I did what I did!).

The first thing I had to get going was bootstrapping the required CPAN modules as documented in my previous post. This is now working OK. A few gotchas to note:

- When you fork off a child process from a CGI script that you want to be “long lived” (ie outlive the parent process that has returned some HTML to the user), you need to close down STDOUT, STDIN and STDERR, otherwise Apache hangs around waiting for the child to finish. This can actually be handy, as you can then reopen file handles with those names pointing elsewhere (such as to a session file) and record the output of other tools that the child calls upon (such as the very chatty CPAN::Shell).

- CPAN::Shell doesn’t appear to return a useful error status, so programmatically you can’t tell if your module install worked or not (the verbose output is designed to tell a human). To find out if a module is installed, you have to try to “use” that module again – once installed the use statement should work.

- Talking of use statements, it’s worth noting that these are best done inside eval()s, as that allows you to capture errors (as in the case of a module that has failed to install). You have to be careful whether you have compile time or run time binding though, especially if you’re eval()ing stuff with variables in that you’re planning on instantiating during the run. perl -cw is your friend as usual, warning you about dodgy compile time issues.

Once I could successfully install CPAN modules into a private LUMP modules directory, the next thing to consider was the database. The installer CGI script asks the user for the database name, database account and database password to use and then checks to see if it can gain access. For most noobs this won’t work as they won’t have a database/account/password, so the CGI script traps that error and asks if they’d like it to create the database for them. This requires them to supply the database root password; the script then (assuming it’s right) creates the database and grants SELECT/INSERT rights to the named user identified by the given password.

The CGI script then checks that this user can connect to the database – if they can’t then something has gone wrong with the basic creation and we have to tell them to fiddle by hand. Assuming that is OK, it then changes back to the database root user to do the schema creation (as the user only has SELECT/INSERT privs). This however called for another hack: we’ve kept the database schema and index definitions in SQL text files. During development we’ve just source’d these in with the mysql command line client – but this won’t work from Perl’s DBI interface and I don’t want to rely on finding the mysql command line client and system()’ing out to it.

So my next trick was another Perl script: the installer_maker. This is a simple filter script that reads a “template” installer script in and writes out a proper installer with a couple of lines in the template replaced by some Perl array structures – one for the table schema and one for the indexes. These arrays simply contain one SQL statement in each element that the installer_maker has ripped out of the LUMP schema and indexes SQL text files. Bingo! We can now carry on tweaking the schema/indexes in the files we’re used to in development, whilst not having to remember to do lots of code twiddling to keep the installer in sync – we just need to run installer_maker before making a distribution. Hopefully that should result in less chance of our dev schemas getting out of step with released production schemas and the bugs that could result from that.
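The filtering idea can be sketched in a few lines. The real installer_maker is a Perl script; the version below is JavaScript purely for illustration, and the function names, the marker text and the naive split on semicolons are all assumptions rather than what installer_maker actually does.

```javascript
// Naive splitter: drops "--" comment lines, then cuts the text at each ";".
// It does not cope with semicolons inside SQL string literals.
function splitSqlStatements(sqlText) {
  const withoutComments = sqlText
    .split('\n')
    .filter((line) => !/^\s*--/.test(line))
    .join('\n');
  return withoutComments
    .split(';')
    .map((statement) => statement.trim())
    .filter((statement) => statement !== '');
}

// Render the statements as a Perl list of single-quoted strings,
// one SQL statement per element.
function toPerlArray(name, statements) {
  const quoted = statements.map(
    (s) => "  '" + s.replace(/\\/g, '\\\\').replace(/'/g, "\\'") + "',"
  );
  return 'my @' + name + ' = (\n' + quoted.join('\n') + '\n);';
}

// Replace a marker line in the template installer with generated code.
// The marker text itself is a made-up placeholder.
function fillTemplate(template, marker, replacement) {
  return template
    .split('\n')
    .map((line) => (line.trim() === marker ? replacement : line))
    .join('\n');
}
```

Running the schema file and the indexes file through the same three steps would give the two arrays the installer needs, regenerated from the development SQL files each time a distribution is built.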

So it’s looking promising now, and from a user perspective is probably far friendlier than the command line Perl installer from LORLS. The next thing to write is a routine in the installer that can check the schema and indexes in a live database against the schema/indexes we have now got embedded in the installer. If they differ in some way we need to tell the admin running the installer and let them decide whether to ignore the difference (bad idea probably), tweak it by hand or (preferably) let the installer tweak their schema/indexes. Hopefully in the long term this will be a useful place to hang LUMP schema/index upgrades on – folk could use the installer not only to load new versions of the Perl code but also update their database structure. That’ll need some serious testing though… 🙂

Hello world

We’ve been developing a new version of LORLS (Loughborough Online Reading List System) for the past few years on and off. As part of this development process we’ve kept a diary of our thoughts, plans, issues and achievements. Up until now we’ve kept this diary private among ourselves, however as we’re now at a stage when we can start releasing information about the new version, both internally and externally, we thought it would be a good idea to go public with the diary.

So here it is.

LORLS’s Installer

We’re getting to the point with LUMP and CLUMP where Jason and I are thinking about installers. If we were just going to use the code at Loughborough this would be a moot point – we’d just copy the various directories around and tweak under the hood to get the thing to work as we want, just as we have done during development.

However it’s not going to just be us that use it (or at least hopefully it won’t be!) so we need to think of a nice way for people to install it. The previous versions of LORLS have a Perl command line script that I wrote years ago that asks lots of questions and then does its funky thang. It works, but it could be friendlier, especially for the newbie admins. I’ve also seen a number of PHP based packages that allow you to do most of the installation via a web browser, which is rather nice for the new admins, so I thought, “Hey, why not make an installer CGI script that assumes very little about the system other than that Perl is there, a MySQL database server is running, the CGI.pm module is available (it has been part of the Perl distribution since 5.004, so should already be there on most machines installed in the 21st century) and the installer itself is an executable CGI script?” Why not indeed…

Obviously the prospective LUMP admin needs to install (or have installed for him) Perl, MySQL and a webserver configured to execute CGI scripts in the directory that we tell them to unpack LUMP into, but once that’s done surely we can then check to make sure that all the other modules are present, install them if they aren’t, set up the MySQL database ready for LUMP to use and then configure all the LUMP scripts so that they are ready to go, all with a nice point and drool web interface?

Checking if a Perl module is available is relatively easy, thanks to the eval { } function in Perl. For example, say we want to check if Data::Dumper is installed. That can be done using some code such as:

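# 'use' runs at compile time, so a block eval cannot trap its failure;
# 'require' plus import() does the same job at run time.
eval { require Data::Dumper; Data::Dumper->import(); };
if($@) {
    # Module missing
} else {
    # Module present, and available for use
}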

Shimples!

However things start to get “interesting” if the module isn’t installed. I thought this would be easy, as the Comprehensive Perl Archive Network (CPAN) has a nice module that I’ve used for years to interactively install and update Perl modules with – things like:

perl -MCPAN -e 'install Data::Dumper'

It has a programmatic option as well as an interactive side, so we’re sorted right? Well no, we’re not. Unfortunately the programmatic side really just generates the stream of stuff you see when you run the interactive side. If you include something like:

my $result = CPAN::Shell->install('Data::Dumper');

in your CGI script, eventually you’ll get a result in the web browser of a load of unformatted raw text from this command interspersed with your active HTML. The $result variable on the other hand will stay completely empty, with no indication as to whether the installation has worked or not. Pants – not what we want here.

The long and short of it is, to get round this “feature” in CPAN::Shell it seems that you have to do a bit of fork() action. In other words fork off a child process to run the CPAN::Shell method in and then, back in the parent, capture its STDOUT stream into a variable which can then be scanned with a regexp or two looking for signs of success in the output text. Not terribly clean but it works.

There’s another “gotcha” as well: the web server is unlikely to be running as root (or at least it shouldn’t be!) and so the CGI script can’t install Perl modules into the system library directories. This is a more minor pain: you can tell CPAN::Shell that it should do a local installation to a place that the user executing it can write to. So that’s another requirement for running this CGI script: create a directory that’s readable and writable by the user running the web server (usually called something like apache or http) but which isn’t part of the web server document root. In other words, if the web server document root is /var/www/html, we might want to put this LUMP specific CPAN directory tree in /var/www/LUMPCPAN where it can’t be seen by web browsers. You have to hack up a MyConfig.pm to put in this directory and then point @INC and $ENV{'PERL5LIB'} towards it, but that can be done automagically by the CGI installer script once the directory exists.

Now some readers (we do have readers, right?) might be wondering why I don’t use one of the fancy pants CPAN modules such as local::lib or Module::Install to do this rather than tackling CPAN::Shell head on. Well it’s a chicken and egg problem really: I wanted to have the minimum requirements for the installer script to run and, if I’d asked the user to install a load of libraries and modules to make using CPAN easier, I might as well have just given them a list of modules to install. Which actually I have done anyway, just in case they want to install them system wide and/or not trust my installer script to get them right. But nevertheless I’d like to give the newbies (and also folk who have sluggish server admins if they don’t run their own boxes) the option of installing via the CGI installer script. More work for me, but hopefully less for them.

So, it’s a bit of a kludge at the moment but it seems to be the way to go unless anyone else can come up with a better scheme. Once I’m happy it can get the required modules in place, my next trick will be to ask for database server details (database name, username, password, server, port) and then check if the database already exists, or create a fresh one. In the former case I’m also planning on checking the schema in the database against the one in this LUMP distribution and then offer to update it if needs be, and allow fresh databases to either be empty, have some simple test data in them or copy data from another database. Hopefully I can also bolt the importer from older LORLS versions in here so that there’s no command line interaction required at all. With a bit of luck all of those should be a lot less hassle than CPAN::Shell has proved to be.

I hope… 🙂

‘Copy To’ Added to CLUMP

I have now added the ‘Copy To’ functionality to CLUMP. It presents a list of the owner’s reading lists to them with checkboxes and they can select which ones they want to copy the item to. Once they have chosen the lists to copy the item to they click ‘copy’ and it calls LUMP’s CopySU API to copy the structural unit to each reading list selected.

Because the CopySU API can take a while to run at the minute, I use the asynchronous aspect of JavaScript to make all the CopySU calls without waiting for the previous one to complete. This led to the problem of “how do I wait till all the calls have completed?”. There is no “wait till all my callbacks have run” option in JavaScript, so I ended up having to increment a counter for each call and then have the callback function decrement the counter. If the counter reaches 0 then the callback function runs the code that we need to run after all of the CopySU API calls have completed (in this case close the popups and reload the current structural unit if it was one of the targets).
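A minimal sketch of that counter pattern is below. The names copyToAll, copyOne and onAllDone are made up for illustration and are not CLUMP’s real functions; copyOne stands in for whatever wraps the CopySU call. The one small variation from the description is that the counter is set to the number of targets before any call is started, rather than incremented per call, so a callback that fires early can’t see a premature zero.

```javascript
// Run an asynchronous call for every target and fire onAllDone once,
// after the last callback has come back.
// copyOne(target, callback) is a hypothetical stand-in for the real
// CopySU API call; it must invoke callback exactly once per target.
function copyToAll(targets, copyOne, onAllDone) {
  const results = [];
  // Set the counter before starting any call, so that a callback which
  // fires early cannot see a premature zero.
  let pending = targets.length;
  if (pending === 0) {
    onAllDone(results);
    return;
  }
  targets.forEach(function (target, index) {
    copyOne(target, function (result) {
      results[index] = result; // keep results in request order
      pending -= 1;
      if (pending === 0) {
        onAllDone(results); // e.g. close the popups, reload the SU
      }
    });
  });
}
```

The callbacks can arrive in any order; only the one that brings the counter down to zero triggers the follow-up work.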

Defining Allowed Inline HTML

Jon and I were chatting the other day about a course he had recently attended. It had covered the common types of attacks against web based systems and good practice to defend against them. I was relieved that the results of the course could be summed up by my existing knowledge:

Validate your inputs and validate your outputs

Anything coming into the system needs to be validated and anything leaving the system needs to be validated. With LORLS v6 having a back-end system and multiple front-end systems, things are a little more difficult. One front-end system may have a requirement to allow one set of HTML tags while another front-end system may need to not display some of those tags.

This led us to the conclusion that the back-end should make sure that it isn’t vulnerable to SQL Injection attacks and the front-ends should make sure they aren’t vulnerable to the XSS style of attacks.

This left me looking at CLUMP and trying to figure out what HTML tags should be allowed. After thinking about it for a while I came to the conclusion that this will need to be configurable, as I was bound to miss one that would break an imported reading list. I also realised that it will need to go deeper than tags: which attributes will each tag allow (we don’t really want to support the onclick type attributes)?

The final solution we decided on is based around a configurable white-list. This lets us state which tags are accepted and which are dropped. For those accepted tags we can also define what attributes are allowed and provide a regular expression to validate that attribute’s value. If there is no regular expression to validate the attribute then the attribute will be allowed but without any value, e.g. the noshade attribute of the hr tag.

Getting the tag part working was easy enough; the problem came when trying to figure out what the attributes for each tag in the metadata were. After initially thinking about regular expressions and splitting strings on spaces and other characters I realized that it would be a lot easier and saner to write a routine to process the tag’s attributes one character at a time, building up attributes and their values. I could then handle those attributes that have strings as values (e.g. alt, title, etc.).

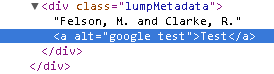

As a test I altered an item’s author to contain

<a onclick="alert('xss');" href="javascript:alert('xss');" alt = "google test">Test</a>

The a tag is currently allowed and the href and alt attributes are also allowed. The alt validation pattern is set to only allow alphanumeric and white-space characters while the href validation pattern requires it to start with http:// or https://. This is how CLUMP generates the a tag for the test entry.

The onclick attribute isn’t valid and so has been dropped; the href attribute didn’t start with http:// or https:// so has also been dropped. The alt attribute on the other hand matches the validation pattern and so has been included.
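For the curious, the white-list and the character-at-a-time attribute parsing might look roughly like the sketch below. This is not CLUMP’s actual code: the ALLOWED table, the function names and the exact regular expressions are assumptions based on the description above, and it only deals with a single opening tag.

```javascript
// Hypothetical white-list: tag -> attribute -> validation pattern.
// A null pattern means "allow the attribute, but without a value".
const ALLOWED = new Map([
  ['a', new Map([['href', /^https?:\/\//], ['alt', /^[A-Za-z0-9\s]*$/]])],
  ['hr', new Map([['noshade', null]])],
]);

// Walk the attribute text one character at a time, collecting
// name/value pairs. Copes with quoted values and spaces around "=".
function parseAttributes(text) {
  const attrs = [];
  const isSpace = (c) => /\s/.test(c);
  const n = text.length;
  let i = 0;
  while (i < n) {
    while (i < n && isSpace(text[i])) i++; // skip leading space
    let name = '';
    while (i < n && !isSpace(text[i]) && text[i] !== '=') name += text[i++];
    if (name === '') { i++; continue; } // stray "=" or end of text
    while (i < n && isSpace(text[i])) i++;
    let value = null; // null means the attribute had no value
    if (text[i] === '=') {
      i++;
      while (i < n && isSpace(text[i])) i++;
      value = '';
      const quote = text[i];
      if (quote === '"' || quote === "'") {
        i++;
        while (i < n && text[i] !== quote) value += text[i++];
        i++; // skip the closing quote
      } else {
        while (i < n && !isSpace(text[i])) value += text[i++];
      }
    }
    attrs.push({ name: name.toLowerCase(), value: value });
  }
  return attrs;
}

// Rebuild an opening tag, keeping only white-listed attributes whose
// values match their pattern. Returns '' if the tag itself is dropped.
function filterTag(tagName, attrText) {
  const tag = tagName.toLowerCase();
  const rules = ALLOWED.get(tag);
  if (!rules) return '';
  let out = '<' + tag;
  for (const { name, value } of parseAttributes(attrText)) {
    if (!rules.has(name)) continue; // attribute not on the list
    const pattern = rules.get(name);
    if (pattern === null) {
      out += ' ' + name; // value-less attribute
    } else if (value !== null && pattern.test(value)) {
      out += ' ' + name + '="' + value.replace(/"/g, '&quot;') + '"';
    }
  }
  return out + '>';
}
```

Feeding it the test tag’s attributes, as in `filterTag('a', 'onclick="alert(\'xss\');" href="javascript:alert(\'xss\');" alt = "google test"')`, gives `<a alt="google test">`, which matches the behaviour described above.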

Copying Structural Units

There’s now a new API CGI script – CopySU. This lets you both copy and link SUs to new parents. For example you might want to copy an existing book within the same reading list if there is another volume with very similar details, or you might want to link a single book SU into more than one reading list. In the latter case a change made to the book SU is reflected in all the reading lists it is linked to, irrespective of the list that it was “edited in”.

Jason now has to munge this into CLUMP so that the copy buttons in there run with this new functionality, as at the moment they are just placeholders that generate a new child SU. Seems to work from the command line/by hand so fingers crossed, eh? 🙂

When to implement?

LORLS v6 (aka LUMP + CLUMP) is now almost at a stage where we can consider going live with it here at Loughborough. Unfortunately we’re too late to launch at the start of the Summer vacation as we need time to fully advertise and train staff on the new system. That means we’ll probably launch the system at the start of the new academic year (October), Christmas or in time for the second semester (February 2011). We’re currently consulting with academic departments and library staff on when they’d prefer and are getting a strong steer that Christmas would be least disruptive for all concerned.

In the meantime we’ll obviously continue to develop and test the system. Alongside this we’re looking to create a sandbox so that staff can play on (and learn about) the system before the official launch – whenever that will be.

Speeding things up (or at least trying to)

Jason has been doing a load of work in the last few months on the CLUMP AJAX front end and it’s looking rather nice. However Gary and Jason had decided that on longer reading lists the performance wasn’t good enough. A 635 item list took over 3 minutes to completely render when Jason pulled the Reading List SU and then did separate XML API calls to retrieve each individual item (the advantage of this over just using a GetStructuralUnit API call with multiple levels is that the users could be presented with some of the data asynchronously as it arrived, rather than having to wait for the back end to process the whole lot).

So the question was: could it be made faster? One option was trying to create a single “mega” SQL select to get the data but that could almost immediately be discounted as we pull different bits of data from different tables for different things in GetStructuralUnit (ie the child SU info and SU/SUT basic info couldn’t easily be munged in with the data element/data type/data type group stuff). So we did two separate selects to get the basic data, ignoring the ACL stuff. These were both subsecond responses on the MySQL server.

Now obviously turning the SQL results into XML has a bit of an overhead, as does the client-server comms, but nowhere near enough to cause this slow down. This pointed the finger firmly in the direction of the ACLs. Every time we pull an SU (or its children) we need to do a load of ACL checks to make sure that the user requesting the data is allowed to see/edit the SU and/or the data type group. When we added ACLs back into the new “fast” XML API the 635 element list took just under two minutes to render. So we’d shaved a third off the time by crafting the SQL rather than using the Perl objects behind the XML API, but it was still a bit slow.

Gary then came up with a bright idea: why not allow FastGetStructuralUnit (the new whizzy version of the GetStructuralUnit XML API CGI script) to accept more than one SU ID at once? That way Jason’s CLUMP AJAX front end could request the outer reading list SU quickly, and then fill in the items but do them in blocks of several at once. We implemented this and had a play around with different numbers of items in the blocks. Five seemed quite good – this was fast enough to fill in the first screenful to keep the user occupied and managed to get the whole 635 item list rendered in the browser in just over a minute – cutting roughly two thirds off the original time.
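The block idea can be sketched as follows. This assumes the blocks are requested one after another so that the top of the list fills in first, which may or may not be how CLUMP schedules them; fetchBlock and renderItems are hypothetical stand-ins for the FastGetStructuralUnit request and the code that draws the items.

```javascript
// Split a list of SU IDs into blocks of blockSize.
function chunk(ids, blockSize) {
  const blocks = [];
  for (let i = 0; i < ids.length; i += blockSize) {
    blocks.push(ids.slice(i, i + blockSize));
  }
  return blocks;
}

// Request the blocks one after another so the first screenful arrives
// first. fetchBlock(ids, callback) is a hypothetical wrapper around a
// FastGetStructuralUnit request carrying several SU IDs; renderItems is
// a hypothetical function that draws the returned items.
function loadItems(ids, blockSize, fetchBlock, renderItems, onDone) {
  const blocks = chunk(ids, blockSize);
  function next(index) {
    if (index >= blocks.length) {
      if (onDone) onDone();
      return;
    }
    fetchBlock(blocks[index], function (items) {
      renderItems(items);
      next(index + 1);
    });
  }
  next(0);
}
```

With a block size of five, the 635 item list becomes 127 requests instead of 635.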

Jason can now also try out more advanced ideas in the future, such as dynamically altering the number of items requested in the blocks based on response time and whether the user is scrolling down to them or not. With lists under 100 items we’re getting a sub-10 second rendering time, so that’s hopefully going to be fast enough for the majority of users… and may even encourage some academics with long and unwieldy reading lists to split them up into smaller sub lists.

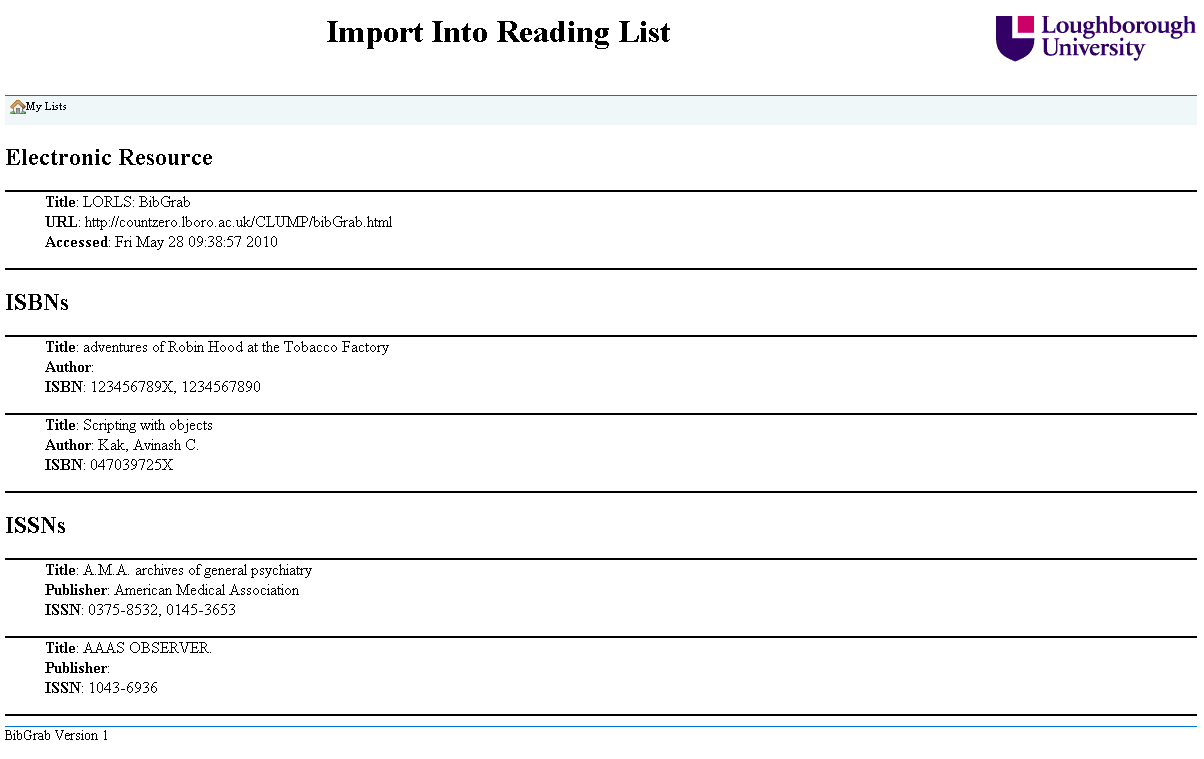

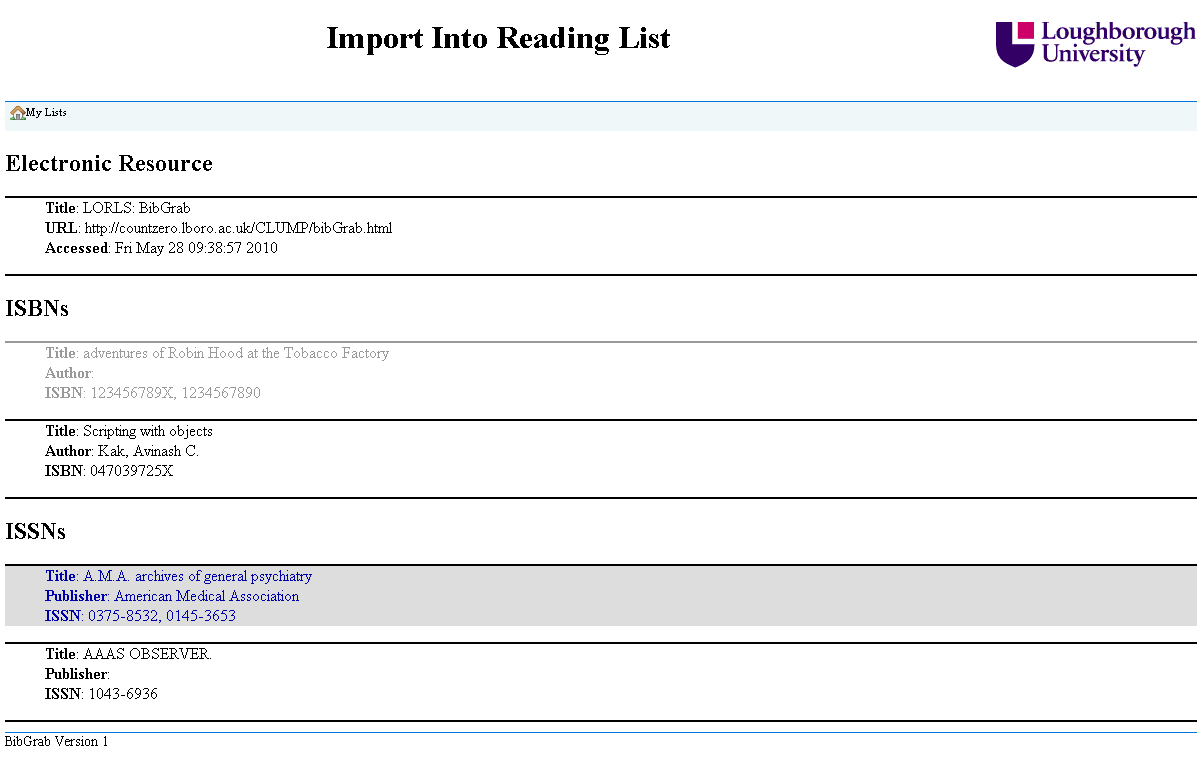

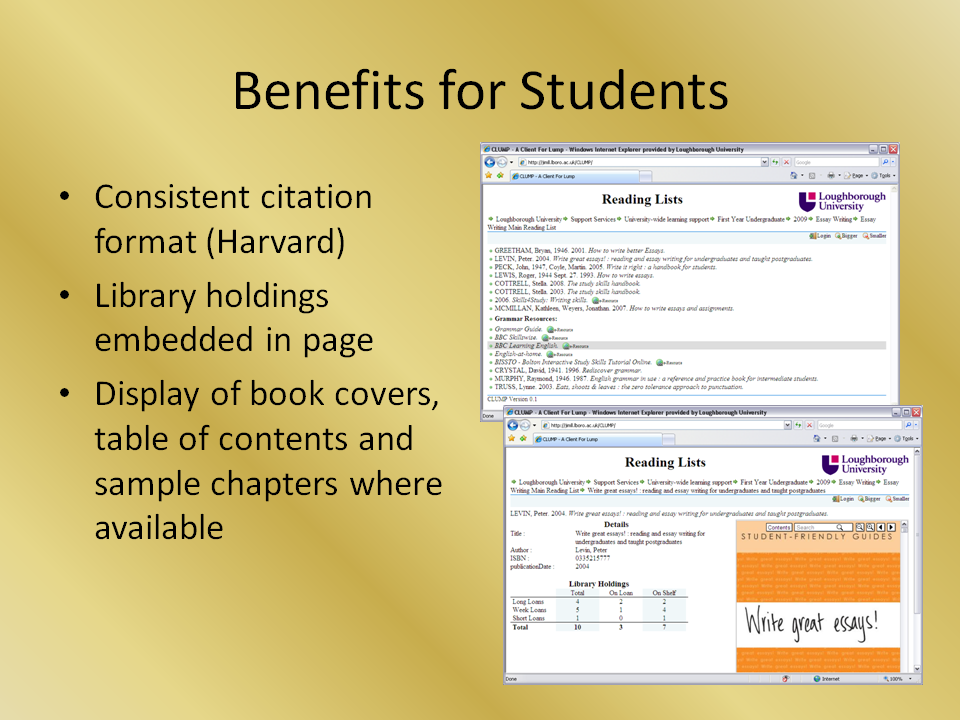

BibGrab

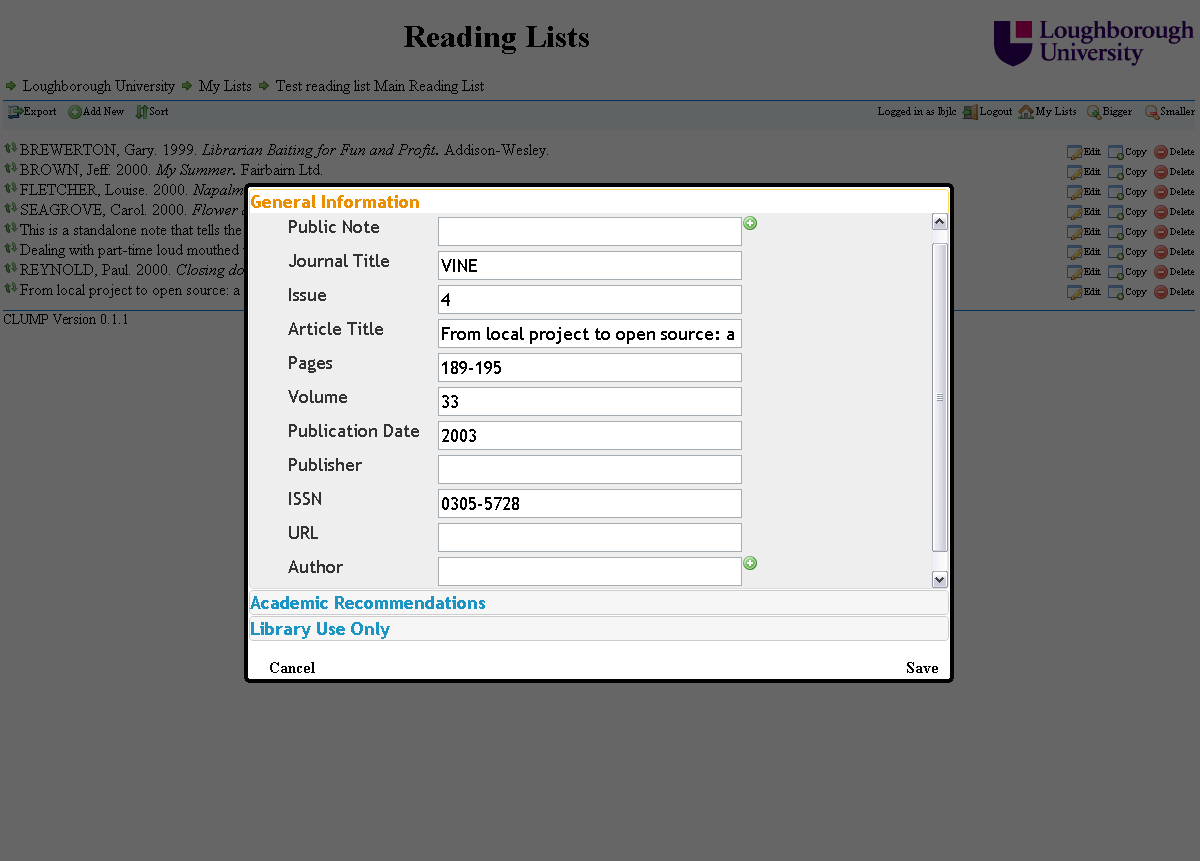

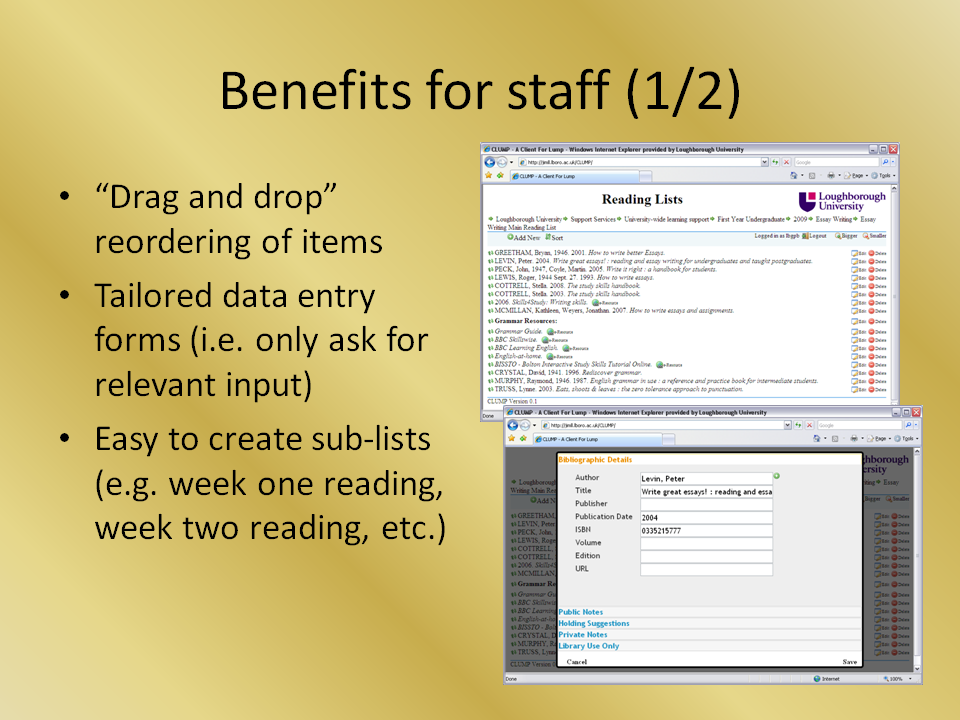

For a long time we have been told that staff want an easy way to add an item to a reading list. To make item entry easier the data entry forms for LORLS v6 are specific to the type of item being added. This should help avoid confusion when people are asked for irrelevant metadata (e.g. asking for an ISBN for a web page).

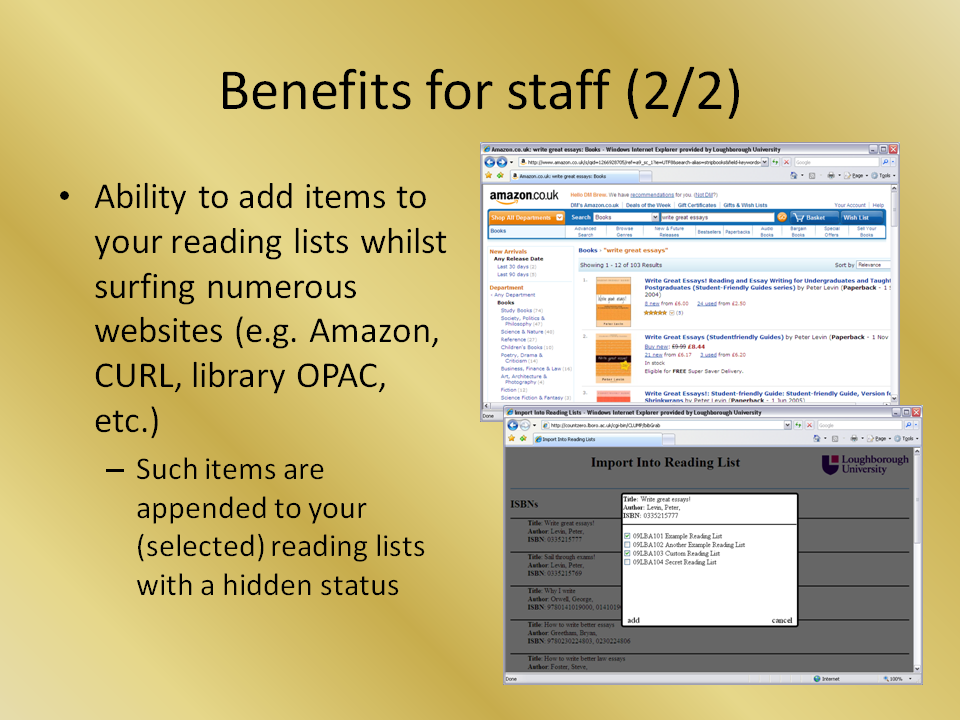

Recently I have been working on BibGrab, our tool to allow staff to add items to their reading list from any web page that has an ISBN or ISSN on it. BibGrab consists of two parts. The first part is a piece of JavaScript that is added as a bookmark to their browser; when they select that bookmark in future, the JavaScript is run with access to the current page. The second part is a CGI script that sits alongside CLUMP, processes the information and presents the options to the users.

The bookmark JavaScript code first decides what the user wants it to work with. If the user has selected some text on the page then it works with that, otherwise it will use the whole page. This helps if there are a lot of ISBNs/ISSNs on the page and the user is only interested in one of them.

It then prepends to that the current page’s URL and title, which lets BibGrab offer the option of adding the web page to a reading list as well as any ISBNs/ISSNs found. This information is then used to populate a form that it appends to the current page. The form’s target is set to ‘_blank’ to open a new window and the action of the form is set to the CGI script. Finally the JavaScript submits the form.
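The bookmark side of this can be sketched in a couple of small functions. The details here are guesses for illustration only: the field name “content”, the use of POST, the newline separated layout of URL, title and text, and the function names are not BibGrab’s real ones.

```javascript
// Build the text to send: the page URL and title first, then either the
// user's selection or, failing that, the whole page text.
function buildPayload(url, title, selectedText, pageText) {
  const hasSelection = selectedText && selectedText.trim() !== '';
  return url + '\n' + title + '\n' + (hasSelection ? selectedText : pageText);
}

// Browser part: append a hidden form to the page and submit it to the
// CGI script in a new window. actionUrl and the field name "content"
// are placeholders.
function submitToBibGrab(doc, win, actionUrl) {
  const form = doc.createElement('form');
  form.method = 'post';
  form.action = actionUrl;
  form.target = '_blank'; // open the results in a new window
  form.style.display = 'none';
  const field = doc.createElement('textarea');
  field.name = 'content';
  field.value = buildPayload(
    win.location.href,
    doc.title,
    String(win.getSelection()),
    doc.body.innerText
  );
  form.appendChild(field);
  doc.body.appendChild(form);
  form.submit();
}
```

In a bookmark this would be called as something like `submitToBibGrab(document, window, ...)` with the address of the CGI script as the last argument.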

The CGI script takes the input from the form and then searches out the web page details the JavaScript added and any possible ISBNs and ISSNs. The ISBNs and ISSNs then have their check digits validated and any that fail are rejected. The remaining details are then used to put together a web page that uses JavaScript to look up the details for each ISBN and ISSN and display these to the user. The web page requires the user to be logged in. As it is using CLUMP’s JavaScript functions for a lot of the work, it can see if they have already logged into CLUMP that session and, if they haven’t, it can then ask them to log in.
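The check digit tests themselves are standard. The sketch below is in JavaScript for illustration (the real check is done by the CGI script) and the function names are made up; it also covers 13 digit ISBNs, which is an assumption about what BibGrab accepts.

```javascript
// Weighted mod-11 check used by ISBN-10 (10 characters) and ISSN
// (8 characters): weights count down from the length to 1, and a final
// "X" stands for the value 10.
function validMod11(code, length) {
  const pattern = new RegExp('^\\d{' + (length - 1) + '}[\\dX]$');
  if (!pattern.test(code)) return false;
  let sum = 0;
  for (let i = 0; i < length; i++) {
    const value = code[i] === 'X' ? 10 : Number(code[i]);
    sum += value * (length - i);
  }
  return sum % 11 === 0;
}

// ISBN-13: weights alternate 1, 3, 1, 3, ... and the total must be a
// multiple of 10.
function validIsbn13(code) {
  if (!/^\d{13}$/.test(code)) return false;
  let sum = 0;
  for (let i = 0; i < 13; i++) {
    sum += Number(code[i]) * (i % 2 === 0 ? 1 : 3);
  }
  return sum % 10 === 0;
}

// Strip hyphens and spaces, upper-case a trailing "x", then classify the
// candidate by length. Returns null for anything that fails its check.
function classify(candidate) {
  const code = candidate.replace(/[\s-]/g, '').toUpperCase();
  if (code.length === 13 && validIsbn13(code)) return 'ISBN-13';
  if (code.length === 10 && validMod11(code, 10)) return 'ISBN-10';
  if (code.length === 8 && validMod11(code, 8)) return 'ISSN';
  return null;
}
```

Anything for which classify returns null would be rejected before the lookup stage.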

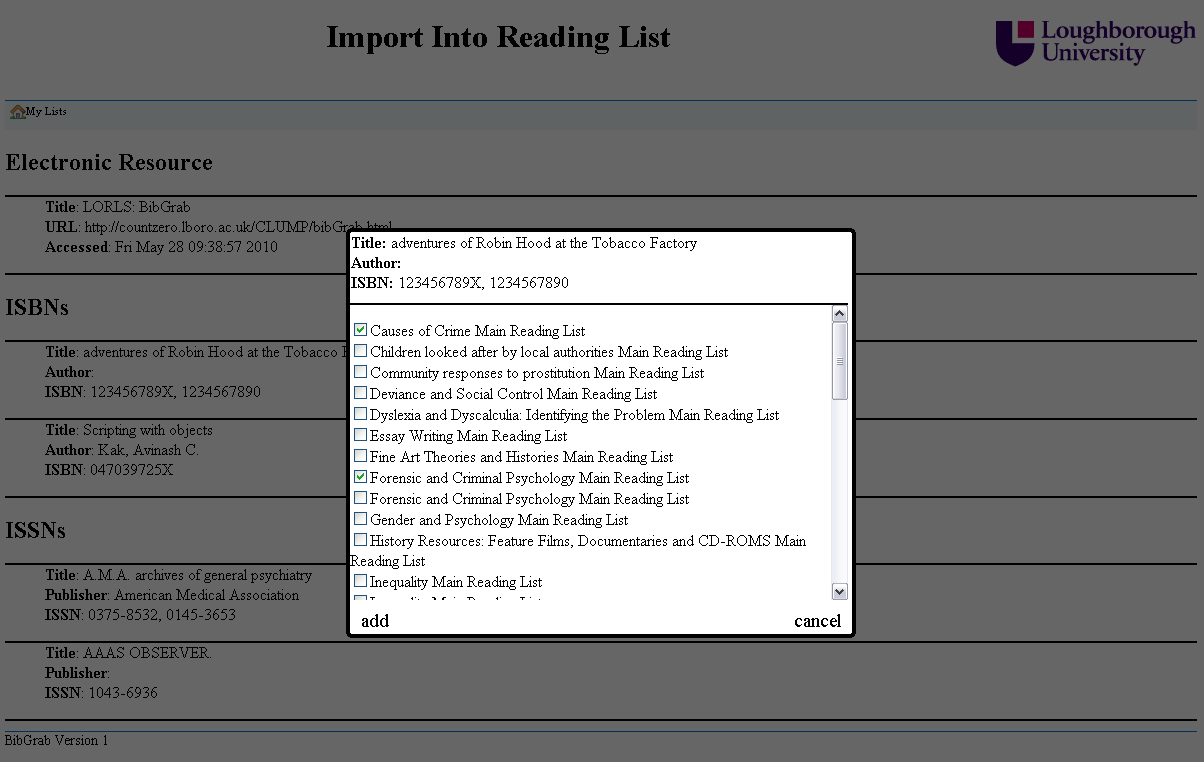

Once logged in they can see all the items that BibGrab found.

When they select an item they are then presented with all the details for that item and, if it is a journal, they are also presented with some boxes for adding in details to specify a specific article, issue or volume. They are also presented with a list of their reading lists, of which they can select as many as they like, and when they click add the item is added to all their selected reading lists. The item is added to the end of each reading list and is in a draft mode. This makes it easier for people to add items to their reading lists when they find them without worrying how it will affect their list’s layout.

After the item has been added to their chosen reading lists it is grayed out as a visual indication that it has already been used. They can still select it again and add it to even more reading lists if they want or they can select another item to add to their reading lists.