The end of the end

So just a few days shy of 24 years we bid a fond farewell to LORLS as we finally decommission the service. We intend to keep this site available for a few more years but won’t be updating it further.

I’d like to take this opportunity to thank all the people who have worked on the system, made suggestions, suffered arguments about what structural units are and generally used the system. In particular I’d like to thank my co-developers, Jon and Jason, without whom LORLS wouldn’t have existed or lasted so long.

<obligatoryHitchhikersQuote>

So long and thanks for all the fish

</obligatoryHitchhikersQuote>

The middle of the end

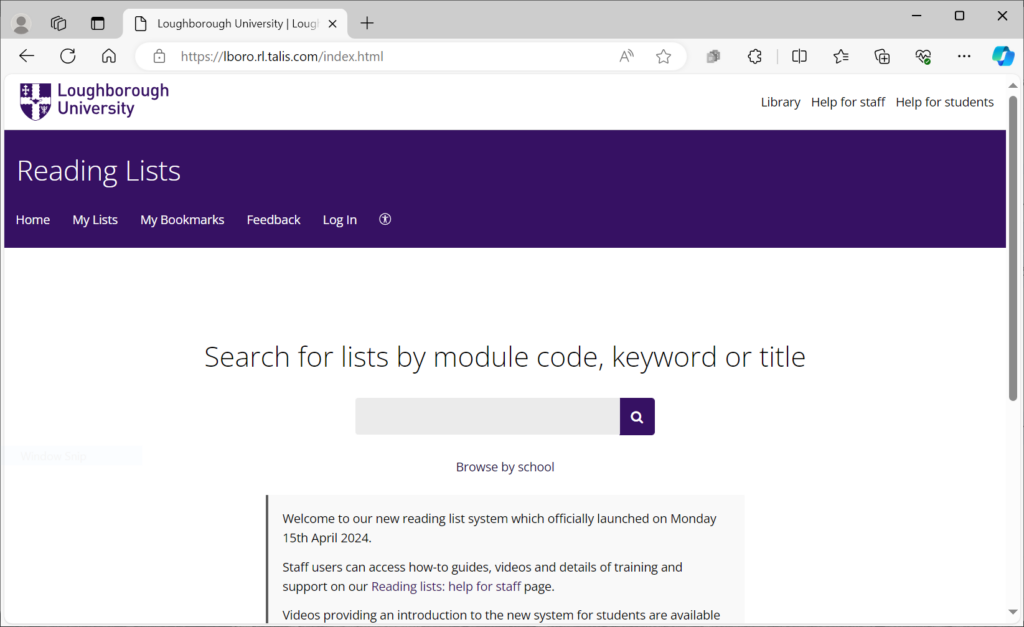

So today the University’s new reading list system (https://lboro.rl.talis.com) went live and we set redirects from LORLS to point to it. We’ll still be running the LORLS server for a couple more months for the benefit of library staff but then it will be decommissioned.

The data migration exercise seemed to go very well although it was interesting to note that despite the two systems having very similar internal data structures there were issues around our allowance for “lists within lists” and how the two systems treat items at draft status. But luckily these issues were easily resolved with a little effort.

The beginning of the end

So today we held the kickoff meeting to move from our in-house reading list system to its chosen successor Talis Aspire.

My role in the project is to provide the data from LORLS in JSON (JavaScript Object Notation) format. Luckily we already wrote a nightly JSON export of all the current lists for integration with our Moodle VLE. So, current me is very grateful to past me and colleagues for developing this feature as it’ll greatly reduce ongoing efforts.

The hope is to launch with the new system, including migrated data, for Easter 2024.

Twenty years of LORLS

It seems like only five minutes ago that we were celebrating the 15th anniversary of the launch of LORLS, and now here we are at its 20th. Unfortunately the current lockdown prevents us from celebrating its birthday in the usual manner (i.e. with cake).

LORLS was initially conceived of in 1999 in response to an enquiry to the Library from the University’s Learning & Teaching Committee. The system was written using the open source LAMP development stack and launched the following year. Since then it has been used by a dozen other institutions, survived six major revisions, three different library management systems and seen the rise and fall of numerous other reading list management systems.

So what does the future hold for LORLS? Well, the sad truth is that all of the staff involved in its development have either moved on to take on new responsibilities or left the institution. And finally, after 20 years, there are now commercial offerings that at least meet, if not exceed, the capabilities of our little in-house system. So whilst LORLS is not yet dead, it is more than likely it will be taking a very well deserved retirement at some point in the coming years.

A Newcomer’s guide to installing “LORLS In A Box”

As has been mentioned in this blog before, back in the days when the MALS team was still the Library Systems team, they developed the Loughborough Online Reading List System (LORLS) to manage the resources for directed student reading.

A recent rebranding of the University’s Library online presence means we now have a requirement to change the styling of our local installation of LORLS. I am still relatively new to the team and as yet have not had cause to look at the front end of LORLS, known affectionately as CLUMP, and this seemed like an ideal introduction for me.

So where better to start than with the documentation the team already put together? My first port of call is the installation instructions, where I discover that some thoughtful techie has already built me a VM to play with. Reading through the guide to the VM you can tell the techie in question was Jon: the passwords used are a good clue, but the giveaway is the advice to make a cup of tea and eat a biscuit whilst waiting for the download.

The download and subsequent import into VirtualBox seem to happen with a minimum of fuss, but here is where I make my first rookie mistake as I choose to reinitialise the MAC address of all network interfaces.

CentOS maintains a mapping of MAC addresses to interface IDs, so it spots that the VM no longer has the MAC address it associated with eth0 but does have an entirely new MAC address, which it associates with eth1. The configuration of the VM’s NIC is tied to eth0 and so I don’t have a working network connection.

This is quick and easy to fix. First I head to /etc/sysconfig/network-scripts where I rename the file ifcfg-eth0 to ifcfg-eth1 (this is not essential but helps with sanity). I then edit this file and change the DEVICE value to be eth1 and update the HWADDR value to be the new MAC address which can be found using ifconfig -a. Restart the network service and all is well.
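The edit to the interface file can be sketched in a few lines of Python. This is only an illustration, assuming the usual KEY=value layout of an ifcfg file; the function name `retarget_ifcfg`, the sample file contents and the MAC addresses are made up for the example and are not taken from the LORLS VM.

```python
def retarget_ifcfg(text: str, new_device: str, new_mac: str) -> str:
    """Return ifcfg contents with DEVICE and HWADDR pointing at the new interface.

    All other settings (BOOTPROTO, ONBOOT, ...) are passed through untouched.
    """
    out = []
    for line in text.splitlines():
        if line.startswith("DEVICE="):
            line = f"DEVICE={new_device}"
        elif line.startswith("HWADDR="):
            line = f"HWADDR={new_mac}"
        out.append(line)
    return "\n".join(out) + "\n"


# Hypothetical contents of ifcfg-eth0 before the fix (values are invented).
SAMPLE = (
    "DEVICE=eth0\n"
    "HWADDR=08:00:27:AA:BB:CC\n"
    "BOOTPROTO=dhcp\n"
    "ONBOOT=yes\n"
)

if __name__ == "__main__":
    # The new MAC address would come from the output of `ifconfig -a`.
    print(retarget_ifcfg(SAMPLE, "eth1", "08:00:27:11:22:33"), end="")
```

On the VM itself you would read the renamed ifcfg-eth1 file, write the result back as root and then restart the network service as described above; for a one-off fix a text editor is just as quick.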

Of course if you don’t reinitialise the MAC address when importing the VM then you shouldn’t see this issue and it should just work straight away.

When starting the VM, as described in Jon’s instructions, I am shown above the login prompt the IP address assigned to the VM by DHCP and the URL for my LORLS instance. Plugging these into my browser takes me to a vanilla installation of LORLS running on my VM. One note here: be sure to type CLUMP and not clump, as it is case sensitive.

So all pretty straightforward to get up and running, in fact I am pleased I made the mistake with the MAC address as there would have been little of note to write about otherwise. Now onto setup and customisation but I may save that for another “Newcomer’s guide to LORLS” blog post in the future.

LORLS Implementation at DBS

I note with great interest that Dublin Business School has recently had an article accepted in the New Review of Academic Librarianship, regarding their faculties’ perceptions of LORLS.

In the article Marie O’Neill and Lara Musto discuss a survey of faculty staff at DBS which reveals that their awareness of the system is greatly impacted by the amount of time they spend teaching. They also show that promoting appropriate resources to students and improving communication between faculty and library staff are seen as major advantages of having a RLMS. I particularly liked the following quote that came out of one of their focus groups:

“One of the challenges nowadays is recognising that students are reading more than books and articles. They are reading the review section of IMDb for example. Reading lists have to change and our perception of reading lists.”

One of the other outputs of the article was a process implementation chart, which was created to inform other institutions how they might best implement LORLS. This chart is reproduced below with the kind permission of the authors.

The article concludes with a strong desire from DBS faculty for greater integration with their Moodle VLE system. This is something that we are actively investigating and we have begun to pilot a Moodle plug-in at Loughborough, which we hope to include in a future release of the LORLS software.

Word document importer

At this year’s Meeting the Reading List Challenge (MTRLC) workshop, my boss Gary Brewerton demonstrated one of the features we have in LORLS: the ability to ingest a Word document that contains Harvard(ish) citations. Our script reads in an Office Open XML (.docx) format Word document and spits out some structured data ready to import into a LORLS reading list. The idea behind this is that academics still create reading lists in Word, despite us having had an online system for 15 years now. The easier we can make it for them to get these Word documents into LORLS, the more likely it is that we’ll actually get to see the data. We’ve had this feature for a while now, and it’s one of those bits of code that we revisit every so often when we come across new Word documents that it doesn’t handle as well as we’d like.

The folk at MTRLC seemed to like it, and Gary suggested that I yank the core of the import code out of LORLS, bash it around a bit and then make it available as a standalone program for people to play with, including sites that don’t use LORLS. So that’s what I’ve done – you can download the single script from:

https://lorls.lboro.ac.uk/WordImporter/WordImporter

The code is, as with the rest of LORLS, written in Perl. It makes heavy use of regular expression pattern matching and Z39.50 look ups to do its work. It is intended to run as a CGI script, so you’ll need to drop it on a machine with a web server. It also uses some Perl modules from CPAN that you’ll need to make sure are installed:

- Data::Dumper

- Algorithm::Diff

- Archive::Any

- XML::Simple

- JSON

- IO::File

- ZOOM

- CGI

The code has been developed and run under Linux (specifically Debian Jessie and then CentOS 6) with the Apache web server. It doesn’t do anything terribly exciting with CGI though, so it should probably run OK on other platforms as long as you have a working Perl interpreter and the above modules installed. As distributed it looks at the public Bodleian Library Z39.50 server in Oxford, but you’ll probably want to point it at your own library system’s Z39.50 server (the variable names are pretty self-explanatory in the code!).

This script gives a couple of options for output. The first is RIS format, which is a citation interchange format that quite a few systems accept. It also has the option of JSON output if you want to suck the data back into your own code. If you opt for JSON format you can also include a callback function name so that you can use JSONP (and thus make use of this script in JavaScript code running in web browsers).
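To give a feel for what these two output styles look like, here is a minimal sketch in Python (the real script is Perl, and this is not its code). The dictionary keys, the `to_ris` and `to_jsonp` function names and the `handleItems` callback are all assumptions made for the example; the actual field names and parameters used by WordImporter may well differ.

```python
import json
import re
from typing import Optional


def to_ris(item: dict) -> str:
    """Render one citation as an RIS record (TY first, ER last)."""
    lines = [f"TY  - {item.get('type', 'GEN')}"]
    for author in item.get("authors", []):
        lines.append(f"AU  - {author}")
    # Map a few illustrative keys onto their RIS tags.
    for tag, key in (("TI", "title"), ("PB", "publisher"), ("PY", "year")):
        if item.get(key):
            lines.append(f"{tag}  - {item[key]}")
    lines.append("ER  - ")
    return "\n".join(lines) + "\n"


def to_jsonp(items: list, callback: Optional[str] = None) -> str:
    """Return plain JSON, or JSON wrapped in a callback call for JSONP."""
    body = json.dumps(items)
    if callback is None:
        return body
    # Only accept a simple identifier so the callback cannot inject script.
    if not re.fullmatch(r"[A-Za-z_$][A-Za-z0-9_$]*", callback):
        raise ValueError("callback must be a plain JavaScript identifier")
    return f"{callback}({body});"


if __name__ == "__main__":
    book = {
        "type": "BOOK",
        "authors": ["Adams, D."],
        "title": "The Hitchhiker's Guide to the Galaxy",
        "publisher": "Pan Books",
        "year": "1979",
    }
    print(to_ris(book))
    print(to_jsonp([book], "handleItems"))
```

The JSONP form is just the JSON body wrapped in a function call, which is what lets a page on another site load the result through a script tag.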

We hope you find this script useful. And if you do feel up to tweaking and improving it, we’d love to get patches and fixes back!

The continuing battle of parsing Word documents for reading list material

At this year’s Meeting The Reading List Challenge (MTRLC) workshop, Gary Brewerton (my boss) showed the delegates one of our LORLS features: the ability to suck citation data out of Word .docx documents. We’ve had this for a few years and it is intended to allow academics to take existing reading lists that they have produced in Word and import them relatively easily into our electronic reading lists system. The nice front end was written by my colleague Jason Cooper, but I was responsible for the underlying guts that the APIs call to try parsing the Word document and turn it into structured data that LORLS can understand and use. We wrote it originally based on suggestions from a few academics who already had reading lists with Harvard style references in them, and they used it to quickly populate LORLS with their data.

Shortly after the MTRLC workshop, Gary met with some other academics who also needed to import existing reading lists into LORLS. He showed them our existing importer and, whilst it worked, it left quite a lot of entries as “notes”, meaning it couldn’t parse them into structured data. Gary then asked me to take another look at the backend code and see if I could improve its recognition rate.

I had a set of “test” lists of varying lengths donated by the academics, all from the same department. With the existing code, in some cases less than 50% of the items in these documents were recognised at all, and of those some were misclassified (e.g. book chapters appearing as books).

The existing LORLS .docx import code used Perl regular expression pattern matching alone to try to work out what sort of work a citation referred to. This worked OK with Word documents where the citations were well formed. A brief glance through the new lists showed that lots of the citations were not well formed. Indeed the citation style and layout seemed to vary from item to item, probably because they had been collected over a period of years by a variety of academics. Whilst I could modify some of the pattern matches to help recognise some of the more obvious cases, it was clear that the code was going to need something extra.

That extra turned out to be Z39.50 look ups. We realised that the initial pattern matches could be quite generalised to see if we could extract authors, titles, publishers and dates, and then use those to do a Z39.50 look up. Lots of the citations also had classmarks attached, so we’d got a good clue that many of the works did exist in the library catalogue. This initial pattern match was still done using regular expressions and needed quite a lot of tweaking to get recognition accuracy up. For example, spotting publishers separated from titles can be “interesting”, especially if the title isn’t delimited properly. We can spot some common cases, such as publishers located in London, New York or in US states with two letter abbreviations. It isn’t foolproof, but it’s better than nothing.
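As a rough illustration of that first, generalised pattern-matching pass, here is a sketch in Python rather than the Perl of the real importer. The two regular expressions and the `parse_citation` name are my own guesses at the sort of thing involved, not the patterns LORLS actually uses, and the Z39.50 look up that would follow is left out entirely.

```python
import re
from typing import Optional

# "Authors (Year) rest of citation" - a very loose Harvard-ish shape.
CITATION = re.compile(
    r"^(?P<authors>.+?)\s*\((?P<year>\d{4})[a-z]?\)\s*(?P<rest>.+)$"
)

# Common publisher locations: London, New York, or "Town, ST" for US states.
PLACE_PUBLISHER = re.compile(
    r"(?P<place>London|New York|[A-Z][a-z]+(?: [A-Z][a-z]+)*, [A-Z]{2})"
    r"\s*:\s*(?P<publisher>[^.:]+)\.?\s*$"
)


def parse_citation(text: str) -> Optional[dict]:
    """Pull authors, year, title and publisher out of one citation line.

    Returns None when the line does not look like a citation, which is
    the case that would be left as an unstructured note.
    """
    match = CITATION.match(text.strip())
    if not match:
        return None
    rest = match.group("rest").strip()
    result = {
        "authors": match.group("authors").strip(),
        "year": match.group("year"),
        "title": rest.rstrip("."),
        "place": None,
        "publisher": None,
    }
    pub = PLACE_PUBLISHER.search(rest)
    if pub:
        # Everything before the place name is treated as the title.
        result["title"] = rest[: pub.start()].strip().rstrip(".")
        result["place"] = pub.group("place")
        result["publisher"] = pub.group("publisher").strip()
    return result


if __name__ == "__main__":
    print(parse_citation(
        "Adams, D. (1979) The Hitchhiker's Guide to the Galaxy. London: Pan Books."
    ))
```

The extracted fields would then feed the catalogue search. A sketch like this also shows how fragile the approach is: a citation with no bracketed year falls straight through to a note, and one with an unlisted publisher location keeps the publisher inside the title.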

However, using this approach left only around 5% of the entries in the documents classified as unstructured notes when visual checking indicated that they were probably citations. These remaining items are currently left as notes, as there are a number of reasons why the code can’t parse them.

The number one reason is that they don’t have enough punctuation and/or formatting in the citation to allow regular expressions to determine which parts are which. In some cases the layout doesn’t even really bear much relation to any formal Harvard style: the order of authors and titles can sometimes switch round, and in some cases it isn’t clear where the title finishes and the publisher begins. In a way they’re a good example to students of the sort of thing they shouldn’t have in their own referencing!

The same problems encountered using the regular expressions would happen with a formal parser, as these entries are effectively “syntax errors” if treating Harvard citations as a sort of grammar. This is probably about the best we’ll be able to do for a while, at least until we’ve got some deep AI that can actually read the text and understand it, rather than just scan for patterns.

And if we reach that point LORLS will probably become self aware… now there’s a scary thought!

Happy birthday LORLS!

Fifteen years ago a reading list system (initially unnamed) was launched at Loughborough University. The system allowed staff to input reading lists item-by-item using an HTML form and display these web-based lists to our students with links to the library catalogue to check stock availability.

Since that time the system has undergone six major revisions. These revisions have extended the functionality to allow “drag-and-drop” reordering of lists, display of stock availability and book covers, importing of citations from Word documents and so much more! Also the system gained a name, LORLS.

So today we’re celebrating fifteen years of LORLS!

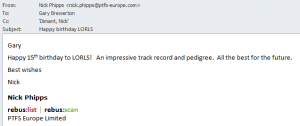

We’ve even had birthday greetings and cards from our friends at PTFS Europe and Talis.

Thanks guys!

Extending the Structural Unit Types available

One additional Structural Unit Type (SUT) that we have been asked for is Audio Visual (AV) material, e.g. CDs, DVDs, Film, etc. While we’ve manually added an AV SUT to our local instance we didn’t have an easy way to extend this to other instances of LORLS.

So to tackle this we have put together a quick Perl script that can be run from the command line which adds in the new AV SUT. If your LORLS install doesn’t have an AV Material SUT and you would like to add it then here are the instructions to do so:

- Back up your LORLS install (Don’t forget the database as this will be altered)

- Download the latest extendSUTs script (e.g. wget "https://blog.lboro.ac.uk/lorls/wp-content/uploads/sites/3/2014/11/extendSUTs")

- Make the script executable (e.g. chmod +x extendSUTs)

- Run the script (e.g. ./extendSUTs --database=<database> --user=<database user>)

- When prompted enter the database user’s password

- If the script fails due to missing the Term::ReadKey Perl module then install it and try the script again (RedHat/CentOS should just need to run “sudo yum install perl-TermReadKey”)

- Once the script has run open a new browser session and try adding a new AV Material entry to a test list.