Peer assessment of conceptual understanding of mathematics

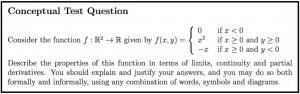

Ian Jones and Lara Alcock in the Mathematics Education Centre recently trialled an innovation designed to assess undergraduates’ conceptual understanding of mathematics. Undergraduates first sat a written test in which they had to explain their understanding of key concepts from the module. They then assessed their peers’ work online using an approach called Adaptive Comparative Judgement (ACJ). The ACJ system presented each undergraduate with pairs of their peers’ scripts, and they had to decide which of the two answers demonstrated the better conceptual understanding of the test question. Each student completed 20 such paired judgements, and the outcomes were used to construct a rank order of students.
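The post does not say exactly how e-scape turns paired judgements into a rank order. Comparative judgement is commonly analysed with a Bradley-Terry (or closely related Rasch) model, so the sketch below shows that general idea under stated assumptions: each judgement is a hypothetical `(winner, loser)` pair of script IDs, strengths are fitted with Hunter's MM updates, and a small smoothing term (one virtual win and one virtual loss against a reference script of strength 1.0) is our own addition to keep scores finite. It illustrates the technique and is not the e-scape implementation; in particular it omits the adaptive choice of which pairs to show next.

```python
from collections import defaultdict


def bradley_terry(judgements, n_iter=500):
    """Estimate a strength score per script from (winner, loser) pairs.

    Uses Hunter's MM updates for the Bradley-Terry model. Smoothing
    assumption (not from the original study): every script gets one
    virtual win and one virtual loss against a reference script of
    strength 1.0, so scores stay finite even for a script that won or
    lost every comparison.
    """
    scripts = sorted({s for pair in judgements for s in pair})
    wins = defaultdict(float)
    n_pairs = defaultdict(float)  # comparisons per unordered pair of scripts
    for winner, loser in judgements:
        wins[winner] += 1
        n_pairs[frozenset((winner, loser))] += 1

    strength = {s: 1.0 for s in scripts}
    for _ in range(n_iter):
        updated = {}
        for i in scripts:
            # Two virtual comparisons against the reference (strength 1.0).
            denom = 2.0 / (strength[i] + 1.0)
            for j in scripts:
                if j != i:
                    n_ij = n_pairs.get(frozenset((i, j)), 0.0)
                    denom += n_ij / (strength[i] + strength[j])
            # MM update: smoothed wins divided by the weighted game count.
            updated[i] = (wins[i] + 1.0) / denom
        strength = updated
    return strength


def rank_order(judgements):
    """Return script IDs ordered from strongest to weakest."""
    strength = bradley_terry(judgements)
    return sorted(strength, key=strength.get, reverse=True)
```

For example, if script A was judged better than B and C, and B better than C, then `rank_order([("A", "B"), ("A", "C"), ("B", "C")])` places A first, B second and C last.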


The researchers wanted to evaluate how well the students assessed their peers’ work. Mathematics experts were also asked to rank the scripts using ACJ, and the rank orders produced by the peers and by the experts were compared. Statistical analyses revealed that the rank orders produced by both the peers and the experts were reliable and internally consistent. Moreover, the peers performed similarly to the experts, showing that they were able to assess one another’s work to an acceptable extent. Because the innovation was new, grades were awarded to the students on the basis of the experts’ rank order, but in future it should be possible to use the peers’ own rank order to assign grades.
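The post does not name the statistics used to compare the peers’ and experts’ rank orders. One standard way to measure how closely two rank orders of the same scripts agree is Spearman’s rank correlation, sketched below under the assumption that each rank order is a list of script IDs with no ties. A value of 1 means identical orders and -1 means exactly reversed orders; this is an illustration, not necessarily the analysis the researchers ran.

```python
def spearman_rho(order_a, order_b):
    """Spearman rank correlation between two rank orders (lists of IDs, no ties)."""
    if set(order_a) != set(order_b) or len(order_a) != len(order_b):
        raise ValueError("rank orders must contain the same scripts exactly once")
    n = len(order_a)
    if n < 2:
        raise ValueError("need at least two scripts to correlate")
    position_b = {script: r for r, script in enumerate(order_b)}
    # Sum of squared differences between each script's two rank positions.
    d_squared = sum((r - position_b[script]) ** 2 for r, script in enumerate(order_a))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))
```

For example, a hypothetical peer order `["s1", "s2", "s3", "s4"]` and expert order `["s2", "s1", "s3", "s4"]` differ only in the top two places, giving a correlation of 0.8.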

To further evaluate the approach, non-experts also produced a rank order using ACJ. The non-experts were PhD students from non-mathematical disciplines who had never studied maths beyond GCSE level or equivalent. Surprisingly, even these non-experts performed quite well, matching the rank order produced by the experts to some extent. This suggests that generic academic skills such as clear presentation and structure are quite informative about students’ conceptual understanding of mathematics. Nevertheless, the non-experts did not perform as well as the undergraduates or the experts, indicating, unsurprisingly, that an understanding of maths is important for properly assessing conceptual understanding. This finding was supported by follow-up interviews with participants: when asked to explain how they reached their judgements, experts typically talked about mathematical content, whereas non-experts typically talked about surface features.

At this early stage, the evaluation focused on technical implementation as well as on reliability and validity. However, a key attraction of using ACJ for peer assessment is the potential learning benefit of students comparing pairs of their peers’ work, and this is something we intend to evaluate in future work. As one undergraduate said in a follow-up interview: “It is hard to judge other people’s work … Sometimes we think we understand, but we have to make sure that if someone else reads who has no clue what the concept is, by looking at the question they should be convinced it answers the question. So it is important to write in a good way. It is an improvement for me for my future writing.”

See previous blog post on ACJ.

[Footnote: The ACJ system used in the study is “e-scape”, owned and managed by TAG Developments.]