Developing consistent marking and feedback in Learn

Background

An increasing number of Schools within Loughborough University are looking for ways to make marking and feedback more consistent, and many are moving towards online submission to support this. As a result, colleagues are exploring how rubrics or grid marking schemes can be used to give feedback electronically in an efficient and timely manner.

Phillip Dawson (2017) reported that:

“Rubrics can support development of consistency in marking and feedback; speed up giving feedback to save time for feed-forward to students; and can additionally be used pre-assessment with students to enable a framework for self-assessment prior to submission.” (pp. 347-360)

Learn offers several types of rubric and marking guide, and these take different forms in different activities, each with its own requirements and results. This can make the transition to online marking daunting and, as we recently found, it requires a carefully thought-out approach.

Loughborough Design School recently moved to online submission and online marking using the Learn Assignment Activity. Following this decision, we ran several workshops to help staff make the transition, including one dedicated to rubrics. This blog post explores and explains the issues we encountered in the School, which we are also facing more widely across the University, and offers some options for addressing them.

What is the challenge?

Staff already use hard-copy feedback sheets that serve the same aims as a rubric (i.e. consistency of marking and feedback), but many of these existing rubrics do not transfer neatly into the Learn Assignment Activity and require a blend of features.

For example, a common feature of existing rubrics is that, as well as providing a set of levels for each criterion, they include a space to enter a specific mark, e.g. 9 out of 10 for a particular criterion. This level of granularity can make the difference between a first-class honours degree and a 2:1 and, crucially, it shows students where they can gain marks. Rubrics in the Learn Assignment Activity do not allow for this granularity: you can assign a range to a level (e.g. 60-70%), but not a specific mark within that range.
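To make the granularity problem concrete, here is a small illustrative sketch. It assumes, as we understand rubrics in Learn to work, that each level awards one fixed points value even when its descriptor mentions a band such as 60-70%. The `Level` class, the `GOOD` level and its 65-point value are hypothetical examples, not Learn's own data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Level:
    """Hypothetical rubric level: the band shown to students plus the single value awarded."""
    name: str
    low: int     # bottom of the band described to students (e.g. 60%)
    high: int    # top of the band described to students (e.g. 70%)
    points: int  # the one value awarded whenever this level is selected


GOOD = Level("Good", 60, 70, 65)  # hypothetical example level


def rubric_mark(level: Level) -> int:
    # Online rubric: selecting the level always awards its fixed points,
    # whatever the quality of the script within the band.
    return level.points


def paper_sheet_mark(level: Level, mark: int) -> int:
    # Paper feedback sheet with a mark box: the tutor can record any
    # specific mark, provided it falls inside the band for the level.
    if not level.low <= mark <= level.high:
        raise ValueError(f"{mark} is outside the {level.name} band {level.low}-{level.high}")
    return mark


print(rubric_mark(GOOD), rubric_mark(GOOD))                  # 65 65
print(paper_sheet_mark(GOOD, 69), paper_sheet_mark(GOOD, 61))  # 69 61
```

A strong script (69) and a weak one (61) in the same band both receive 65 through the rubric, whereas the paper sheet preserves the difference that tells each student where marks were gained.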

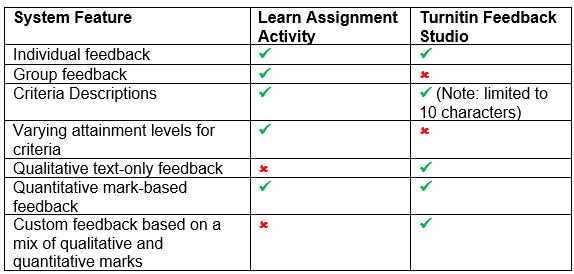

What’s the difference between the Learn Assignment Activity and Turnitin Feedback Studio rubrics?

What’s the difference between a rubric and a marking guide?

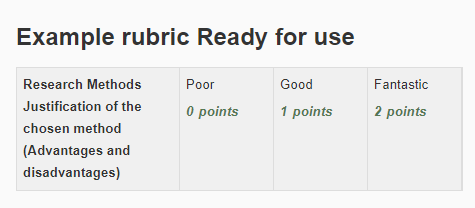

A rubric aligns marking criteria with fixed levels of attainment. For instance, a rubric may feature several criteria with attainment levels ranging from Fail, Poor and Average to Good and Excellent, and each level carries a description that tells the student (and tutor) where marks have been gained and lost.

A marking guide is more flexible and simpler. You still have criteria, but instead of levels, the tutor gives a qualitative summary of how the student performed and a mark for each criterion.

For both the rubric and the marking guide, the criteria can be weighted to reflect each component's importance in the overall mark.
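The sketch below shows how weighted criteria might combine into an overall mark, and how the two tools differ in what feeds into that sum. The criterion names, percentage weights and marks are hypothetical. Expressing weights as explicit percentages is also an assumption for clarity; in Learn, weighting may instead be expressed through the points or maximum score given to each criterion.

```python
# Hypothetical criteria with weights expressed as whole percentages (sum to 100).
WEIGHTS = {"Research": 40, "Design": 40, "Presentation": 20}


def overall_mark(criterion_marks: dict[str, int], weights: dict[str, int]) -> float:
    """Combine per-criterion percentage marks into a weighted overall percentage."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100")
    missing = weights.keys() - criterion_marks.keys()
    if missing:
        raise ValueError(f"no mark given for: {sorted(missing)}")
    return sum(criterion_marks[c] * w for c, w in weights.items()) / 100


# Rubric: each criterion receives the fixed value of the level selected.
rubric = {"Research": 65, "Design": 65, "Presentation": 55}
# Marking guide: the tutor enters a specific mark for each criterion.
guide = {"Research": 69, "Design": 68, "Presentation": 58}

print(overall_mark(rubric, WEIGHTS))  # 63.0
print(overall_mark(guide, WEIGHTS))   # 66.4
```

The weighting arithmetic is the same for both; the difference lies in whether each criterion's mark is a fixed level value or a specific mark chosen by the tutor.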

Moving forward

The Centre for Academic, Professional and Organisational Development plans to offer a new rubric workshop in Semester 2 of the 2018/19 academic year. The workshop will provide clear guidance on the benefits, use and technical considerations of rubrics and marking guides. Details of existing workshops are available at: https://www.lboro.ac.uk/services/cap/courses-workshops/

We’ll continue to work with Schools and support academics on a one-to-one basis where requested. We recognise that every case is different and recommend getting in touch with the Technology Enhanced Learning Officer and Academic Practice Developer within your School for further support.

Discussions will also continue with Turnitin.co.uk and the Moodle community (Moodle is the system behind Learn) so that we can stay ahead of changes and new rubric features as they arrive.

References

Dawson, P. (2017) Assessment rubrics: towards clearer and more replicable design, research and practice. Assessment & Evaluation in Higher Education, 42(3), pp. 347-360.